AI Testing: Deliver better software, faster

Software testing is an information service. Its goal is to provide stakeholders with objective information about the defects present in their system. A software defect is anything in the code, configuration, data or specification that can decrease the value of software to its stakeholders. Neglecting software testing translates to making high-stakes decisions without sufficient intelligence.

The effectiveness of an information service can be judged based on its accuracy, relevance, and accessibility. Improving software testing implies making it progressively better at detecting and interpreting defects, whilst reducing the timeframes and costs.

The growth of available digital data and computational capabilities has enabled improvements in efficiency, augmentation, and autonomy across industries through the use of subsymbolic AI. In software testing, AI helps harness the power of big data analytics and enhances both the generation of test ideas and the interpretation of test results. Smart execution capabilities contribute to full-scale AI adoption in software testing.

More on AI-enabled test case generation

Our AI Testing approach has been proven across capital markets. It offers tangible enhancements to our clients’ automated testing of matching engines, market data, market surveillance, clearing and settlement systems worldwide. The approach has also helped them decrease time to market, maintain regulatory compliance and auditability of test results, and improve scalability, latency, and operational resiliency.

AI Testing is domain-agnostic. It is applicable to a variety of business use cases driven by distributed, mission-critical systems. Typically supported by protocol-based interactions and a multi-channel architecture, such systems are prone to errors that can have cascading effects, causing interoperability, concurrency, latency, and other complex issues.

Exactpro is well-positioned to test traditional, as well as hybrid and AI-based systems. The information we provide is the result of deep, independent, and comprehensive AI-enabled exploration of the system, its components and their interdependencies.

Case Studies: Machine Learning in Software Testing

Whether you are a banking or payments infrastructure operator looking to streamline your ISO 20022 migration, an insurance or risk management technology provider deploying AI for claims, underwriting or fraud detection, or a shipping and logistics innovator disrupting the technology behind dynamic route optimisation – we provide the AI and testing know-how to drive your innovation efforts. This section introduces you to the AI Testing use cases that we have successfully implemented. We look forward to expanding the scope of the approach to other domains and use cases.

- Trading Systems

- Investment Banking Systems

- Clearing and Settlement Systems

- Retrieval-Augmented Generation (RAG) Systems

- Your platform

You received an AI mandate. What happens next?

Many financial organisations today have an explicit or an implicit AI mandate. But we all know that this technology transition is unlike any other… AI models are non-deterministic, which means monitoring, validation and ‘humans-in-the-loop’ become ongoing responsibilities rather than one-time setup tasks. On top of that, there are transparency, interpretability and explainability challenges to mitigate.

A pattern we see across the industry is AI adoption originating in QA, particularly in software testing. Starting there provides teams with immediate operational benefits while keeping risk exposure low, and builds a practical understanding of how AI behaves before it reaches customers or markets. This foundational knowledge can then be transferred across the enterprise to serve more use cases and projects.

At Exactpro, we work with regulated fintech teams that take this approach, helping them apply AI safely and deliberately in practice. This can start as a small pilot, a focused testing engagement or an effort to build internal AI capability and governance discipline.

Are you looking to discuss your AI integration strategy?

- AI Testing training for teams

- Practical guidance on AI systems

- From AI basics to excellence

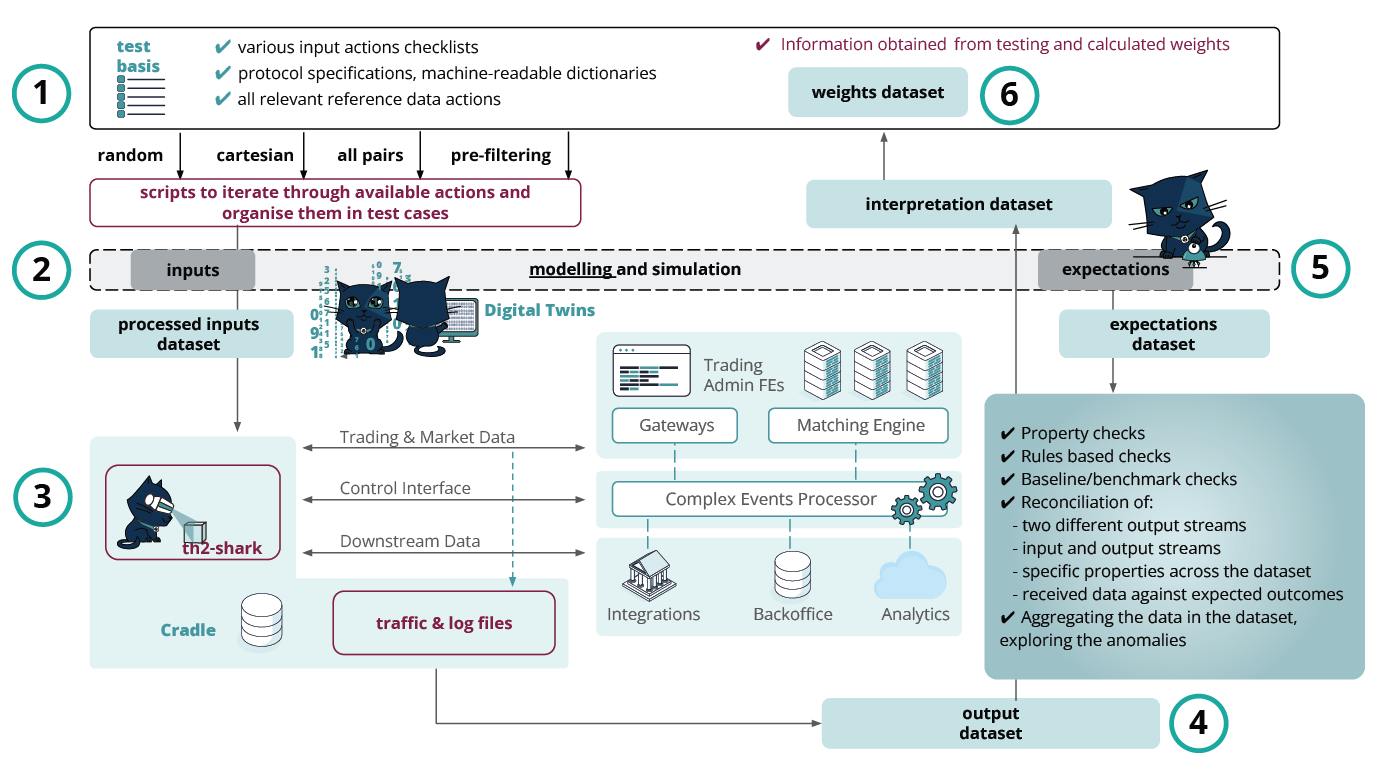

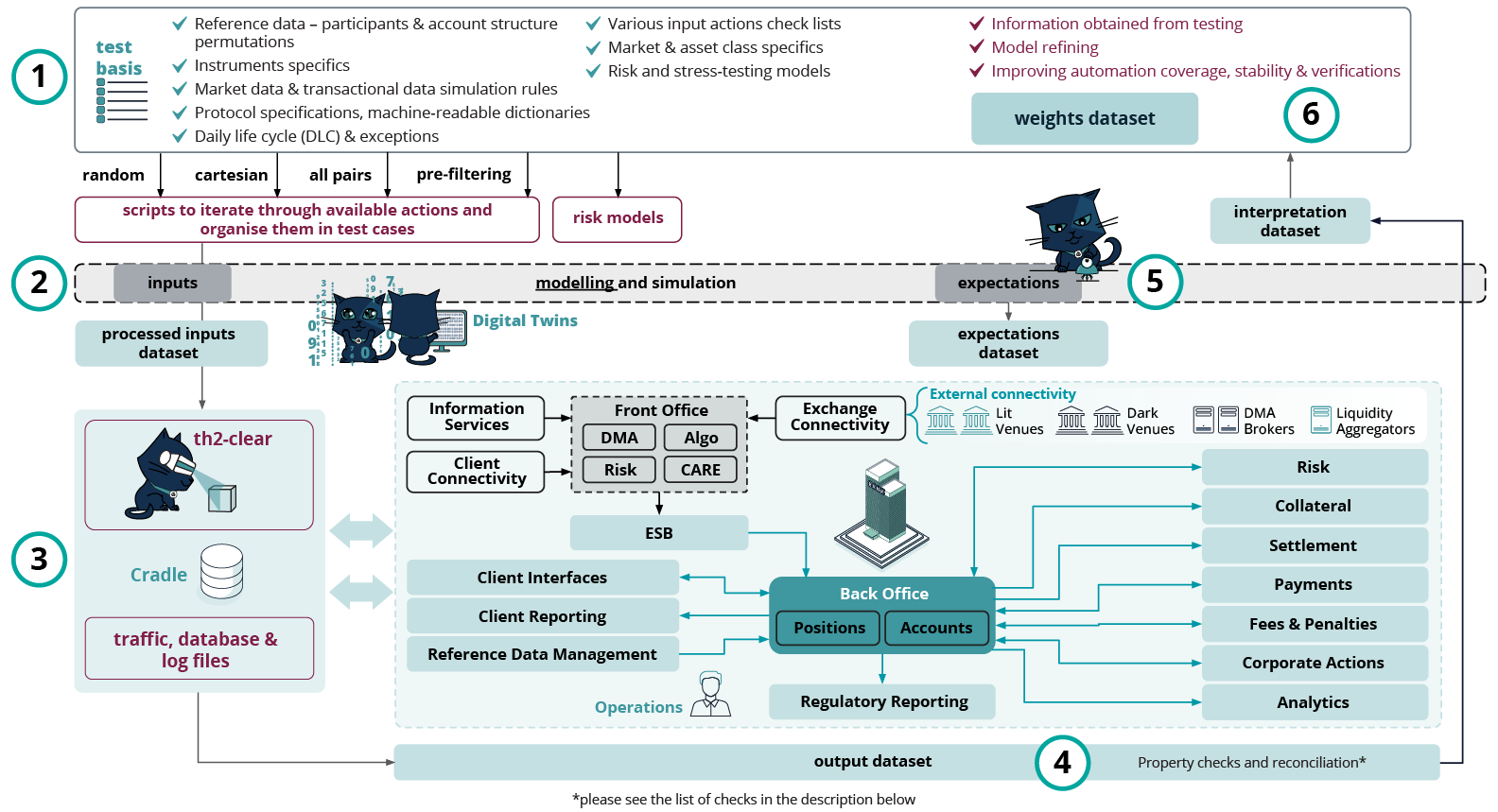

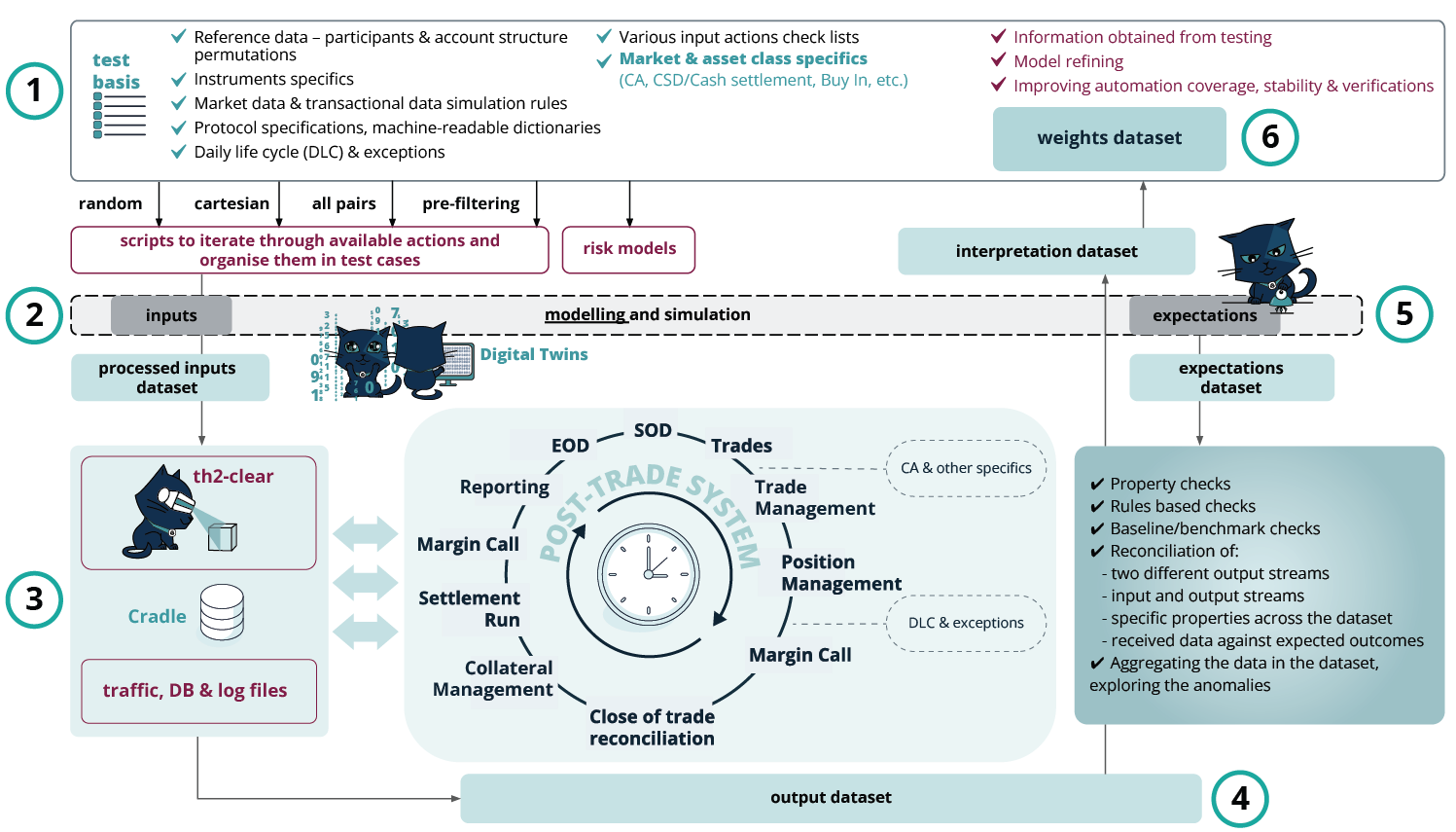

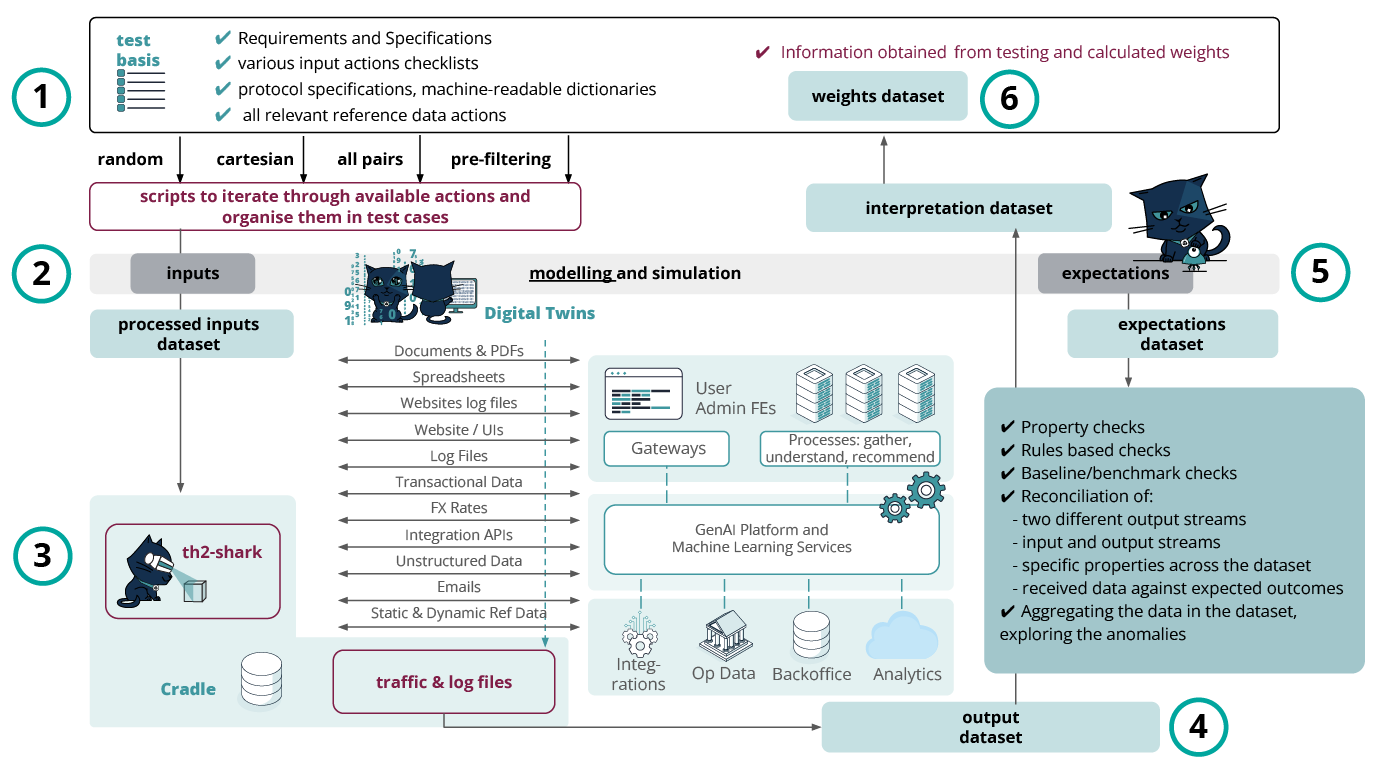

1. Test basis analysis – analysing the data available from specifications, system logs and other formal and informal sources, normalising it and converting it into machine-readable form.

2. Input dataset & expected outcomes dataset – the knowledge derived from the test basis enables the creation of the input dataset used later for test execution. It also lays the foundation for modelling a ‘digital twin’ of the SUT – a machine-readable description of its behaviour expressed as input sequences, actions, conditions, outputs, and flow of data from input to output. The resulting ‘expected outcomes’ dataset then helps reconcile the data received from the SUT during testing with the pre-calculated expected values.

3. Test execution, output dataset – all possible test script modifications are passed on for execution to Exactpro’s AI-enabled framework for automation in testing. The framework converts the test scripts into the required message format and, upon test execution, collects traffic files in raw format for analysis, unified storage and compilation of the output dataset.

4. Enriching the output dataset with annotations – the ‘output’ dataset is annotated for interpretation purposes and subsequent application of AI-enabled analytics. This also enables the development of the interpretation dataset – a dataset containing aggregate data and annotations from the processed ‘output’ and ‘expected outcomes’ datasets in Steps 4 and 5.

5. Model refinement – this step plays a major role in the development of the ‘digital twin’. Through property and reconciliation checks in iterative movements between Steps 4 and 5, the model logic is gradually trained and improved to provide a more accurate interpretation of the ‘output’ dataset and to produce updated versions of the ‘expected outcomes’ and ‘interpretation’ datasets.

6. Test reinforcement and learning. Weights dataset – discriminative techniques are applied to the test library being developed, to identify the best-performing test scripts and reduce the number of tests required for execution to the minimum that still covers all target conditions and data points extracted from the model.
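The reconciliation of SUT output against pre-calculated expected values, described in the steps above, can be illustrated with a minimal sketch. It is not Exactpro's framework: the `Mismatch` type, the `reconcile` function and the sample field names (`status`, `qty`) are hypothetical, and both datasets are assumed to be keyed by a test id.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mismatch:
    """One difference between an expected and an observed value (hypothetical type)."""
    test_id: str
    field: str
    expected: object
    actual: object


def reconcile(expected: dict, actual: dict) -> list:
    """Compare pre-calculated expected values with values observed from the SUT.

    Both arguments map a test id to a dict of field name -> value.
    """
    mismatches = []
    for test_id, expected_fields in expected.items():
        actual_fields = actual.get(test_id)
        if actual_fields is None:
            # The SUT produced no output at all for this test.
            mismatches.append(Mismatch(test_id, "<missing output>", expected_fields, None))
            continue
        for field, expected_value in expected_fields.items():
            actual_value = actual_fields.get(field)
            if actual_value != expected_value:
                mismatches.append(Mismatch(test_id, field, expected_value, actual_value))
    return mismatches


# Illustrative data only; real datasets would come from the model and the traffic files.
expected_outcomes = {
    "T1": {"status": "FILLED", "qty": 100},
    "T2": {"status": "REJECTED", "qty": 0},
}
output_dataset = {
    "T1": {"status": "FILLED", "qty": 100},
    "T2": {"status": "FILLED", "qty": 50},
}

for m in reconcile(expected_outcomes, output_dataset):
    print(f"{m.test_id}: {m.field} expected {m.expected!r}, got {m.actual!r}")
```

Each mismatch is a candidate for annotation: it may point to a defect in the SUT or to an inaccuracy in the model that produced the expected values.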

The final test library is a subset of select scenarios, fine-tuned until the maximum possible level of test coverage is achieved with the minimal reasonable number of checks to execute. Carefully optimising a subset of test scripts results in a significantly more performant and less resource-heavy version of the initially generated test library.
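One common way to approximate such a reduced library is a greedy set-cover heuristic. This is a swapped-in textbook technique used for illustration, not necessarily the method applied in practice. The function name, script ids and condition ids below are hypothetical.

```python
def minimise_test_library(coverage: dict, targets: set) -> list:
    """Greedy set cover: repeatedly pick the script that covers the most
    still-uncovered targets. The result is small but not guaranteed minimal."""
    uncovered = set(targets)
    selected = []
    while uncovered and coverage:
        # sorted() makes tie-breaking deterministic (alphabetical by script id).
        best = max(sorted(coverage), key=lambda s: len(coverage[s] & uncovered))
        gained = coverage[best] & uncovered
        if not gained:
            break  # the remaining targets are not covered by any script
        selected.append(best)
        uncovered -= gained
    return selected


# Illustrative mapping: script id -> set of target conditions it exercises.
coverage = {
    "script_a": {"c1", "c2"},
    "script_b": {"c2", "c3", "c4"},
    "script_c": {"c1"},
    "script_d": {"c4", "c5"},
}
targets = {"c1", "c2", "c3", "c4", "c5"}

print(minimise_test_library(coverage, targets))
# prints ['script_b', 'script_a', 'script_d']
```

Here three of the four scripts are enough to cover all five target conditions; `script_c` adds nothing that `script_a` does not already cover.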

Unified storage of all test data in a single database enables better access to test evidence and maximum flexibility for applying smart analytics, including for reporting purposes. The final test coverage report helps demonstrate traceability between the requirements and the test outcomes – an important auditability and compliance criterion.
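As a rough illustration of requirement-to-outcome traceability, the sketch below groups the tests linked to each requirement by their recorded outcome. The function name, requirement ids, test ids and outcome labels are assumptions made for the example, and in-memory dictionaries stand in for the test database.

```python
from collections import defaultdict


def coverage_report(requirement_tests: dict, test_results: dict) -> dict:
    """Return, per requirement, the linked test ids grouped by outcome."""
    report = {}
    for requirement, tests in requirement_tests.items():
        by_outcome = defaultdict(list)
        for test_id in tests:
            # A linked test with no recorded result is reported as "not run".
            by_outcome[test_results.get(test_id, "not run")].append(test_id)
        report[requirement] = dict(by_outcome)
    return report


# Illustrative data only.
requirement_tests = {
    "REQ-1": ["T1", "T2"],
    "REQ-2": ["T3"],
    "REQ-3": [],  # a requirement with no linked tests is a coverage gap
}
test_results = {"T1": "passed", "T2": "failed"}

for requirement, outcomes in coverage_report(requirement_tests, test_results).items():
    print(requirement, outcomes or "NO TESTS LINKED")
```

A report of this shape makes both failed checks and untested requirements visible in one place.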

Automation is the technology by which a process or procedure is performed with minimal human assistance. Software testing is a process of empirical technical exploration of the system under test that consists of selecting a subset of possible actions, executing them, and observing and interpreting the outcome. With modern CI/CD pipelines, it is easy to minimise human involvement in the execution part. Automating higher-level cognitive functions such as test idea generation or results evaluation is, of course, a much less straightforward task.

Test basis is the body of knowledge about the system used for test analysis and design. The AI testing process includes the following steps:

- Converting parts of the test basis into machine-readable form and annotating the obtained data for Supervised Learning.

- Improving and optimising the input dataset with Reinforcement Learning, based on the extracted features.

- Using synthetic test data generators to produce substantial datasets.

- Running arbitrarily many computer-assisted checks and applying scripted rules and Unsupervised Learning to extract features from the output dataset.
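To make the idea of a synthetic test data generator concrete, here is a minimal sketch using only the Python standard library. The order fields (`order_id`, `side`, `quantity`, `price`) and their value ranges are assumptions chosen for illustration. A real generator would derive them from the test basis.

```python
import random


def generate_orders(count: int, seed: int = 0) -> list:
    """Produce `count` synthetic order records, reproducible for a given seed."""
    rng = random.Random(seed)  # local generator: the same seed yields the same dataset
    orders = []
    for i in range(count):
        orders.append({
            "order_id": f"ORD-{i:06d}",
            "side": rng.choice(["BUY", "SELL"]),
            "quantity": rng.randint(1, 1000),              # inclusive bounds
            "price": round(rng.uniform(1.0, 100.0), 2),
        })
    return orders


dataset = generate_orders(10_000, seed=42)
print(len(dataset), dataset[0]["order_id"])
```

Seeding the generator keeps large datasets reproducible, so a failing check can be rerun against exactly the same input.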

We are all good – we have teams and procedures in place to take care of all our testing needs.

Applying data analytics to various levels of an existing process enables us to boost its effectiveness. A continuous improvement cycle is created as a result of AI integration.

The better a process is organised and performs, the more it can be improved with AI testing. Process deficiencies can be detected and rectified in an ongoing improvement loop.

Our system is too complex and specific; approaches developed for other systems or domains will not work here.

Complex transaction processing systems share similarities: they have to take into account a wide variety of configuration data and process large volumes of transactional data. High resilience (availability) requirements and a complex operational life cycle are also commonplace among them. The data annotation process may vary for different types of such systems, but the rest of the data processing can be the same.

Breakthroughs tend to happen at the confluence of different disciplines. Any individual team can benefit from the latest technology achievements and use expertise from related domains to its advantage.

Your proposal is too complex for our people.

Experiments and training in machine learning, data processing and software testing can amplify your team’s expertise and add value to aspects of your organisation’s work that go beyond software testing.

We can show your teams the best practices of using the new technologies and work with you to build your skills.

Our business is too small, our quality requirements are not that rigorous, and we do not have the budget.

Software testing is context-dependent. Let us assess your system setup and development process. Integrating AI into the testing pipeline brings down costs and introduces efficiencies. AI-powered test data generation also helps mitigate a lack of data.

Businesses of any size can and should reap the benefits of new technologies. Early-stage initiatives can also use our Venture Program.

We are too early in our journey; our development has just started.

Early-stage system modelling and data analysis are processes that positively affect architecture design and development of your application. Alignment between development and testing is key in delivering better solutions faster.

Starting testing as early as possible helps secure a solid foundation. It also ensures a smooth development process and product quality by design.

If you want to know more about leading industry expertise in software testing or are looking to leverage AI to boost your software development processes, set up a virtual meeting with our executives and technology experts.