Functional Testing

Functional testing verifies that specific functionality of a component or system works as expected. It describes what the system does, as opposed to non-functional testing, which examines how the system performs its required functions.

In functional testing, test plans should comply with the system requirements or business specifications. However, experience shows that the requirements for some systems may be unclear: they can be ambiguous or contradictory, or leave some functionality or parts of the system undescribed. Therefore, it is always advisable to have a detailed test plan, even for small projects. Otherwise, many issues may be missed or not reported properly.

We start the planning with:

- defining the existing constraints (e.g. time, money, technologies, human resources)

- determining the exit criteria for our activities (when we can say that our work is done)

- confirming the plans with all the parties involved in the project (e.g. product managers, developers, clients)

We move on to creating the first test scenarios

Test scenarios help us structure the planned work, evaluate estimates and report on our activities.

For a complex system, a high-level plan should be created first to allocate testing areas and responsible persons or teams.

Then we go deeper step by step, working out specific usage scenarios within each area. The result is a set of test cases or checklists grouped into test suites.

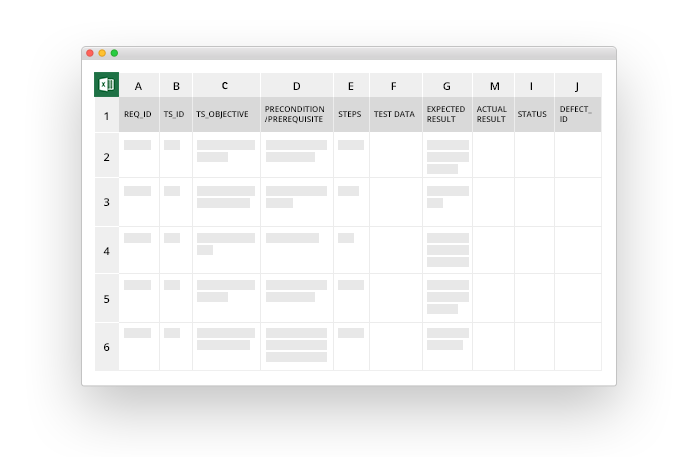

A test case is a detailed description of a specific check. It usually contains a test summary, test steps, and the expected and actual results.
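To make the structure concrete, here is a minimal sketch of a test case as a Python data structure. The class name `TestCase`, its field names and the `status()` helper are illustrative assumptions, not a format used by any particular tool, and the pass/fail rule (an exact match of actual against expected) is a deliberate simplification.

```python
from typing import List, Optional
from dataclasses import dataclass


@dataclass
class TestCase:
    """One detailed check: summary, steps, expected result and actual result."""
    summary: str
    steps: List[str]
    expected: str
    actual: Optional[str] = None  # filled in when the test case is executed

    def status(self) -> str:
        # No actual result recorded yet means the case has not been run.
        if self.actual is None:
            return "not run"
        # Simplification: the case passes only if the results match exactly.
        return "passed" if self.actual == self.expected else "failed"


login = TestCase(
    summary="Valid user can log in",
    steps=["Open the login page", "Enter valid credentials", "Click 'Log in'"],
    expected="User dashboard is displayed",
)
print(login.status())  # not run
login.actual = "User dashboard is displayed"
print(login.status())  # passed
```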

Creating detailed test cases takes time, but it pays off in long-term projects with predictable staff turnover, where newcomers can rely on the test cases for clear and relevant information about the project. However, on a short project with a team of just a few experienced testers, checklists are preferred to save time.

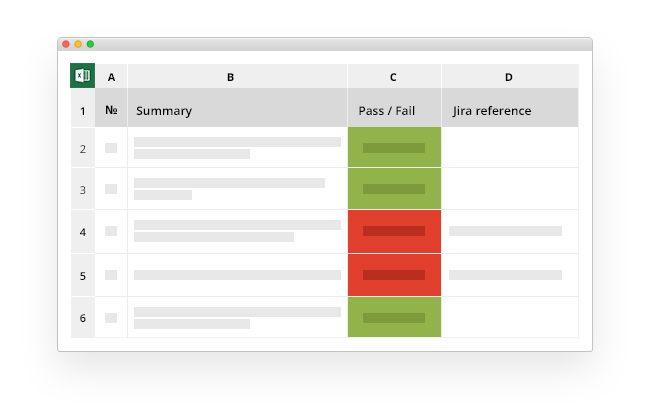

A checklist is simpler than a test case and takes less time to prepare, but it still lists the planned checks and can be used as a template for reporting the testing activities.
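For comparison, a checklist can be sketched as nothing more than the planned checks plus a status for each one, which is also why it doubles as a reporting template. The function names `make_checklist` and `report` and the status strings are hypothetical, chosen only for this illustration.

```python
from typing import Dict, List


def make_checklist(checks: List[str]) -> Dict[str, str]:
    # Every planned check starts as "not run".
    return {check: "not run" for check in checks}


def report(checklist: Dict[str, str]) -> str:
    # The same structure becomes a simple report once statuses are filled in.
    return "\n".join(f"[{status}] {check}" for check, status in checklist.items())


checklist = make_checklist([
    "Login with valid credentials",
    "Login with invalid password is rejected",
    "Logout returns to the login page",
])
checklist["Login with valid credentials"] = "passed"
print(report(checklist))
# [passed] Login with valid credentials
# [not run] Login with invalid password is rejected
# [not run] Logout returns to the login page
```

Unlike the test case, there are no steps or expected results: the tester's experience fills that gap, which is what saves preparation time.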

With the scenarios ready, it is time for their execution and verification of results

Note that basic checks of the most important functionality should be run before more complicated cases, because this allows problems in key functionality to be found and addressed earlier. This is especially relevant for smoke and regression testing.
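One simple way to enforce this ordering, assuming each check has been given a numeric priority (1 for basic checks of key functionality, higher numbers for more complex cases), is to sort the suite by priority before execution. The `Check` class, the priority scale and the example check names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Check:
    name: str
    priority: int  # 1 = basic check of key functionality; higher = more complex


def execution_order(checks: List[Check]) -> List[str]:
    # sorted() is stable, so checks with equal priority keep their original order.
    return [c.name for c in sorted(checks, key=lambda c: c.priority)]


suite = [
    Check("Partial fill of a large order", 3),
    Check("Application starts and user can log in", 1),
    Check("Order with all optional fields", 2),
    Check("Simple order is accepted", 1),
]
print(execution_order(suite))
# ['Application starts and user can log in', 'Simple order is accepted',
#  'Order with all optional fields', 'Partial fill of a large order']
```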

In the most popular software development models, work is split into several iterations. Each iteration usually contains:

- new functionalities

- existing functionalities

- fixes for the known issues

So each time a new software version arrives, we should:

- create new test cases for the newly delivered functionalities

- run the previous test cases for the existing functionalities

- verify that the previously reported problems were fixed
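The three activities above can be sketched as a small planning step that runs once per delivered version. This is an illustrative model, not a prescribed tool: the names `VersionPlan` and `plan_version` and the test case and issue identifiers are hypothetical. It also shows why the workload grows with every iteration, since the new cases join the suite that must be re-run next time.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VersionPlan:
    new_cases: List[str]        # created for newly delivered functionality
    rerun_cases: List[str]      # previous cases covering existing functionality
    fixes_to_verify: List[str]  # previously reported issues marked as fixed


def plan_version(suite: List[str], new_cases: List[str],
                 fixed_issues: List[str]) -> VersionPlan:
    # Copies are taken so the plan is not affected when the suite grows later.
    return VersionPlan(list(new_cases), list(suite), list(fixed_issues))


suite: List[str] = []
deliveries = [
    (["TC-1 login", "TC-2 logout"], []),
    (["TC-3 password reset"], ["BUG-7"]),
]
for new_cases, fixed in deliveries:
    plan = plan_version(suite, new_cases, fixed)
    print(len(plan.new_cases), len(plan.rerun_cases), plan.fixes_to_verify)
    # After this version, the new cases become part of the regression suite.
    suite += plan.new_cases
# 2 0 []
# 1 2 ['BUG-7']
```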

Smoke Test. Retesting. Regression Testing.

When a new version arrives, a smoke test is run. A smoke test is a small set of basic checks that takes only 1-2 hours to perform. It quickly shows whether important system functions are broken, so that a broken build can be returned to the developers (DEV) before more time is spent on testing it.

If the smoke test is successful, the next step is retesting. Retesting means re-running the checks for the issues reported as fixed, to make sure the fixes were done properly. New issues, or issues that were previously fixed, may surface during retesting.

If there are no critical problems (also known as blockers) that prevent the system from performing its basic functions, testing moves on to regression testing. Regression testing is the execution of a wide range of tests aimed at making sure that previously working functionality and features are still operational. If regression testing is successful, the current version of the system is considered stable. In either case, a test report with a brief description of all the checks performed and the issues encountered should be sent to all stakeholders.
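The sequence described above (smoke test, then retesting, then regression testing, with a report in every case) can be sketched as a pipeline with two gates. This is a minimal illustration under stated assumptions: checks are modelled as functions returning `True` on success, each check carries a hypothetical `blocker` flag, and the verdict strings and the names `Check`, `failures` and `evaluate_version` are invented for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Check:
    name: str
    run: Callable[[], bool]  # returns True if the check passes
    blocker: bool = False    # failure would stop the system's basic functions


def failures(checks: List[Check]) -> List[Check]:
    return [c for c in checks if not c.run()]


def evaluate_version(smoke: List[Check], retest: List[Check],
                     regression: List[Check]) -> Dict[str, object]:
    """Run the stages in order; a report is returned whatever the outcome."""
    report: Dict[str, object] = {"smoke": [], "retest": [], "regression": [], "verdict": ""}

    failed = failures(smoke)
    report["smoke"] = [c.name for c in failed]
    if failed:
        # Basic functions are broken: the build goes back to DEV.
        report["verdict"] = "returned to DEV"
        return report

    failed = failures(retest)
    report["retest"] = [c.name for c in failed]
    if any(c.blocker for c in failed):
        # Blockers remain, so regression testing is not started.
        report["verdict"] = "blocked"
        return report

    failed = failures(regression)
    report["regression"] = [c.name for c in failed]
    report["verdict"] = "stable" if not failed else "not stable"
    return report


def ok() -> bool:
    return True


def broken() -> bool:
    return False


result = evaluate_version(
    smoke=[Check("Application starts", ok)],
    retest=[Check("BUG-7 is fixed", broken, blocker=True)],
    regression=[Check("Order entry", ok)],
)
print(result)
# {'smoke': [], 'retest': ['BUG-7 is fixed'], 'regression': [], 'verdict': 'blocked'}
```

Returning the report from every branch mirrors the rule that stakeholders receive a test report whether or not the version turns out to be stable.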

Since a new version of the software can arrive every few weeks, and thousands of tests have to be run each time, the question of test automation naturally arises.

EXACTPRO = EXPERIENCE

Exactpro has a variety of tools for testing different interfaces, such as:

- Windows GUI applications (Jackfish)

- Web GUI applications (Jackfish)

- Specific financial interfaces, based on FIX, MITCH, ITCH, SWIFT, FAST, Native protocols (Sailfish, ShSha, Minirobots, ClearTH)

The test scripts are written in a simple keyword-driven language and are easy to maintain and support. More information about our test tools and their features is available here.
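To illustrate the general keyword-driven idea (not the actual script syntax of Jackfish, Sailfish or any other Exactpro tool), here is a minimal sketch of an interpreter: each script line names a keyword followed by its arguments, and each keyword maps to an implementation function. The `|` separator, the keywords `OpenApp`, `Enter` and `Verify`, and the in-memory `state` standing in for a real application are all hypothetical.

```python
from typing import Callable, Dict

# Hypothetical stand-in for the application under test.
state: Dict[str, str] = {}


def open_app(name: str) -> None:
    state["app"] = name


def enter(field: str, value: str) -> None:
    state[field] = value


def verify(field: str, expected: str) -> None:
    actual = state.get(field)
    if actual != expected:
        raise AssertionError(f"{field}: expected {expected!r}, got {actual!r}")


# Keyword library: script keywords mapped to their implementations.
KEYWORDS: Dict[str, Callable[..., None]] = {
    "OpenApp": open_app,
    "Enter": enter,
    "Verify": verify,
}


def run_script(script: str) -> None:
    """Execute one keyword per line: keyword | arg1 | arg2 ..."""
    for line_no, line in enumerate(script.strip().splitlines(), start=1):
        keyword, *args = [part.strip() for part in line.split("|")]
        if keyword not in KEYWORDS:
            raise ValueError(f"line {line_no}: unknown keyword {keyword!r}")
        KEYWORDS[keyword](*args)


run_script("""
OpenApp | TradingClient
Enter   | Quantity | 100
Verify  | Quantity | 100
""")
print("script passed")
```

The design separates the two concerns: testers edit only the short scripts, while the keyword implementations are maintained in one place, which is what keeps such scripts easy to support.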

It is also good practice to combine functional testing with other background activities. Some kinds of issues can only be found when several transactions are performed simultaneously, or when automated scripts (Minirobots) are running in parallel. Some issues can only be revealed through the combined effort of several teams. Needless to say, these processes require QA teams with a high degree of experience and a good understanding of the domain and the business logic.

We will inevitably encounter unexpected situations, such as ambiguities and missing specifications, but we have an established practice of using all available sources of information: communicating with developers, managers and clients, and checking the requirements of similar systems or any related specifications that are publicly available.

Exactpro has years of experience in QA and an array of successful projects under its belt.