
Financial markets have long relied on technology to be fast, resilient, and trustworthy – for compliance purposes, but also due to a shared understanding of the systemic impact of financial instability in interconnected infrastructure. Matching engines, market data, and clearing and settlement systems undergo rigorous testing because livelihoods, economies, and market integrity depend on them. With AI-enabled systems becoming embedded in enterprise-level tasks – across sectors, but in finance in particular – the safety guardrails should be as strong as ever, if not stronger.

Compared to traditional financial technology, which is deterministic by design and becomes non-deterministic only at the system-outcome level due to a multitude of dynamic inputs and interactions, AI systems – especially those with a generative AI component – are stochastic by design. This not only complicates quality assessment but also raises the stakes of malfunction as AI increasingly informs analytics, reporting, compliance, and decision-making. Nonetheless, the exchange community is exceptionally well-positioned to lead the way in the responsible development and deployment of reliable enterprise AI across a wide variety of financial use cases. The quality assessment tools and methods used in financial market infrastructures (FMIs) have long dealt with non-determinism and the complexity of distributed technology, all while helping deliver accuracy, resilience, and fairness globally.
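The contrast between deterministic and stochastic components can be made concrete with a small sketch. It assumes a hypothetical order-routing function, which can be checked with an exact-match oracle, and a hypothetical random stand-in for a generative model, whose answers can only be assessed statistically: the same property is checked over many seeded samples and the pass rate is compared against a threshold. The venue names, answer templates, 10% error weight, and 0.8 threshold are illustrative assumptions, not figures from any real system.

```python
import random
from typing import Callable


def route_buy_order(asks: dict[str, float]) -> str:
    """Deterministic component: the same quotes always yield the same venue (lowest ask)."""
    # Iterating over sorted venue names makes tie-breaking reproducible.
    return min(sorted(asks), key=lambda venue: asks[venue])


def mock_generator(question: str, rng: random.Random) -> str:
    """Hypothetical stand-in for a generative model (this mock ignores the question)."""
    answers = [
        "Equity trades settle on T+1.",
        "Settlement takes place on T+1.",
        "Trades settle on T+2.",  # deliberately wrong, sampled ~10% of the time
    ]
    return rng.choices(answers, weights=[0.45, 0.45, 0.10])[0]


def pass_rate(
    generate: Callable[[str, random.Random], str],
    question: str,
    check: Callable[[str], bool],
    runs: int = 200,
    seed: int = 42,
) -> float:
    """Sample the generator repeatedly and return the share of outputs satisfying `check`."""
    rng = random.Random(seed)  # a fixed seed keeps the assessment itself reproducible
    return sum(check(generate(question, rng)) for _ in range(runs)) / runs


asks = {"VENUE_A": 100.02, "VENUE_B": 100.01}
# Exact-match oracle: valid because the component is deterministic.
assert route_buy_order(asks) == route_buy_order(asks) == "VENUE_B"

# Statistical oracle: individual answers differ, so a threshold replaces exact matching.
rate = pass_rate(mock_generator, "When do equity trades settle?", lambda a: "T+1" in a)
assert rate >= 0.8, f"pass rate {rate:.2f} below threshold"
```

Fixing the seed does not make the generator deterministic in production; it only makes this particular assessment repeatable, which is what allows its result to be reviewed and reproduced.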

Quality assurance in FMIs is grounded not only in expert domain knowledge but also in specialised frameworks and regulations (e.g., the Zero Outage Industry Standard [1] and the Digital Operational Resilience Act (DORA) [2]). These comprehensive documents are both the result of large-scale collaborative effort and, in their messaging, vocal advocates of continuous cross-sector work to reinforce operational and cyber resilience across diverse sectors and fields of knowledge.

As a technology services provider directly involved in helping exchanges, post-trade system operators, and banks enhance the operational resilience of their systems, we have recently contributed to the AI quality discourse. In our ‘Test Strategy and Framework for RAGs’ case study [3], we transpose the software testing approach historically tailored to smart order router (SOR) systems (an inherent part of algorithmic trading) to Retrieval-Augmented Generation (RAG) systems. The former represents a more traditional financial platform; the latter, AI-enabled infrastructure with a generative AI component. The similarities between SORs and RAGs that substantiate the cross-applicability of the approach include:

- reliance on context, external sources, and outcome optimisation based on a combination of external and internal factors;

- retrieval logic applied to monitor relevant parameters;

- triage and ranking logic applied to the source venues/documents;

- dynamic nature, non-determinism, real-time decision-making;

- separation of the interconnected information retrieval and action modules;

- reliance on historical data to improve future outcomes.
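The shared triage and ranking logic can be sketched as one generic top-k function applied to two kinds of candidates: execution venues for an order and documents for a query. The scoring rules below (a price term with a small liquidity bonus, and the share of query terms found in a document) are hypothetical simplifications chosen for illustration; production SORs and RAG retrievers use far richer criteria.

```python
import re
from dataclasses import dataclass
from typing import Callable, Iterable, TypeVar

T = TypeVar("T")


def rank_top_k(candidates: Iterable[T], score: Callable[[T], float], k: int) -> list[T]:
    """Generic triage: keep the k highest-scoring candidates, whatever their type."""
    return sorted(candidates, key=score, reverse=True)[:k]


@dataclass
class Venue:
    name: str
    ask: float          # best ask price
    displayed_qty: int  # visible liquidity at that price


@dataclass
class Document:
    doc_id: str
    text: str


def venue_score(v: Venue) -> float:
    # Hypothetical buy-side score: a lower ask is better, with a small bonus for depth.
    return -v.ask + 0.00001 * v.displayed_qty


def term_overlap_score(query: str) -> Callable[[Document], float]:
    # Hypothetical relevance: fraction of query terms that occur in the document.
    terms = set(re.findall(r"[a-z0-9+]+", query.lower()))

    def score(d: Document) -> float:
        words = set(re.findall(r"[a-z0-9+]+", d.text.lower()))
        return len(terms & words) / len(terms) if terms else 0.0

    return score


venues = [Venue("A", 100.02, 500), Venue("B", 100.01, 100), Venue("C", 100.05, 2000)]
docs = [
    Document("d1", "T+1 settlement cycle for equities"),
    Document("d2", "Holiday calendar for the exchange"),
    Document("d3", "Settlement fails and penalties"),
]
best_venues = rank_top_k(venues, venue_score, k=2)  # venues B, then A
best_docs = rank_top_k(docs, term_overlap_score("settlement cycle"), k=2)  # d1, then d3
```

Keeping the ranking function generic and injecting the scoring rule is what lets the same test ideas (ordering, tie-breaking, cut-off at k) be reused across both system types.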

‘Retrieval-augmented generation’ can be defined as a technique for enhancing the accuracy and reliability of generative AI models with information from specific and relevant data sources. This year’s WEF ‘Artificial Intelligence in Financial Services’ white paper [4] places RAGs among the key technology areas going forward (alongside small language models, AI agents, and quantum computing). Owing to their enhanced ‘accuracy and reliability’ (compared to standalone LLMs) and their reliance on curated ‘in-house data repositories’, RAGs are likely candidates for widespread enterprise adoption, which makes developing methods for testing them a strategic priority.
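A minimal sketch of the retrieve, augment, and generate flow described above, assuming a tiny hypothetical in-house repository and a naive keyword-overlap retriever. The model call is left as an injected `generate` function so the sketch runs without any real LLM; every document id and helper name here is illustrative.

```python
import re
from typing import Callable

# Hypothetical curated in-house repository: document id -> text.
REPOSITORY = {
    "rulebook-4.2": "Equity trades on the exchange settle on T+1.",
    "fees-2025": "Market data fees are billed monthly.",
    "holidays": "The exchange is closed on public holidays.",
}


def tokens(text: str) -> set[str]:
    """Lower-case word tokens; '+' is kept so that terms like 'T+1' survive."""
    return set(re.findall(r"[a-z0-9+]+", text.lower()))


def retrieve(query: str, repo: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Return up to k (doc_id, text) pairs that share at least one term with the query."""
    q = tokens(query)
    scored = [(len(q & tokens(text)), doc_id, text) for doc_id, text in repo.items()]
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: (-item[0], item[1]))  # most overlap first, ties by id
    return [(doc_id, text) for _, doc_id, text in scored[:k]]


def build_prompt(query: str, passages: list[tuple[str, str]]) -> str:
    """Augment the question with the retrieved passages and ask for cited answers."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the context below and cite document ids in brackets.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


def rag_answer(query: str, generate: Callable[[str], str], repo: dict[str, str] = REPOSITORY) -> str:
    """Retrieve, augment, then delegate generation to the injected (hypothetical) model."""
    return generate(build_prompt(query, retrieve(query, repo)))


# Usage with an "echo" model that returns its prompt, to inspect what an LLM would receive.
prompt = rag_answer("When do equity trades settle?", generate=lambda p: p)
print(prompt)
```

Injecting the model as a plain function also makes the retrieval and augmentation stages testable on their own, independently of whichever LLM is eventually plugged in.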

RAG-driven generative AI systems share the general features of SORs while also exhibiting distinctive operational features. Their outputs – and, thus, the training, testing, and validation methods applied to them – depend more heavily on domain specifics and the context in which they operate. Their cross-domain transferability is also much higher than that of SORs. Nevertheless, both system types can benefit from the same software quality assessment principles and universal recommended strategies practised in the industry (a more detailed comparison of SOR and RAG technology features and of the testing approach is provided in our case study).

Fig 1. Comparison of key architecture components and end-points of SOR and RAG systems

The quality assessment of a RAG-enabled system should include the same test levels as that of smart order routers: testing of input data, system testing (comprising component testing, system-integration testing, and other related sub-processes), and acceptance testing. Different test techniques and perspectives that cut across all levels should be applied, including white-box, black-box, and data-box techniques (detailed descriptions of the techniques are provided in our case study). The principles of model-based testing (creating a system’s ‘digital twin’) should also serve as the core methodology, drawing on industry-proven methods.
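Two of the levels listed above can be illustrated with simple automated checks, assuming a RAG system whose answers cite source document ids in square brackets (an assumption made for this sketch, not a described requirement). An input-data check screens the knowledge base before it is indexed, and a black-box acceptance check verifies, without looking inside the system, that an answer cites at least one source and only sources that were actually retrieved. The function names and rules are hypothetical.

```python
import re


def check_input_data(repo: dict[str, str]) -> list[str]:
    """Input-data level: report empty documents and duplicates (after whitespace/case normalisation)."""
    issues: list[str] = []
    first_seen: dict[str, str] = {}
    for doc_id, text in sorted(repo.items()):
        normalised = " ".join(text.split()).lower()
        if not normalised:
            issues.append(f"{doc_id}: empty document")
        elif normalised in first_seen:
            issues.append(f"{doc_id}: duplicate of {first_seen[normalised]}")
        else:
            first_seen[normalised] = doc_id
    return issues


def cited_ids(answer: str) -> set[str]:
    """Extract document ids cited as [doc-id] in a generated answer."""
    return set(re.findall(r"\[([^\[\]]+)\]", answer))


def is_grounded(answer: str, retrieved_ids: set[str]) -> bool:
    """Black-box acceptance property: at least one citation, and every citation was retrieved."""
    cites = cited_ids(answer)
    return bool(cites) and cites <= retrieved_ids


issues = check_input_data({"a": "Settle on T+1.", "b": "   ", "c": "settle on  T+1."})
# issues == ["b: empty document", "c: duplicate of a"]

assert is_grounded("Trades settle on T+1 [rulebook-4.2].", {"rulebook-4.2"})
assert not is_grounded("Trades settle on T+2 [blog-post].", {"rulebook-4.2"})  # unretrieved source
assert not is_grounded("Trades settle on T+1.", {"rulebook-4.2"})  # no citation at all
```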

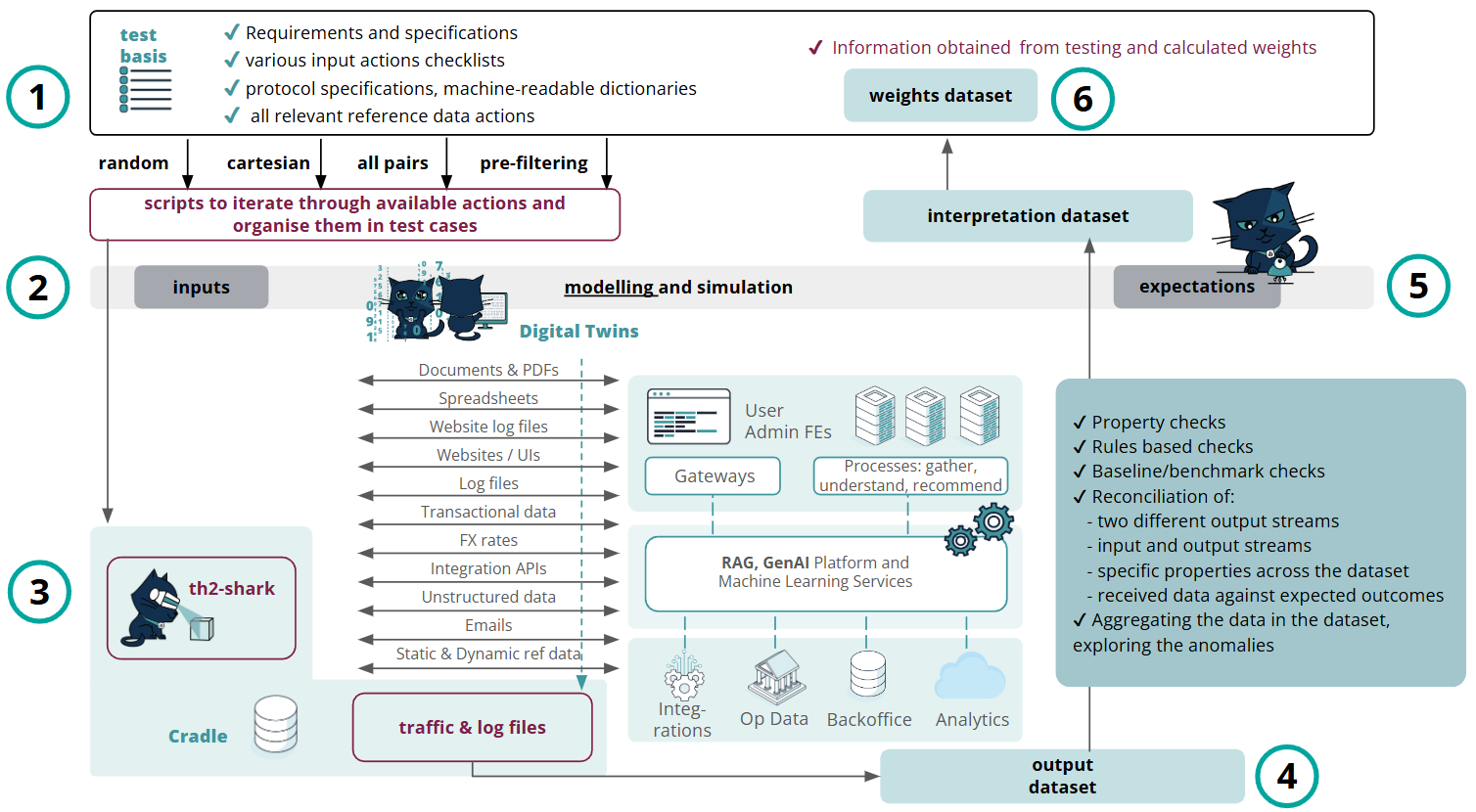

The test approach recommended for RAG systems (as for SORs) should be multi-layered and follow similar principles and steps, differing only in the data types used as input. The generalised, system-agnostic approach that can be successfully applied to RAGs can thus be subdivided into six steps:

- Test-basis analysis – analysing the data available from specifications, system logs, and other formal and informal sources, normalising it, and converting it into a machine-readable form.

- Generation of the input dataset and the expected outcomes dataset – the knowledge derived from the test basis enables the creation of the input dataset that is later used for test execution. It also lays the foundation for modelling a system’s ‘digital twin’ – a machine-readable description of its behaviour expressed as input sequences, actions, conditions, outputs, and the flow of data from input to output.

- Test execution, obtaining an output dataset – all possible test script modifications are sent for execution within an AI-enabled framework for automation in testing. The framework converts the test scripts into the required message format and, upon test execution, collects traffic files in raw format for analysis, unified storage, and compilation of the output dataset.

- Enriching the output dataset with annotations – the ‘output’ dataset is annotated for interpretation purposes and subsequent application of AI-enabled analytics.

- Model refinement – this step plays a major role in the development of the ‘digital twin’. Property and reconciliation checks are performed iteratively between annotation (Step 4) and model refinement (Step 5): the model logic is gradually trained and improved to interpret the ‘output’ dataset more accurately and to produce updated versions of the ‘expected outcomes’ and ‘interpretation’ datasets.

- Test reinforcement and learning, developing a weights dataset – discriminative techniques are applied to the test library being developed to identify the best-performing test scripts and to reduce the number of tests required for execution to the minimum set that still covers all target conditions and data points extracted from the model. Storing all test data in a single database provides better access to test evidence and maximum flexibility for applying smart analytics, including for reporting purposes.
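The first four steps, which feed the iterative refinement of the fifth, can be sketched end to end on a toy scale, assuming a RAG system under test that returns the ids of the documents it retrieved. Here the ‘digital twin’ is deliberately reduced to an expected-outcomes table derived from the test basis; a real model would also capture conditions, actions, and data flow. The spec format, the stub system, and all names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable

# Step 1, test-basis analysis: hypothetical informal spec lines to be normalised.
RAW_SPEC = """
Q: When do equity trades settle?   | expect: rulebook-4.2
Q:  How are market data fees billed? | expect: fees-2025
"""


@dataclass(frozen=True)
class SpecCase:
    query: str
    expected_ids: frozenset[str]  # Step 2: expected outcomes (the simplified 'digital twin')


@dataclass
class Result:
    case: SpecCase
    actual_ids: frozenset[str]
    annotations: list[str] = field(default_factory=list)


def parse_test_basis(raw: str) -> list[SpecCase]:
    """Steps 1-2: normalise spec lines into machine-readable inputs and expected outcomes."""
    cases = []
    for line in raw.strip().splitlines():
        question, expect = (part.strip() for part in line.split("|"))
        query = " ".join(question.removeprefix("Q:").split())
        ids = frozenset(i.strip() for i in expect.removeprefix("expect:").split(","))
        cases.append(SpecCase(query, ids))
    return cases


def execute(cases: list[SpecCase], system: Callable[[str], list[str]]) -> list[Result]:
    """Step 3: run every case against the system under test and keep the raw outputs."""
    return [Result(c, frozenset(system(c.query))) for c in cases]


def annotate(results: list[Result]) -> list[Result]:
    """Step 4: reconcile actual against expected outcomes and label each result."""
    for r in results:
        missing = r.case.expected_ids - r.actual_ids
        unexpected = r.actual_ids - r.case.expected_ids
        if not missing and not unexpected:
            r.annotations.append("match")
        if missing:
            r.annotations.append("missing: " + ", ".join(sorted(missing)))
        if unexpected:
            r.annotations.append("unexpected: " + ", ".join(sorted(unexpected)))
    return results


def stub_system(query: str) -> list[str]:
    # Hypothetical system under test with a defect: it always adds the holiday calendar.
    ids = ["holidays"]
    if "settle" in query.lower():
        ids.append("rulebook-4.2")
    return ids


results = annotate(execute(parse_test_basis(RAW_SPEC), stub_system))
for r in results:
    print(r.case.query, "->", r.annotations)
```

Run as is, the first case is flagged with an unexpected document and the second with both a missing and an unexpected one. In a model-refinement pass, each such annotation would be investigated to decide whether the system or the expected outcomes need correcting.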

Fig 2. Exactpro’s approach to RAG systems testing (based on the recommended practices for SORs)
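The goal of the final step in the approach above, a reduced test set that still covers every target condition, can be illustrated with greedy set cover, a standard approximation technique. This is a swapped-in illustration rather than the discriminative techniques the approach itself applies, and greedy selection is not guaranteed to find the smallest possible set. The test names and condition labels are hypothetical.

```python
def minimise_test_library(coverage: dict[str, set[str]]) -> list[str]:
    """Greedy set cover: repeatedly pick the test that covers the most still-uncovered conditions."""
    uncovered = set().union(*coverage.values())
    selected: list[str] = []
    while uncovered:
        # Iterating over sorted names breaks ties alphabetically, so the selection is reproducible.
        best = max(sorted(coverage), key=lambda test: len(coverage[test] & uncovered))
        gained = coverage[best] & uncovered
        if not gained:  # defensive guard; cannot trigger while uncovered is a subset of the union
            break
        selected.append(best)
        uncovered -= gained
    return selected


# Hypothetical mapping from test scripts to the target conditions each one exercises.
coverage = {
    "test_settle_basic": {"settlement"},
    "test_settle_cited": {"settlement", "citation"},
    "test_fees": {"fees", "citation"},
    "test_unknown_topic": {"no-answer"},
}
kept = minimise_test_library(coverage)
# kept == ["test_fees", "test_settle_basic", "test_unknown_topic"]
```

Three of the four scripts are retained and together still cover all four conditions; in practice, per-test weights (for example, execution cost or historical defect yield) could be added to the selection criterion.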

It is widely recognised that high-quality AI is crucial for enterprise deployment. That is why it is especially important to adopt an approach that is precisely calibrated to the financial domain, sensitive to its caveats, constraints, and regulatory boundaries, and laser-focused on operational resilience in an environment of high systemic risk. The approach should also be flexible enough to fit – and be informed by – multiple use cases, and innovative enough to match new levels of complexity, automation, and depth of system exploration, all without system operators having to ‘reinvent the wheel’ or make major changes to in-house teams or toolsets.

Insights from an independent software testing perspective give system operators a deeper understanding of the system and its technology – and, most importantly, its limitations. Such insights can support better planning and strategic prioritisation at the early stages of a new project, during ongoing projects and technology migrations, and ahead of the launch of brand-new systems. Compared with more traditional testing methods, AI-enabled software testing is recognised as providing more extensive test coverage across data and workflows, more streamlined interpretation of test results, and a more efficient, resource-optimised test library.

Engaging AI-enabled testing as a managed service or a capability-building programme on either traditional or emerging financial technology implementations provides access to cutting-edge know-how – not as an experiment, but as an industry-tested service proven to deliver tangible improvements in capacity, reliability, test coverage, and time-to-market. In the context of AI-enabled systems under test, the methodology helps embed transparency, reproducibility, and safety in AI-driven decisions, fostering greater confidence in their real-world applications.

Learn more about Exactpro’s AI Testing approach and our AI Testing Excellence offering for your organisation by reaching out to us via info@exactpro.com.

Contact the authors for a more detailed discussion at:

Alyona Bulda, alyona.bulda@exactpro.com

Daria Degtiarenko, daria.degtyarenko@exactpro.com

- [1] Zero Outage Industry Standard (ZOIS). URL: https://zero-outage.com/

- [2] Digital Operational Resilience Act (DORA), Regulation (EU) 2022/2554 (2022). URL: https://www.eiopa.europa.eu/digital-operational-resilience-act-dora_en

- [3] Exactpro (2025). Test Strategy and Framework for RAGs – Based on Recommended Practices in AI-enabled Testing of Algorithmic Trading / Smart Order Router Systems. URL: https://exactpro.com/case-study/Test-Strategy-and-Framework-for-RAGs

- [4] World Economic Forum (2025). Artificial Intelligence in Financial Services, White paper. URL: https://reports.weforum.org/docs/WEF_Artificial_Intelligence_in_Financi…

Alyona Bulda (Head of Global Exchanges, SVP, Technology) and Daria Degtiarenko (Senior Marketing Communications Manager), Exactpro