Introduction

As regulated entities vital to the financial markets ecosystem, CCPs and exchanges recognize the importance of the quality and resilience of their platforms. Thorough software testing is fundamental to identifying problems that can affect system integrity. Software testing encompasses functional testing, which ensures that the system works according to its specifications and satisfies compliance requirements, and non-functional testing, which assesses performance, latency, capacity, reliability and operability. Test automation shortens time to market and increases verification coverage.

A popular verification approach used across the industry – not without some merit – is a parallel run comparing the current production system with a new release, also known as ‘production data replay’. However, overreliance on this method puts firms at a disadvantage when delivering significant changes into live service.

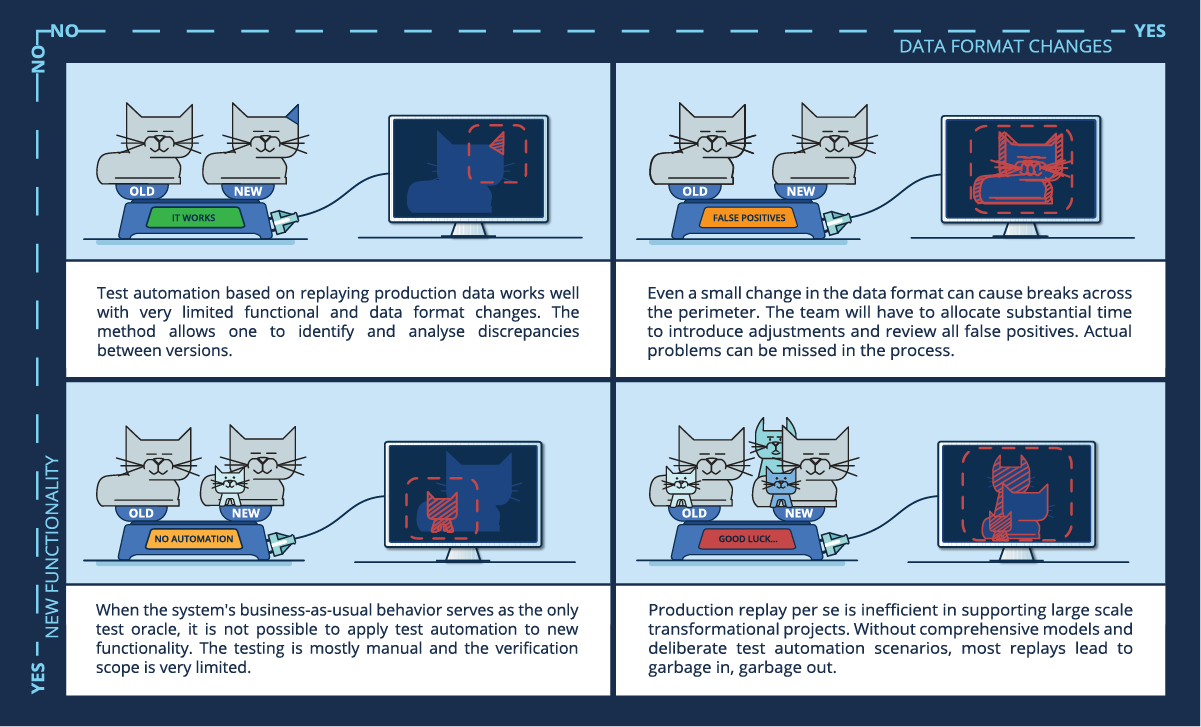

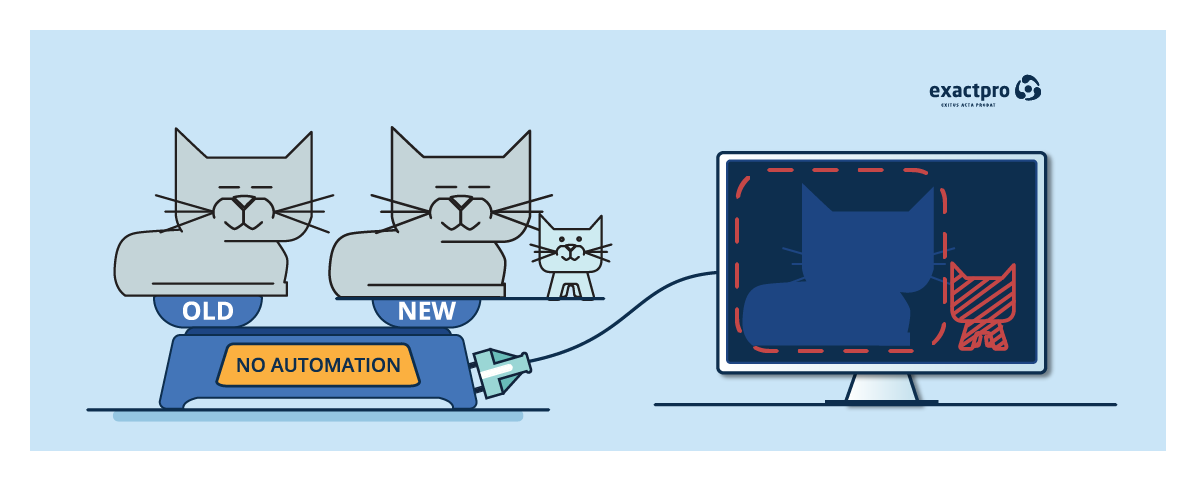

NO/NO – Insignificant Data Format and Functionality Changes

In replay-based test automation, the same set of input data, either taken from production or recorded for testing purposes, is replayed against both the existing and the next version of the system. The output data of the two runs is then compared; the method is meant to reveal the discrepancies between the versions so that they can be analysed. The expectation is that there are either no discrepancies or so few that a QA analyst can review each one and determine whether it is an expected change or a regression bug.
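The replay-and-compare mechanics can be sketched in a few lines of Python. This is a minimal illustration rather than any particular tool: it assumes, hypothetically, that each system version can be called as a function mapping one input message to one output record (a dict), and the names `replay_and_compare`, `v1` and `v2` are invented for the example.

```python
from typing import Callable, Iterable

# Hypothetical abstraction: a system version is a function that maps one
# input message to one output record (field name -> value).
System = Callable[[dict], dict]


def replay_and_compare(inputs: Iterable[dict], baseline: System,
                       candidate: System) -> list[tuple[int, str, object, object]]:
    """Replay the same inputs against both versions and diff their outputs.

    Returns (input index, field, baseline value, candidate value) for every
    output field whose value differs between the two versions.
    """
    discrepancies = []
    for i, msg in enumerate(inputs):
        old_out, new_out = baseline(msg), candidate(msg)
        # Check the union of fields, so added or dropped fields are reported too.
        for field in sorted(old_out.keys() | new_out.keys()):
            old_val, new_val = old_out.get(field), new_out.get(field)
            if old_val != new_val:
                discrepancies.append((i, field, old_val, new_val))
    return discrepancies


# Usage: a hypothetical next version starts rejecting zero-quantity orders.
def v1(msg):
    return {"id": msg["id"], "status": "ACK"}


def v2(msg):
    return {"id": msg["id"], "status": "ACK" if msg["qty"] > 0 else "REJECT"}


orders = [{"id": 1, "qty": 10}, {"id": 2, "qty": 0}]
print(replay_and_compare(orders, v1, v2))  # [(1, 'status', 'ACK', 'REJECT')]
```

Every reported discrepancy still has to be classified by a person as either an intended change or a regression; the comparison itself cannot tell the two apart.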

This approach treats a previous (‘old’) version of the system as the only test oracle. If that version contains outstanding issues, the comparison cannot reveal them, and there is no way to expect a different (better) outcome from it. In fact, correct behaviour in the new version may be flagged as a discrepancy and perceived as a bug. This way, defects can persist in the system for years without being detected. We acknowledge, however, that data replay can work well when functional and data format changes are very limited.
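The oracle problem can be illustrated with a hypothetical fee calculation. The fee rule (0.15% of notional, rounded half-up to two decimal places) and the truncation defect in the old version are invented for this sketch. A replay comparison against the old version flags the corrected behaviour as a discrepancy, while a check against expected values derived from the specification passes.

```python
from decimal import Decimal, ROUND_DOWN, ROUND_HALF_UP

# Hypothetical fee rule, for illustration only: 0.15% of notional,
# rounded half-up to two decimal places.
FEE_RATE = Decimal("0.0015")
CENT = Decimal("0.01")


def fee_old(notional: Decimal) -> Decimal:
    # Long-standing defect in the "old" version: truncates instead of rounding.
    return (notional * FEE_RATE).quantize(CENT, rounding=ROUND_DOWN)


def fee_new(notional: Decimal) -> Decimal:
    # The new version implements the rule correctly.
    return (notional * FEE_RATE).quantize(CENT, rounding=ROUND_HALF_UP)


def replay_verdict(notional: Decimal) -> str:
    """Replay oracle: the old version's output is taken as the truth."""
    return "PASS" if fee_new(notional) == fee_old(notional) else "DISCREPANCY"


def spec_verdict(notional: Decimal, expected: Decimal) -> str:
    """Specification oracle: expected values worked out from the rule itself."""
    return "PASS" if fee_new(notional) == expected else "FAIL"


# Expected fees computed by hand from the rule: 1.5000 -> 1.50, 1.5150 -> 1.52.
EXPECTED = {Decimal("1000"): Decimal("1.50"), Decimal("1010"): Decimal("1.52")}

for n, exp in EXPECTED.items():
    print(n, replay_verdict(n), spec_verdict(n, exp))
# 1000 PASS PASS
# 1010 DISCREPANCY PASS
```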

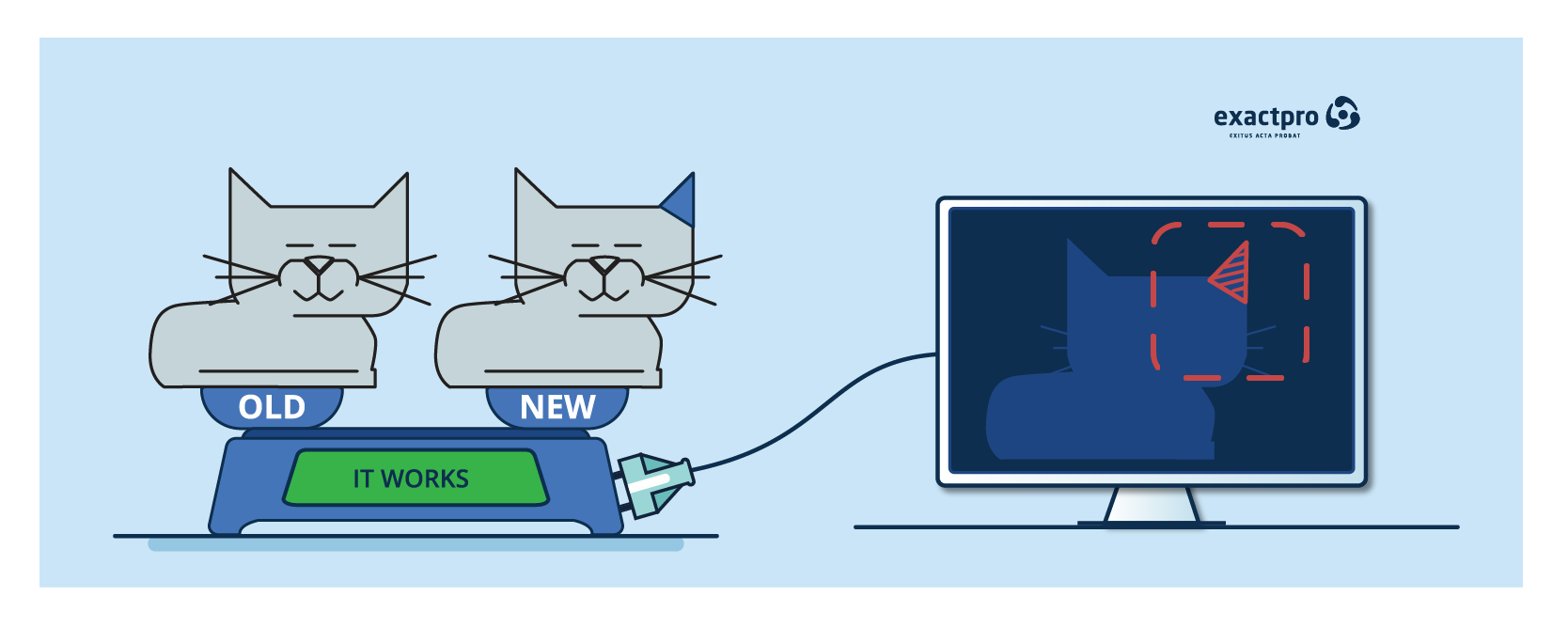

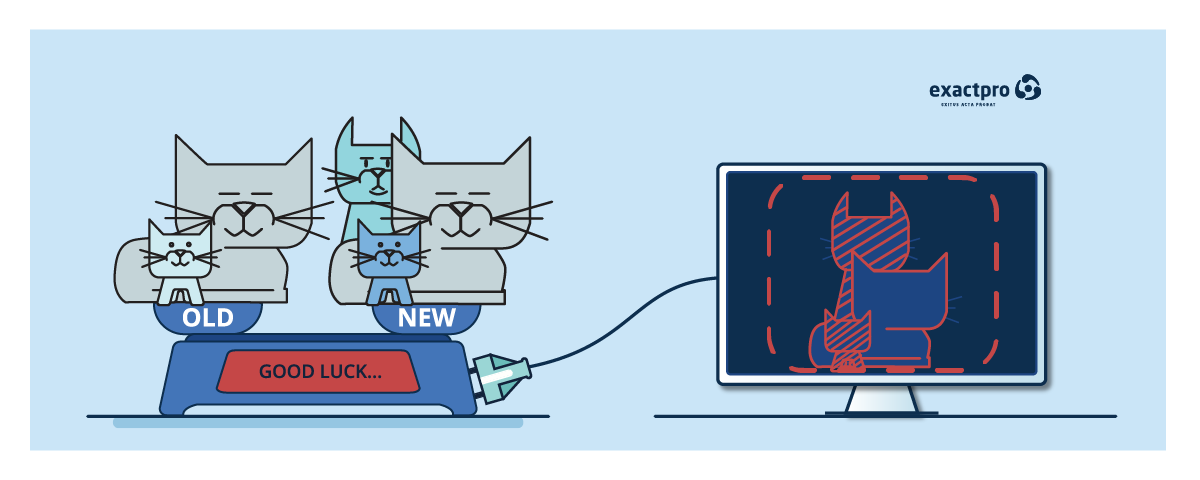

YES/NO – Significant Data Format Changes and Minor Functionality Updates

Let’s say that the outputs of the two versions of the system under test are two images, and every output data element is a pixel in the picture. Using replay and parallel runs is similar to a pixel-by-pixel comparison. At times it works well: the pixels stay where they are and few discrepancies are detected. But what if the picture has not changed, only shifted slightly? Despite the absence of significant changes, a pixel-by-pixel comparison will report major discrepancies between the two pictures.
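The shifted-picture effect can be reproduced with two hypothetical output streams in which the new version emits one extra record at the start and every other record is unchanged. A positional comparison (the ‘pixel-by-pixel’ approach) reports a mismatch at every position, whereas aligning records by a business key, assumed here to be a `trade_id` field, isolates the single real difference.

```python
def positional_diff(old: list[dict], new: list[dict]) -> int:
    """'Pixel-by-pixel': compare the i-th output record of each version."""
    mismatches = sum(1 for a, b in zip(old, new) if a != b)
    # Records beyond the shorter stream have no counterpart at all.
    return mismatches + abs(len(old) - len(new))


def keyed_diff(old: list[dict], new: list[dict], key: str) -> int:
    """Align records by a business key before comparing them."""
    old_by_key = {r[key]: r for r in old}
    new_by_key = {r[key]: r for r in new}
    all_keys = old_by_key.keys() | new_by_key.keys()
    return sum(1 for k in all_keys if old_by_key.get(k) != new_by_key.get(k))


# Hypothetical outputs: the new version prepends one record, nothing else changes.
old = [{"trade_id": i, "px": 100 + i} for i in range(1, 6)]
new = [{"trade_id": 0, "px": 100}] + old

print(positional_diff(old, new))          # 6
print(keyed_diff(old, new, "trade_id"))   # 1
```

Key-based alignment is only one way to reduce such noise, and it assumes that a stable, unique key exists in both outputs, which a data format change can itself break.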

In software testing based on data replay, even a small change in the data format can cause discrepancies across the entire output. The team then has to spend a substantial amount of time manually adjusting the comparison and reviewing false positives, while the actual problems risk being overlooked. In our research paper Trading Day Logs Replay Limitations and Test Tools Applicability, we demonstrate that the outcome can be non-deterministic even when the input data is identical, due to the distributed nature of the systems used in trading and clearing.
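The non-determinism point can be illustrated with two runs of the same version on identical input. This sketch assumes, hypothetically, that the system is split into independent partitions (say, per-instrument engines) that each preserve their own message order, while the merged output stream interleaves partitions depending on timing. A naive message-by-message comparison then reports a spurious discrepancy; a comparison that only enforces the ordering the system actually guarantees does not.

```python
from collections import defaultdict

# Two runs of the SAME version on identical input: (partition, sequence number).
# Each partition emits its messages in a fixed order, but the merged stream
# interleaves partitions differently from run to run.
run_a = [("AAPL", 1), ("MSFT", 1), ("AAPL", 2), ("MSFT", 2)]
run_b = [("MSFT", 1), ("AAPL", 1), ("MSFT", 2), ("AAPL", 2)]


def naive_equal(a, b):
    """Compare merged streams message by message."""
    return a == b


def per_partition(stream):
    """Group messages by partition, preserving each partition's own order."""
    groups = defaultdict(list)
    for partition, seq in stream:
        groups[partition].append(seq)
    return dict(groups)


def ordering_aware_equal(a, b):
    """Only require ordering where the system actually guarantees it."""
    return per_partition(a) == per_partition(b)


print(naive_equal(run_a, run_b))           # False: a spurious discrepancy
print(ordering_aware_equal(run_a, run_b))  # True
```

A genuine reordering inside one partition would still be caught, because each partition's sequence is compared as an ordered list.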

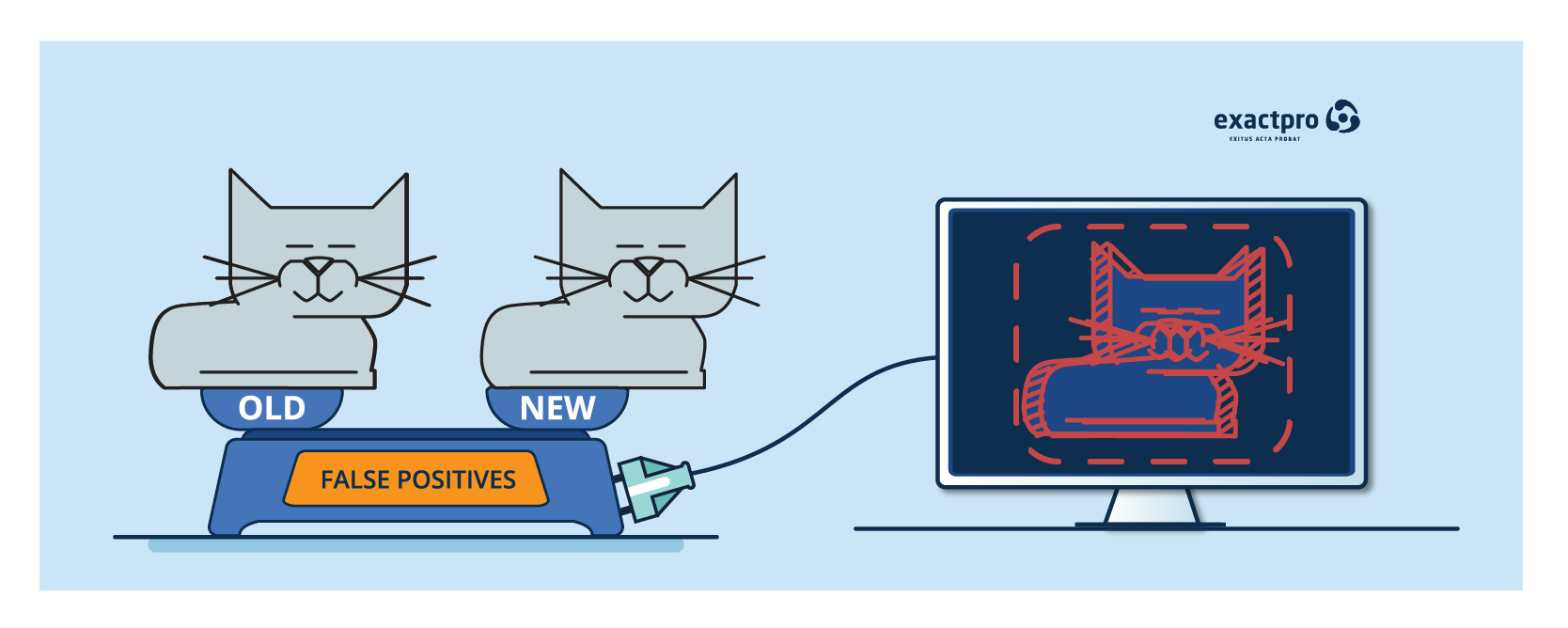

NO/YES – Extensive Functionality Changes and Minor Data Format Updates

When the system's business-as-usual behaviour serves as the only test oracle, test automation cannot be applied to new functionality. Since data replay only allows us to check existing functionality, verification of new functionality is mostly manual and its testing scope is very limited.

The data replay approach also fails when the recorded data does not exercise the new functionality at all: in that case, there is no way to detect or test it.

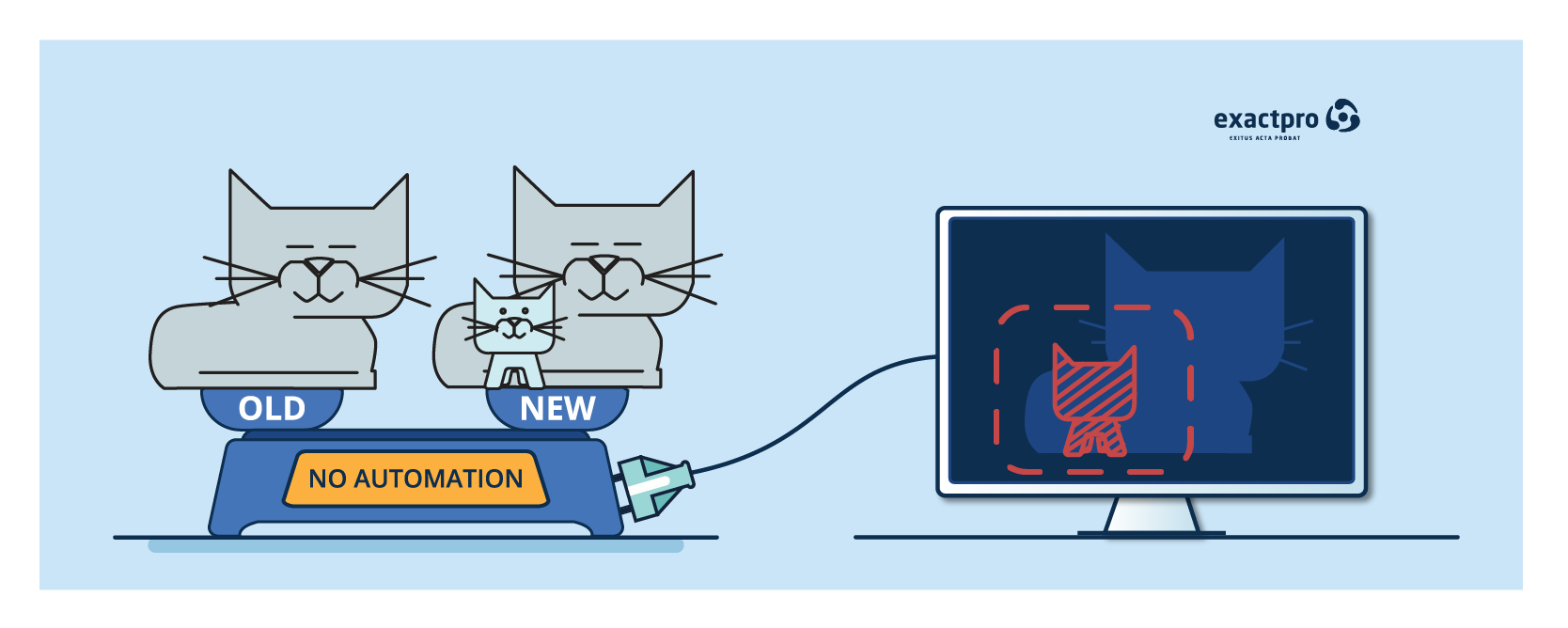

YES/YES – Extensive Functionality and Data Format Changes

Production replay alone is ineffective at supporting large-scale transformation projects. Without comprehensive models and deliberately designed test automation scenarios, most replays come down to ‘garbage in, garbage out’.

When a system undergoes a large-scale technology transformation, there are usually many changes to the input and output formats, to the way the system processes data, and to the structure of the operational processes.

The Deliberate Practice of Software Testing

The Deliberate Practice of Software Testing, based on modelling the system, enables the creation of test libraries that serve as an executable specification for complex platforms. Instead of relying on a fixed data subset, we continuously work to widen the testing scope. Instead of confining the test scope to requirements, we have learned to see the system as a whole that evolves and changes over time, not as a static sum of its parts.

Software testing is relentless learning. Model-based testing is underpinned by the understanding that the best software testing instrument is the human brain. We need to create a mental model of the system – the theory of everything – implement it in code – Build Software to Test Software – and use it to produce a large number of relevant test scenarios along with their expected outcomes. With the deliberate testing approach, we can go through any permutation of the data that the model describes.
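A model in code can be as small as a function that predicts the expected outcome for a given input, combined with systematic enumeration of the input space. The sketch below uses `itertools.product` to enumerate every combination of a few parameters of a hypothetical order-entry model; the validation rules, parameter values and `MAX_QTY` limit are illustrative assumptions, not any real venue's rules. The quantity values are deliberately chosen around the boundary rather than copied from recorded traffic.

```python
from itertools import product

# Hypothetical, deliberately tiny model of order-entry validation. The rules
# below are illustrative assumptions, not any real venue's specification.
MAX_QTY = 1_000_000
SIDES = ("BUY", "SELL")
QUANTITIES = (0, 1, MAX_QTY, MAX_QTY + 1)   # values around the boundaries
PRICE_BANDS = ("BELOW_BAND", "IN_BAND", "ABOVE_BAND")
SESSIONS = ("OPEN", "HALTED")


def expected_outcome(side: str, qty: int, band: str, session: str) -> str:
    """The model: predicts how the system should respond to one order."""
    if session == "HALTED":
        return "REJECT:SESSION"
    if not 0 < qty <= MAX_QTY:
        return "REJECT:QTY"
    if band != "IN_BAND":
        return "REJECT:PRICE_BAND"
    return "ACCEPT"


def generate_scenarios():
    """Enumerate every combination of modelled parameters with its expected result."""
    for side, qty, band, session in product(SIDES, QUANTITIES, PRICE_BANDS, SESSIONS):
        yield {"side": side, "qty": qty, "band": band, "session": session,
               "expected": expected_outcome(side, qty, band, session)}


scenarios = list(generate_scenarios())
print(len(scenarios))                                     # 2 * 4 * 3 * 2 = 48
print(sum(s["expected"] == "ACCEPT" for s in scenarios))  # 4
```

Each generated scenario would then be sent to the system under test and its actual response checked against `expected`, so the oracle comes from the model rather than from a previous release.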

In contrast, data replay relies solely on existing data recordings. It is limited by the selected time window, and rare events may be missing from the test run, since they do not necessarily occur every day.
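The time-window limitation can be made measurable by comparing what a recording actually contains with the full space of combinations of interest. In this sketch the order types, trading sessions and the contents of the recorded day are all hypothetical.

```python
from itertools import product

# Hypothetical parameter domains of interest.
ORDER_TYPES = ("LIMIT", "MARKET", "STOP")
SESSIONS = ("PRE_OPEN", "CONTINUOUS", "AUCTION", "HALTED")

# Hypothetical (order type, session) pairs seen in one recorded trading day.
# Rare situations, such as a trading halt, simply did not occur in this window.
recorded_day = [
    ("LIMIT", "CONTINUOUS"), ("MARKET", "CONTINUOUS"), ("LIMIT", "PRE_OPEN"),
    ("LIMIT", "AUCTION"), ("LIMIT", "CONTINUOUS"), ("STOP", "CONTINUOUS"),
]

full_space = set(product(ORDER_TYPES, SESSIONS))
covered = set(recorded_day)
missing = sorted(full_space - covered)

print(f"covered {len(covered)} of {len(full_space)} combinations")  # covered 5 of 12 combinations
print(missing)  # combinations a replay of this day can never exercise
```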

Going back to the pixel analogy, data replay relates to model-based testing in the same way as bitmap graphics relates to vector graphics. Vector images can be easily manipulated: objects can be enlarged or made smaller without losing quality. Bitmap images cannot be scaled in the same way without a visible loss of quality.

While deliberately generated synthetic data has many benefits, there is always a chance that the model does not take into account some aspect of the system or a business flow present in production. To ensure proper test coverage, we use a set of techniques that includes process mining. For more information on this and other techniques, please refer to this WFE Focus article, this EXTENT conference video and other videos on the Exactpro YouTube channel.
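One basic building block of process mining is the directly-follows relation: for each case (for example, an order), which activity immediately follows which. The sketch below derives these relations from a hypothetical production event log and lists the flows that a hypothetical test model does not yet cover. It only illustrates the idea and is not presented as the specific technique referred to above; real process-mining tooling does considerably more, such as discovering full process models and filtering noise.

```python
from collections import defaultdict

# Hypothetical production event log: (case id, activity), already ordered by time.
event_log = [
    ("ord1", "NEW"), ("ord1", "PARTIAL_FILL"), ("ord1", "FILL"),
    ("ord2", "NEW"), ("ord2", "CANCEL"),
    ("ord3", "NEW"), ("ord3", "AMEND"), ("ord3", "FILL"),
    ("ord4", "NEW"), ("ord4", "PARTIAL_FILL"), ("ord4", "AMEND"), ("ord4", "CANCEL"),
]

# Transitions the hypothetical test model currently knows about.
MODEL_TRANSITIONS = {
    ("NEW", "PARTIAL_FILL"), ("NEW", "FILL"), ("NEW", "CANCEL"),
    ("NEW", "AMEND"), ("PARTIAL_FILL", "FILL"), ("AMEND", "FILL"),
}


def directly_follows(log):
    """Count how often activity a is immediately followed by b within one case."""
    traces = defaultdict(list)
    for case_id, activity in log:
        traces[case_id].append(activity)
    counts = defaultdict(int)
    for trace in traces.values():
        for a, b in zip(trace, trace[1:]):
            counts[(a, b)] += 1
    return dict(counts)


dfg = directly_follows(event_log)
unmodelled = {pair: n for pair, n in dfg.items() if pair not in MODEL_TRANSITIONS}
print(unmodelled)  # {('PARTIAL_FILL', 'AMEND'): 1, ('AMEND', 'CANCEL'): 1}
```

Flows reported this way are candidates for extending the model, after which the generator can produce scenarios for them alongside the ones it already covers.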

Exactpro focuses on software testing for exchanges, CCPs and financial technology vendors. We serve our clients in twenty countries on all six continents. Please contact us to learn more about how to overcome the limitations of operational day replay and release more reliable software into production faster.