
Source/Publisher: Journal of Digital Banking

Abstract:

In the dynamic landscape of the banking sector, marked by growing operational complexity and regulatory scrutiny, the pursuit of innovation demands a strategic orientation. Such a strategy, while offering advantages in cost, quality and speed, should also entail a cautious assessment of the risks inherent in integrating emerging technologies. As artificial intelligence (AI), and generative AI (GenAI) in particular, continues to advance as a driver of emerging banking technologies, its wider adoption in the financial industry calls for careful attention to the associated risks. This paper advocates a software testing approach that addresses the complexity of banking technology platforms by leveraging generative algorithms to achieve extensive test coverage, while employing rule-based analytics to refine the generated datasets and optimise coverage for faster execution and efficient resource utilisation. Such an approach is consistent with a risk-averse innovation strategy, as it balances the creativity of generative techniques with rule-based control.

Originally published by Henry Stewart Publications LLP, which reserves the copyright.

Introduction

With more data available and growing computational capabilities, the pace of innovation is rapidly increasing across industries. With its various facets (investment, corporate, retail, etc) and business use cases (transactional data processing, risk calculations, customer interaction and many others), the banking sector holds significant innovation potential. Extreme technological complexity coupled with regulatory obligations, however, imposes additional requirements on innovative implementations in the banking sector, necessitating a delicate balance between technological advancement and risk evaluation. As the banking sector grapples with the challenges of digitalisation and modernisation, the adoption of emerging technologies requires a robust quality evaluation strategy.

From the standpoint of the software testing industry, banking technology stands out due to its inherent complexities in business flows and stringent regulatory compliance requirements. It is prominently cited as an area demanding profound subject matter expertise from testers, complementing their technical skills. According to a survey by Florea and Stray, ‘Out of the one-third of the [job] ads that ask for domain-specific competencies, most of the demand is around financial services and banking systems’.1

While banks are still addressing the transformative challenges of digitising services and undertaking large-scale modernisation of the obsolete architectures of their core systems, gaining a competitive advantage from emerging technologies such as artificial intelligence (AI), blockchain and cloud is only possible if the innovation effort goes hand in hand with rigorous quality evaluation of the underlying technology platforms. To achieve that, the software testing approach needs to augment innovation efficiency by covering more system aspects while reducing the time needed for test execution and results interpretation within each testing cycle. With AI on the rise, the question becomes how to enhance the software testing strategy for banking technology with novel techniques while preserving, or even increasing, confidence in the resulting technology solutions.

Technology innovation in banking: challenges and opportunities

Digital transformation

As ‘many banks today continue to rely on outdated legacy technology that is costly to maintain or upgrade’,2 the need for a transformational shift is obvious. As industry players shape their modernisation strategies, it is crucial to set the right priorities. Although emerging technologies are often viewed ‘as a means to meet increased customer expectations’,3 their true potential lies in adoption at the core level, beyond the customer-facing or revenue-generating facets of the business, where the digital transformation effort results in a ‘holistic DT [digital transformation] framework with applied examples along the end-to-end digital maturity spectrum’.4

Such an objective proves challenging, as in many banks substantial changes are still required for core banking technology platforms. In its recent survey, Finastra highlights that, despite various banks launching digitisation initiatives, these efforts tend to be ad hoc in nature and often result in ‘digital islands [that] are not connected across the broader trade ecosystems’.5

With the adoption of new technology and the overhaul of technology stacks, however, unprecedented opportunities arise for both individual organisations and the banking industry at large. According to recent research by McKinsey and Company, ‘Modernization of banks’ technology stacks can reduce operating costs by 20–30 per cent and halve time to market for new products’.6 This transformative potential underscores the significant benefits awaiting entities that embrace technological advancements and streamline their operational frameworks.

Moreover, the regulatory aspect and its technology implications serve as yet another catalyst for innovation. According to the Basel III explainer by EY, the implementation of the new Basel framework brings significant changes that will require banks to make ‘front-to-back investments in their technology infrastructure’.7 On the positive side, larger banks can leverage these requirements to modernise their capital infrastructure, which underscores the vital role of regulatory demands, and not only market dynamics, in stimulating technological innovation across the banking sector.

AI and data strategy in banking

The intersection of AI and data strategy in the banking sector is a critical focal point, as evidenced by various research works. The McKinsey Global Institute posits a substantial potential for generative AI (GenAI) to enhance productivity in banking, estimating an increase of 2.8–4.7 per cent, which translates to a significant financial impact ranging from US$200bn to US$340bn in annual revenues.8 Moreover, potential benefits of adopting AI and advanced analytics in banking extend to internal IT operations, such as the deployment of process automation, platforms and ecosystems. According to McKinsey’s ‘Global Banking Annual Review 2023’, success in the realm of technology development and deployment is contingent upon adopting principles akin to those observed in technology companies: scaling product and service delivery, fostering a cloud-based platform-oriented architecture and enhancing capabilities to effectively address technology risks.9

The question of AI adoption is closely connected to organisational strategies around data. High-quality data is a crucial ingredient for AI models to attain performance and robustness thresholds. Conversely, a lack of data access creates organisational risks. On a strategic level, data should be central when an organisation aims to build a robust AI-powered decision layer, necessitating the development of an enterprise-wide roadmap for deploying advanced analytics and machine learning models.10 In addressing this imperative, another paper proposes a comprehensive framework for enterprise-scale AI strategy. Notably, it emphasises a holistic approach in which the availability of data architecture, data pipelines, application programming interfaces (APIs) and other essential components becomes paramount, providing the foundation ‘for building and deploying models at scale through standardised, repeatable processes’.11

Quality evaluation of complex banking technology platforms

Within the multifaceted landscape of banking technology, quality evaluation emerges as a pivotal aspect, in line with the industry trend of applying innovative strategies to improve operational efficiency.

According to the Finastra survey mentioned earlier, GenAI-driven enhancement of IT operations ranks among the top three GenAI use cases in financial services, as indicated by 33 per cent of survey respondents.12 Specifically, GenAI is cited as being applied to diverse coding and software tasks,13 a testament to the technology’s integration into the operational fabric of banking institutions and its effectiveness in practical applications.

In this context, applying GenAI to the software testing strategy is a natural step towards a robust software testing approach, especially given the challenges of assessing quality in complex, non-deterministic systems, often with hybrid architectures infused with emerging technologies such as cloud computing, AI and/or distributed ledger technology.14

Given that the banking technology domain is very diverse (often with multiple business streams implemented even within a single bank), a quality evaluation strategy should account for that diversity as well. As a systematic overview of AI and operational research topics in banking shows (covering, among others, risk management, banking regulations, customer-related studies and FinTech in the banking industry), each topic is characterised by a distinct set of techniques (modelling and simulation among them),15 which necessitates careful analysis and deliberate selection of applicable methods.

The most recent advancement in the AI space, GenAI, is generally cited as posing numerous risks: discriminatory or biased and unreliable or incorrect outcomes; intellectual property (IP), data usage and privacy breaches; and other risks (societal, employment and sustainability), along with the risk of doing nothing and missing out on the benefits of AI.16 Understanding these risks is crucial, as is differentiating between generative AI and predictive AI, since the two technologies suit different applications. Predictive AI models are better suited to tasks requiring reasoning, pattern recognition and analysis, while generative AI is better suited to applications requiring fluency, with its strengths lying in content generation.17

Enhancing a quality assurance strategy with GenAI, especially in the risk-prone banking domain, should thus be preceded by an analysis of the feasibility of applying GenAI versus traditional analytical AI, which is cited as a crucial milestone on the strategic roadmap for scaling AI within the banking sector.18 This deliberation is especially pertinent given the intricate nature of banking technology, as it shapes the methodological framework for effective quality assurance of banking technology platforms.

A roadmap for improving the efficiency of testing in the banking sector

The case for different types of AI for different software testing tasks

In the pursuit of innovation, present-day banking institutions are committed to enhancing their technological capabilities while safeguarding the value of their technology platforms. This value is gauged across three dimensions: quality, cost and speed. Since software defects decrease software quality and thereby diminish the value of software systems, the need for a robust software testing approach is evident. The purpose of a sound quality assessment strategy, however, goes beyond mere defect elimination, aiming for improvements across the entire quality, cost and speed triad: efficient defect detection through augmented coverage, cost reduction via end-to-end automation and accelerated time to market through faster test execution, results interpretation and reporting. To increase the value of a technology platform, one should thus aim for software quality assessment techniques that enable faster, cost-effective and more efficient testing processes.

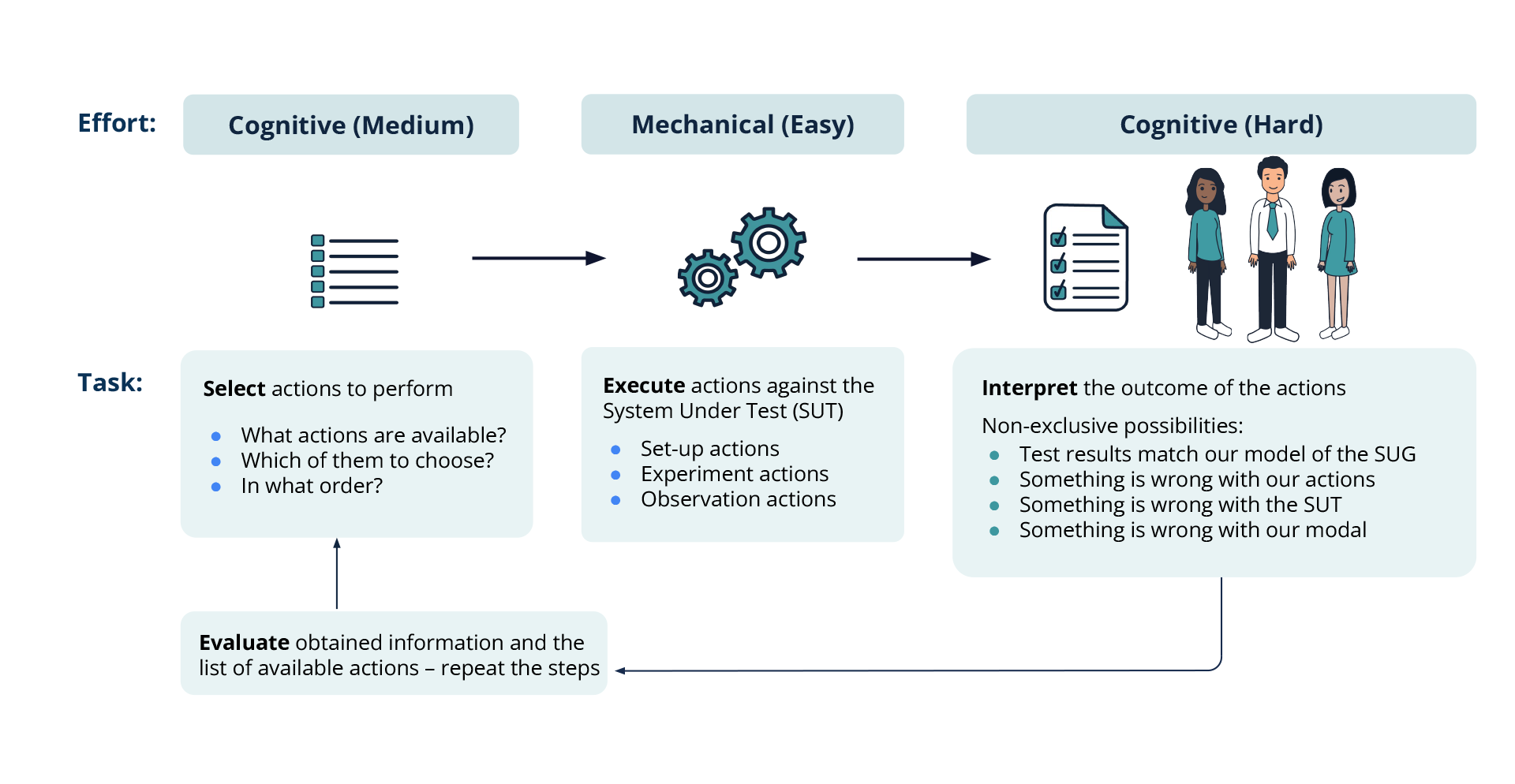

Software testing is a process of empirical technical exploration of the system under test (SUT) with the intent of finding and describing defects. The task of software testing consists of several steps: selecting a subset of possible actions to interact with a system, executing them and observing and interpreting the outcome.

With modern CI/CD (continuous integration and continuous delivery) pipelines, it is relatively easy to minimise human involvement in test execution. Automating higher-level cognitive functions, such as test idea generation or results evaluation, is a less straightforward task. Figure 1 illustrates the software testing cycle in terms of its constituent tasks and the associated cognitive effort.

With new technologies on the rise, the tasks in the testing pipeline can be automated (fully, or at least partially at the level of recommendations) by adopting various AI techniques at different stages of the software testing cycle. This requires care in choosing the technique for each stage. For example, one may use supervised learning for failure categorisation, reinforcement learning for root cause prediction or unsupervised learning for feature extraction (for more examples, see Sogeti’s State of AI Applied to Quality Engineering 2021–22 report19). For the most recent approaches, such as GenAI, it is important to understand their benefits and limitations in relation to a particular task. GenAI adds little at the test execution phase, which is relatively easy to automate, but it proves beneficial for generating thousands of test scenarios and their data point permutations; conversely, for test library optimisation, one might prefer symbolic AI techniques as rule-based discriminative mechanisms for selecting the best-performing test scripts from an extensive annotated test library.

Fig. 1 – Software testing cycle: constituent tasks and associated cognitive effort

Test automation framework for AI-driven test optimisation

The different cognitive levels of tasks within the software testing life cycle call for separate modules in the test automation framework. In this paper, we propose a framework that illustrates this principle by distributing the tasks among three components: a model, an injector tool and a test library.

A model is a digital twin of an SUT. It captures the logic of system process flows, equivalence classes of data, protocol rules and message structures. Its main purpose in the proposed test approach is twofold:

- Input generation: Based on simple template test scenarios, the model is used to generate full-fledged test scenarios that contain not only test actions and the sequence of steps but also the data values that will be part of the transactional messages formed at later stages.

- Expected output prediction: Based on the logic inside it, the model takes the input data and calculates the expected output, to be later compared with the actual output from the SUT.
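To make the two roles of the model concrete, the following is a minimal Python sketch under stated assumptions, not the framework's implementation. The equivalence-class table, the `PaymentModel` class, the `Scenario` type and the single acceptance rule are all hypothetical, and plain Cartesian-product enumeration (`itertools.product`) stands in for the generative algorithms the approach actually relies on.

```python
from dataclasses import dataclass
from itertools import product
from typing import Dict, Iterator, List, Optional

# Hypothetical equivalence classes for a payment-instruction template;
# a real model would derive them from the test basis.
EQUIVALENCE_CLASSES: Dict[str, List[object]] = {
    "currency": ["EUR", "USD", "JPY"],
    "amount": [0, 1_000, 999_999_999],  # zero, typical and boundary values
    "priority": ["NORM", "HIGH"],
}


@dataclass
class Scenario:
    template_id: str
    params: Dict[str, object]


class PaymentModel:
    """Toy stand-in for the digital twin of the SUT."""

    def __init__(self, classes: Dict[str, List[object]]):
        self.classes = classes

    def generate(self, template_id: str,
                 fixed: Optional[Dict[str, object]] = None) -> Iterator[Scenario]:
        """Input generation: expand a template into every permutation
        of the parameters it leaves free."""
        fixed = fixed or {}
        free = [name for name in self.classes if name not in fixed]
        for values in product(*(self.classes[name] for name in free)):
            yield Scenario(template_id, {**fixed, **dict(zip(free, values))})

    def predict(self, scenario: Scenario) -> str:
        """Expected output prediction: a single hypothetical business rule."""
        return "REJECTED" if scenario.params["amount"] <= 0 else "ACCEPTED"


model = PaymentModel(EQUIVALENCE_CLASSES)
for s in model.generate("PAY-001", fixed={"priority": "NORM"}):
    print(s.params, model.predict(s))
```

Fixing some parameters in the template keeps the permutation space tractable: the Cartesian product grows multiplicatively with each free parameter (18 combinations here with nothing fixed, 9 with the priority fixed), which is one reason a pruning step is needed later in the cycle.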

An injector tool is a test harness that sends protocol-formatted transactional data into the SUT. The main purpose of the tool is to mimic life-cycle actions that are typical for:

- internal processes within a bigger banking technology infrastructure (those involving data flows from/to upstream and downstream systems), or

- external processes triggered by external entities and data feeds.

In addition, requirements for the tool might include support for various protocols and APIs to connect to multiple gateways of the SUT, the ability to configure the message injection rate (controlling the transactional load) and the capability to capture system traffic and store it in a unified format suitable for smart analytics.
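A minimal sketch of two of these requirements, a configurable injection rate and traffic capture in a unified format, might look as follows. It assumes the SUT gateway can be wrapped in a plain Python callable (`send`) and uses JSON Lines as a hypothetical unified format; `inject` and `fake_gateway` are illustrative names, and a real injector would speak the SUT's actual protocols over network connections.

```python
import json
import time
from typing import Callable, Iterable


def inject(messages: Iterable[dict], send: Callable[[dict], dict],
           rate_per_sec: float, log_path: str) -> int:
    """Send messages at no more than `rate_per_sec` and append each
    request/response pair to a JSON Lines traffic log."""
    interval = 1.0 / rate_per_sec
    sent = 0
    with open(log_path, "a", encoding="utf-8") as log:
        for msg in messages:
            started = time.monotonic()
            reply = send(msg)  # immediate acknowledgement only; results are checked later
            log.write(json.dumps({"ts": time.time(), "request": msg,
                                  "response": reply}) + "\n")
            sent += 1
            # Sleep for the rest of the slot to hold the configured load.
            remaining = interval - (time.monotonic() - started)
            if remaining > 0:
                time.sleep(remaining)
    return sent


def fake_gateway(msg: dict) -> dict:
    """Hypothetical SUT stub that acknowledges every message."""
    return {"id": msg["id"], "status": "ACK"}
```

For example, `inject(({"id": i} for i in range(100)), fake_gateway, rate_per_sec=50, log_path="traffic.jsonl")` would take roughly two seconds and leave 100 request/response records in the log for later parsing. Writing raw pairs rather than interpreting them on the fly keeps injection decoupled from result checking.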

A test library is a collection of automated test scripts used at different stages of the software testing life cycle. It is not a static construct, however, but rather a constantly evolving dataset of data representations of test scenarios.

The main logic behind test scenarios reflects the functionality of the banking subsystem being tested. For example, when evaluating the quality of a payment system, test scenarios should cover various parameters of a payment instruction, specific equivalence classes of the values within a Swift ISO 20022 message and the sequence of system actions around particular messages, verifying not only that messages are correctly formed but also that the actions carried in the messages sent to the SUT result in correct system state transitions.
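The state-transition part of such a scenario can be checked against a simple transition table. The sketch below assumes a hypothetical four-state payment life cycle (`RECEIVED`, `VALIDATED`, `SETTLED`, `REJECTED`); the states of an actual payment system, and the messages that drive them, would come from its own specification.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical payment life cycle: allowed next states for each state.
ALLOWED: Dict[str, Set[str]] = {
    "RECEIVED": {"VALIDATED", "REJECTED"},
    "VALIDATED": {"SETTLED", "REJECTED"},
    "SETTLED": set(),   # terminal
    "REJECTED": set(),  # terminal
}


def illegal_transitions(states: List[str],
                        allowed: Dict[str, Set[str]] = ALLOWED) -> List[Tuple[str, str]]:
    """Return every (from, to) pair in the observed sequence that the table forbids."""
    return [(a, b) for a, b in zip(states, states[1:])
            if b not in allowed.get(a, set())]


def verify(states: List[str], expected_final: str) -> bool:
    """Pass only if every transition is legal and the payment ends in the expected state."""
    return bool(states) and not illegal_transitions(states) and states[-1] == expected_final
```

Returning the offending pairs, rather than a bare pass/fail, gives the analyst the exact step at which the SUT diverged from the expected life cycle.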

The scenarios themselves should be organised as a three-tier collection:

- Source scripts, ie basic templates capturing the logic of a test scenario

- Input test scripts produced through automated generation when the generative algorithms within the model create numerous permutations of the basic template scenario

- Test execution scripts that are fed into the injection tool to be run against the SUT; they contain not only full sets of values to be sent to the system but also the details of a system output that is expected as the system’s response to a test scenario execution
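One way to keep the three tiers traceable to each other is sketched below. The class and field names are hypothetical; the sketch only illustrates that every execution script keeps a link to the generated input script it came from, which in turn points back to its hand-crafted source template.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class SourceScript:
    """Tier 1: hand-crafted template capturing the scenario logic."""
    script_id: str
    steps: List[str]


@dataclass
class InputScript:
    """Tier 2: one generated permutation of a source template."""
    source_id: str
    params: Dict[str, object]


@dataclass
class ExecutionScript:
    """Tier 3: fully valued messages plus the model's expected response."""
    input_script: InputScript
    messages: List[dict]
    expected: Dict[str, object]


@dataclass
class ScriptLibrary:
    sources: Dict[str, SourceScript] = field(default_factory=dict)
    inputs: List[InputScript] = field(default_factory=list)
    executions: List[ExecutionScript] = field(default_factory=list)

    def add_source(self, source: SourceScript) -> None:
        self.sources[source.script_id] = source

    def trace(self, execution: ExecutionScript) -> SourceScript:
        """Walk an executable script back to its source template."""
        return self.sources[execution.input_script.source_id]
```

This back-link is what allows a failed execution to be reported against the scenario logic a human wrote, rather than against one of thousands of generated permutations.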

The test approach follows from the characteristics of the framework components described above and proceeds through the following stages, repeated iteratively to improve efficiency:

- Test generation: Hand-crafted scripts are fed into the model to generate numerous test scenario modifications by combing through possible parameter value permutations; for each of those scenarios, the model also enriches the data with protocol/API-related details and predicts expected outcomes, which are included in the test execution script (all the scripts, both source and generated ones, constitute the test library).

- Test execution: The injector tool takes the scripts generated by the model and runs them against the actual system; the tool also collects test execution traffic received from the system and stores it in a raw format.

- Test output pre-processing: The test harness parses the traffic to flag invalid outputs and cuts the valid transactional data into separate fragments labelled in accordance with particular test runs.

- Test results interpretation and annotation: The test harness triggers the checks that validate and reconcile the actual output data against the expected output predicted by the model. At this stage, the framework generates test reports showing passed and failed actions/validation checks; additionally, test output data is labelled with the test parameters and their unique combinations (coverage points) triggered by particular test cases, resulting in an interpretation dataset. Because the model contains code that assigns natural language annotations to some of the coverage points, the interpretation dataset can be passed to large language models for further analysis.

- Coverage analysis and test library optimisation: Each test case is evaluated in terms of its model coverage weight, and a symbolic AI reduction algorithm is applied to discard the least-performing scenarios while preserving coverage. This step is crucial for test coverage evaluation and in-depth exploration of test results. The annotated data obtained at this stage can be reused in subsequent iterations of the test cycle to assist the test generation stage. Regression testing can then be performed on an optimised subset of test scripts, which significantly reduces test execution time while preserving test coverage (and without compromising defect detection capabilities).
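No specific reduction algorithm is named above, so the sketch below swaps in a standard greedy set-cover heuristic as one plausible rule-based choice. It assumes each test case is annotated with the set of coverage points it triggers (as produced at the annotation stage) and treats a test's weight as the number of still-uncovered points it adds; the test IDs and coverage labels are hypothetical.

```python
from typing import Dict, List, Set


def reduce_suite(coverage: Dict[str, Set[str]]) -> List[str]:
    """Greedy set cover: keep picking the test that adds the most uncovered
    points until the reduced suite covers everything the full suite covers."""
    remaining: Set[str] = set().union(*coverage.values())
    selected: List[str] = []
    while remaining:
        # max() returns the first best candidate, so sorting the IDs
        # makes tie-breaking deterministic.
        best = max(sorted(coverage), key=lambda t: len(coverage[t] & remaining))
        selected.append(best)
        remaining -= coverage[best]
    return selected


# Hypothetical annotated test library: test ID -> coverage points triggered.
library = {
    "T1": {"ccy:EUR", "amount:zero"},
    "T2": {"ccy:EUR", "ccy:USD", "amount:typical"},
    "T3": {"ccy:USD", "amount:zero"},
    "T4": {"ccy:EUR"},
}
print(reduce_suite(library))  # ['T2', 'T1']
```

Greedy set cover does not guarantee the smallest possible subset, but it is simple, deterministic and keeps full coverage of the annotated points, which matches the goal of shrinking the regression suite without losing coverage.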

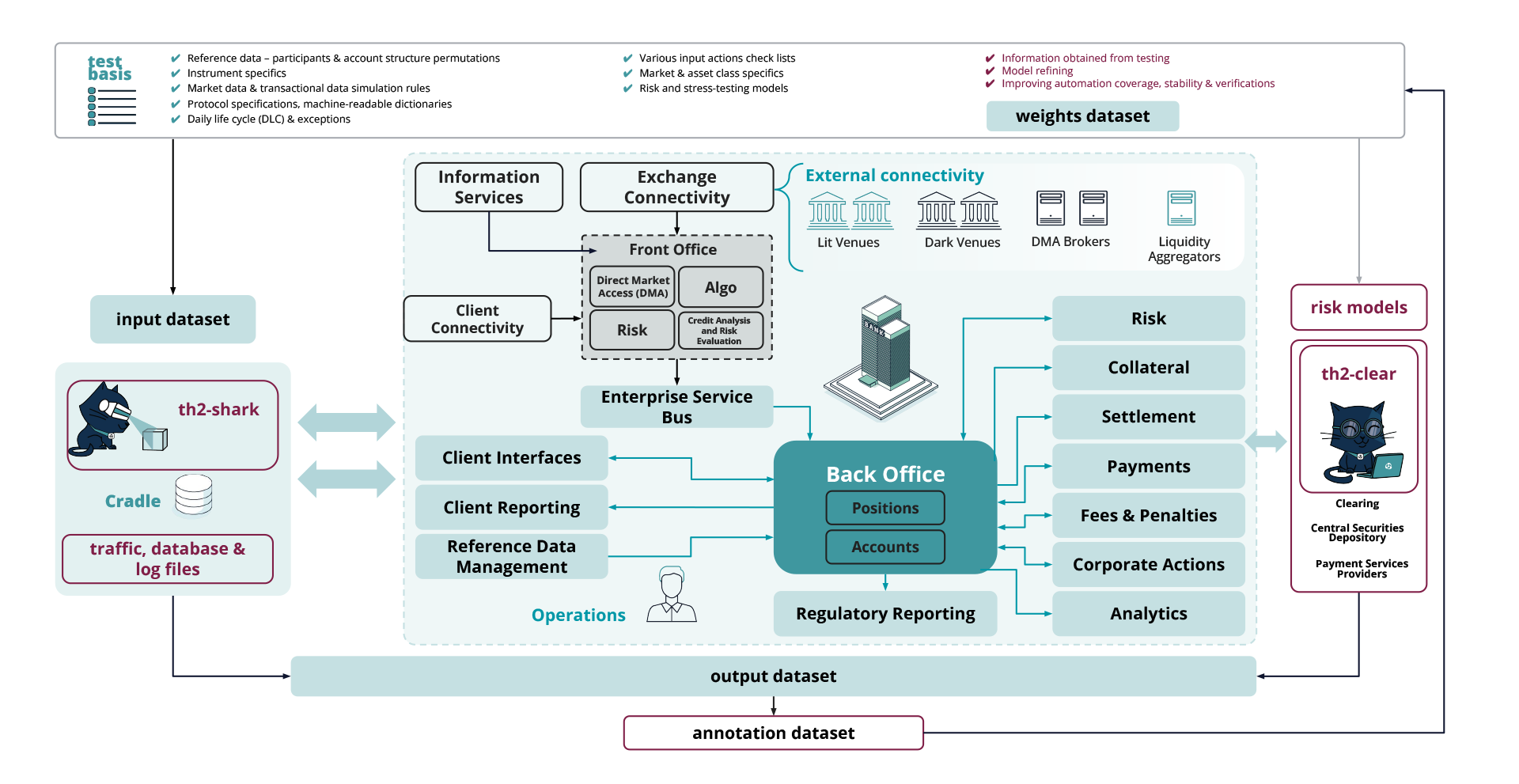

A sample implementation demonstrating the testing pipeline for investment banking technology is shown in Figure 2:

Fig. 2 – High-level architecture of an AI-based test automation framework for an investment banking technology platform

Applying the AI testing roadmap in an industrial setting

Owing to its capability to produce meaningful data permutations and support complex state transitions, the proposed multistep data-driven approach can be applied to a wide variety of use cases in the banking industry, addressing domain complexity on many levels. Figure 2 illustrates a use case in investment banking: the AI testing cycle starts with permutations of data points extracted from the test basis (asset classes, reference and market data specifics, participants, account structure parameters, etc), which are fed into the SUT; the resulting system traffic is then analysed step by step to parse the data, extract features, check the data against the model/rules and/or reconcile data streams from different system components, and refine the model for subsequent iterations.
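The parsing and reconciliation steps can be sketched as two small functions. The sketch assumes captured traffic is stored as JSON Lines, that records from different system components share a `trade_id` key and that the compared fields are known in advance; all of these, and the function names, are illustrative assumptions rather than details of the framework.

```python
import json
from typing import Dict, List, Tuple


def parse_traffic(raw_lines: List[str]) -> Tuple[List[dict], List[str]]:
    """Split raw captured lines into valid records and lines flagged as invalid."""
    valid: List[dict] = []
    invalid: List[str] = []
    for line in raw_lines:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            invalid.append(line)
            continue
        if isinstance(record, dict) and "trade_id" in record:
            valid.append(record)
        else:
            invalid.append(line)
    return valid, invalid


def reconcile(upstream: List[dict], downstream: List[dict],
              fields: List[str]) -> Dict[str, List[str]]:
    """Match records from two components by trade_id and report the breaks."""
    up = {r["trade_id"]: r for r in upstream}
    down = {r["trade_id"]: r for r in downstream}
    return {
        "missing_downstream": sorted(up.keys() - down.keys()),
        "missing_upstream": sorted(down.keys() - up.keys()),
        "mismatched": sorted(t for t in up.keys() & down.keys()
                             if any(up[t].get(f) != down[t].get(f) for f in fields)),
    }
```

Reporting missing and mismatched records separately mirrors the typical break categories an analyst would investigate: a record lost between components points to a different kind of defect than one whose values were altered in transit.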

The complexity stemming from domain-specific functionality is addressed by AI modelling, with the GenAI approach to test design allowing for better test coverage. At the same time, the technology-related challenge of quickly executing numerous multi-parameter tests makes the case for restrictive symbolic AI techniques that optimise the test suite, aiming for faster execution times and supporting human analysts in interpreting test results. In addition, balancing generative algorithms with rule-based restriction mechanisms helps curate the generated output, reducing the risk of data misrepresentation (eg invalid parameter combinations or unrealistic values).
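Such a rule-based restriction mechanism can be as simple as a list of named predicates applied to every generated parameter set. The three rules below are hypothetical examples of invalid-combination and unrealistic-value checks (the JPY rule reflects the fact that the currency has no minor unit in ISO 4217); a production rule base would be derived from the domain specification.

```python
from decimal import Decimal
from typing import Callable, List, Tuple

Rule = Tuple[str, Callable[[dict], bool]]

# Hypothetical rules; each predicate returns True when the parameter set is acceptable.
RULES: List[Rule] = [
    ("amount is non-negative", lambda p: p["amount"] >= 0),
    ("amount below plausibility cap", lambda p: p["amount"] <= Decimal("1e9")),
    ("no minor units for JPY",
     lambda p: p["currency"] != "JPY" or p["amount"] == p["amount"].to_integral_value()),
]


def curate(candidates: List[dict],
           rules: List[Rule] = RULES) -> Tuple[List[dict], List[Tuple[dict, List[str]]]]:
    """Keep generated parameter sets that pass every rule; record why the others fail."""
    kept: List[dict] = []
    rejected: List[Tuple[dict, List[str]]] = []
    for params in candidates:
        failed = [name for name, check in rules if not check(params)]
        if failed:
            rejected.append((params, failed))
        else:
            kept.append(params)
    return kept, rejected
```

Keeping the names of the failed rules alongside each rejected candidate makes the curation step auditable, which matters when generated data feeds tests in a regulated domain.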

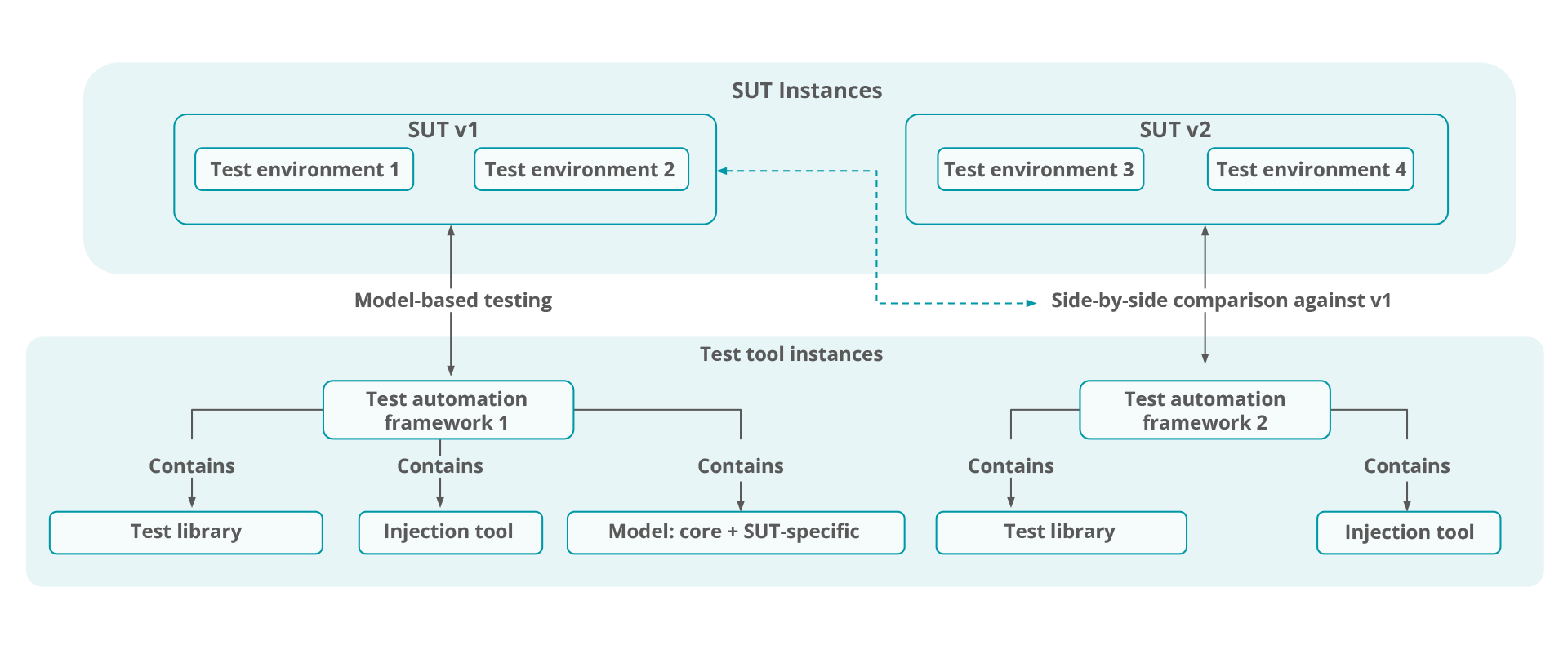

These motivations correspond to the two modes in which the approach can be used, as demonstrated by the sample architecture in Figure 3:

Fig. 3 – Two modes of application of the proposed test automation approach in an industrial setting

- Test automation framework 1 is utilised to perform functional checks, based on the model-based testing approach for test generation and test results analysis (in this setup, the model itself is a crucial component).

- Test automation framework 2 can be used on an optimised subset of test cases to compare system outputs between releases (SUT v1 versus SUT v2) rather than to compare the SUT against the model. This mode is primarily applicable to smoke or regression testing tasks.
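In the second mode no model is needed: outputs captured from two releases are compared directly. The sketch below assumes outputs keyed by test case ID and a hypothetical set of volatile fields (timestamps, message IDs) that legitimately differ between runs and are therefore excluded from the comparison.

```python
from typing import Dict, FrozenSet, List

# Hypothetical fields expected to change between any two runs.
VOLATILE: FrozenSet[str] = frozenset({"timestamp", "msg_id"})


def normalise(record: Dict[str, object],
              ignore: FrozenSet[str] = VOLATILE) -> Dict[str, object]:
    """Drop fields that are not meaningful for release-to-release comparison."""
    return {k: v for k, v in record.items() if k not in ignore}


def compare_releases(v1: Dict[str, dict], v2: Dict[str, dict]) -> List[str]:
    """Return IDs of test cases whose normalised outputs differ between
    SUT v1 and SUT v2, including cases present in only one run."""
    diffs: List[str] = []
    for case in sorted(v1.keys() | v2.keys()):
        a, b = v1.get(case), v2.get(case)
        if a is None or b is None or normalise(a) != normalise(b):
            diffs.append(case)
    return diffs
```

The quality of this mode depends heavily on the volatile-field list: too short and every run reports spurious differences, too long and genuine regressions are masked.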

The approach has shown its effectiveness in real-world quality assessment projects, leaving the team with the following takeaways:

- Compared with hand-crafted automation scripts, test libraries that were generated and refined automatically provided greater code coverage and more diverse equivalence classes.

- Test optimisation supported by data annotations/traceability labels proved useful for refining regression test suites.

- Test automation guided by the principle of separating test execution from the model and the reconciliation checks significantly reduces test execution time. Compared with an approach where a test harness awaits a response for each initiated transaction (resulting in end-to-end daily life-cycle testing that takes up to several hours), the proposed data-driven architecture enables much faster test execution: thousands of transactions can be processed in just several seconds (precise benchmarks depend on various parameters, such as the hardware and software setup of the test environment, the complexity of the underlying business domain and the size of the test library). Furthermore, the approach allows for modelling flexibility, as the model can be updated and reused for output prediction and reconciliation checks without rerunning the actual tests.
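The modelling flexibility described in the last takeaway follows from storing SUT outputs and evaluating them separately. In the sketch below, which uses hypothetical rules, function names and data, a check that fails under one version of the model is re-evaluated against an updated model using the same stored outputs, so nothing is re-injected into the SUT.

```python
from typing import Callable, Dict


def evaluate(captured: Dict[str, dict], inputs: Dict[str, dict],
             predict: Callable[[dict], str]) -> Dict[str, bool]:
    """Compare stored SUT outputs with a model's predictions, test case by test case."""
    return {case: out["status"] == predict(inputs[case]) for case, out in captured.items()}


def model_v1(params: dict) -> str:
    """Hypothetical initial rule: any positive amount is accepted."""
    return "ACCEPTED" if params["amount"] > 0 else "REJECTED"


def model_v2(params: dict) -> str:
    """Hypothetical refined rule: amounts above a limit are rejected."""
    return "ACCEPTED" if 0 < params["amount"] <= 1_000_000 else "REJECTED"


inputs = {"TC1": {"amount": 500}, "TC2": {"amount": 5_000_000}}
captured = {"TC1": {"status": "ACCEPTED"}, "TC2": {"status": "REJECTED"}}  # stored SUT traffic
print(evaluate(captured, inputs, model_v1))  # {'TC1': True, 'TC2': False}
print(evaluate(captured, inputs, model_v2))  # {'TC1': True, 'TC2': True}
```

Here the failure of `TC2` turns out to be a gap in the model rather than a defect in the SUT; once the model is corrected, the verdicts are recomputed in memory from the stored traffic.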

Conclusion

This paper presents an approach integrating GenAI techniques with traditional symbolic logic methods to address the challenges facing the present-day banking technology sector. The industry’s intricate demands, stemming from the functional complexity of banking software as well as from market-driven and regulation-triggered technological shifts, create a strong case for differentiating between the methodologies used to evaluate the quality of underlying technology platforms. While the GenAI approach effectively addresses coverage challenges by accommodating diverse scenarios and parameter permutations, it is best used in tandem with traditional analytical AI techniques that optimise coverage, resulting in faster yet coverage-effective test execution. By combining GenAI and traditional symbolic logic, the proposed approach offers a nuanced solution for ensuring operational robustness and efficiency within the dynamic landscape of technological innovation (see also section 2.2.3, ‘How Generative AI Complements Predictive AI’, of the UK Finance report20).

Additionally, the paper demonstrates that the confluence of AI, data strategy and regulatory considerations presents a multifaceted landscape for innovation within the banking sector and, thus, should be taken into consideration when building a long-term quality assessment strategy. The integration of AI techniques into organisational IT processes (including the software testing pipeline) not only addresses quality-related challenges but also aligns with broader industry imperatives, including the modernisation of technology stacks, adherence to regulatory frameworks and pursuit of enhanced productivity and efficiency through advanced analytics. The study also positions AI-driven testing as a transformative force in shaping the future trajectory of banking technology, ensuring a delicate balance between innovation and operational robustness.

References

(1) Florea, R. and Stray, V. (2019) ‘The Skills That Employers Look for in Software Testers’, Software Quality Journal, Vol. 27, pp. 1449–1479. https://doi.org/10.1007/s11219-019-09462-5

(2) Cooperman, D. (2023) ‘How Banks Can Transform Trade Finance’.

(3) Finastra (2023) ‘Financial Services State of the Nation Survey 2023’, available at https://www.finastra.com/financial-services-state-nation-survey-2023 (accessed 16th March, 2024).

(4) Bhalla, R. and Osta, E. (2021) ‘Digital Transformation and the COVID-19 Challenge’, Journal of Digital Banking, Vol. 5, No. 4, pp. 291–304.

(5) Finastra, ref. 3 above.

(6) Bionducci, L., Botta, A., Bruno, P., Denecker, O., Gathinji, C., Jain, R., et al. (2023) ‘On the Cusp of the Next Payments Era: Future Opportunities for Banks’, available at https://www.mckinsey.com/industries/financial-services/our-insights/the… (accessed 16th March, 2024).

(7) EY (2023) ‘Basel III Endgame. What You Need to Know’.

(8) Bionducci, Botta, Bruno, Denecker, Gathinji, Jain, et al., ref. 6 above.

(9) McKinsey and Company (2023) ‘Global Banking Annual Review 2023: The Great Banking Transition’, available at https://www.mckinsey.com/industries/financial-services/our-insights/glo… (accessed 16th March, 2024).

(10) Deakin, B. (2023) ‘Best Practices and Important Considerations for AI and Digital Transformation in an Economic Downturn’, Journal of Digital Banking, Vol. 8, No. 1, pp. 30–36.

(11) Agarwal, A., Singhal, C. and Thomas, R. (2021) ‘AI-Powered Decision Making for the Bank of the Future’, McKinsey & Company, available at https://www.mckinsey.com/industries/financial-services/our-insights/ai-… (accessed 16th March, 2024).

(12) Finastra, ref. 3 above.

(13) Kamalnath, V., Lerner, L., Moon, J., Sari, G., Sohoni, V. and Zhang, S. (2023) ‘Capturing the Full Value of Generative AI in Banking’, McKinsey & Company, available at https://www.mckinsey.com/industries/financial-services/our-insights/cap… (accessed 16th March, 2024).

(14) Treshcheva, E., Yavorsky, R. and Itkin, I. (2020) ‘Toward Reducing the Operational Risk of Emerging Technologies Adoption in Central Counterparties Through End-to-End Testing’, Journal of Financial Market Infrastructures, Vol. 8, No. 3.

(15) Doumpos, M., Zopounidis, C., Gounopoulos, D., Platanakis, E. and Zhang, W. (2023) ‘Operational Research and Artificial Intelligence Methods in Banking’, European Journal of Operational Research, Vol. 306, No. 1, pp. 1–16.

(16) UK Finance (2023) ‘The Impact of AI in Financial Services: Opportunities, Risks and Policy Considerations’, available at https://www.ukfinance.org.uk/policy-and-guidance/reports-and-publicatio… (accessed 16th March, 2024).

(17) Ibid.

(18) Kamalnath, Lerner, Moon, Sari, Sohoni and Zhang, ref. 13 above.

(19) Sogeti (2022) ‘State of AI Applied to Quality Engineering 2021–22’, Demystifying Machine Learning for QE, available at https://www.sogeti.com/ai-for-qe/section-1-get-started/chapter-3/ (accessed 16th March, 2024).

(20) UK Finance, ref. 16 above.