
The article was first published in the December 2024 issue of the World Federation of Exchanges Focus Magazine.

Maxim Nikiforov, Senior Project Manager, Commodity Exchanges, Exactpro

Financial systems regulators shun being overly prescriptive when formulating quality or coverage metrics for the multitude of financial institutions (FIs) under their supervision. As a result, FIs are left to apply their own judgement and tools to assess the fulfillment of their quality obligations.

A 100% scale is commonly used to signal “complete” test coverage, or full test automation, when assessing the quality of complex financial technology platforms. But is it always clear what the “100%” implies and which requirements it actually satisfies? In this article, I aim to examine why this approach is fundamentally at odds with comprehensive quality assessment, and why it does not lead to representative test coverage.

The approach to quality assessment that sets “100%” goals is often based on a traceability matrix of quality requirements derived from user stories. The International Software Testing Qualifications Board (ISTQB) Glossary defines a user story as “a high-level user or business requirement […] in the everyday or business language capturing what functionality a user needs and the reason behind this [...].” [1] The downside of this approach is that the resulting quality metrics are driven by high-level, static descriptions of user needs, which do not necessarily convey the full context or reflect how deeply a given requirement actually needs to be tested.

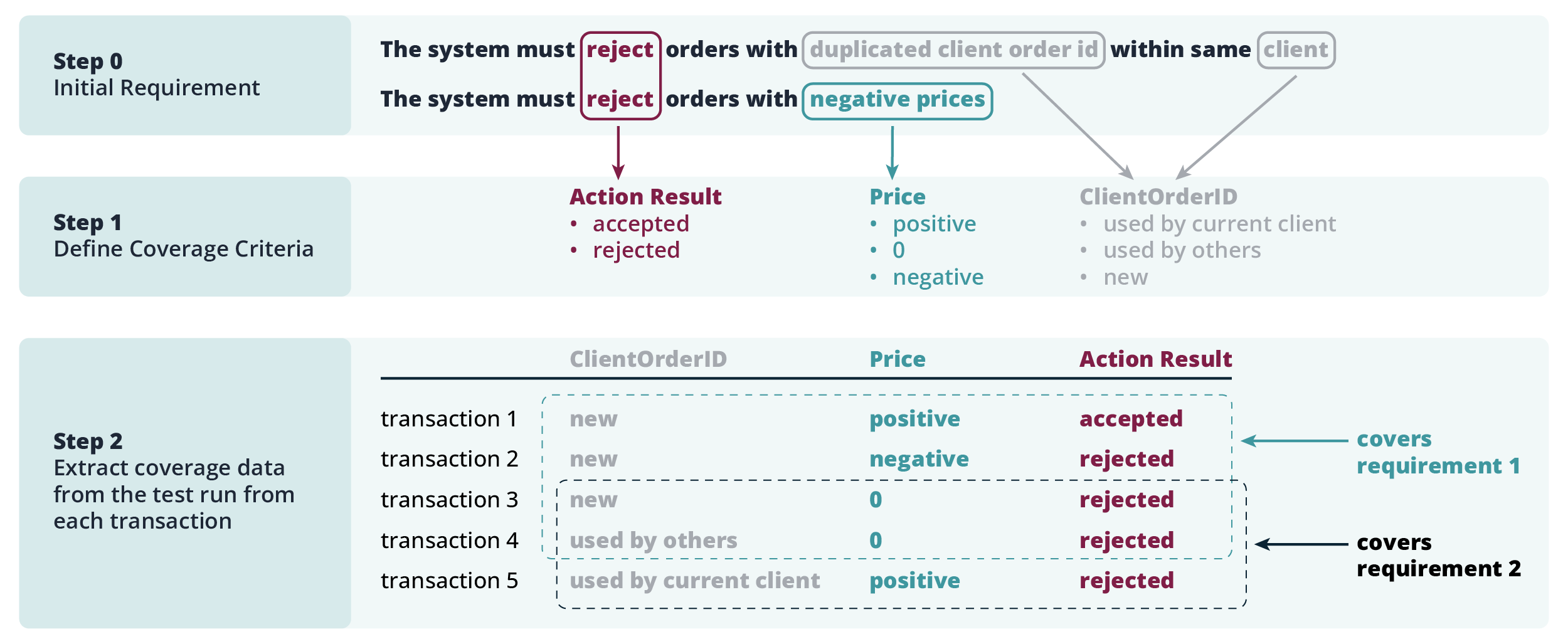

For example, if a user expects an order with a negative price to be rejected, the user story will state that fact, and the resulting test will aim to confirm it. What software testing should do instead is challenge this requirement and try to prove the opposite: check whether there are, in fact, conditions under which a negative price is accepted, which would mean detecting an issue (a bug). Covering the “price-result” field combination in depth would require checking as many value and parameter combinations as possible. This would ensure a truly comprehensive quality assessment, as opposed to one derived from following the initial requirement to the letter.
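To make the contrast concrete, the sketch below checks the negative-price requirement across combinations of parameters instead of with a single confirming test. It is a minimal illustration under stated assumptions: `submit` is a hypothetical stand-in for the system under test, with a deliberately seeded defect (price validation applied to new orders only), and the parameter values are invented for the example.

```python
from itertools import product

# Hypothetical parameter space around the "price" field.
PRICES = [-100.0, -0.01, 0.0, 0.01, 100.0]
SIDES = ["buy", "sell"]
ACTIONS = ["new", "amend"]
ORDER_TYPES = ["limit", "stop_limit"]


def submit(price: float, side: str, action: str, order_type: str) -> str:
    """Hypothetical stand-in for the system under test.

    Seeded defect for illustration: price validation runs on new orders
    only, so a negative price slips through on amendment.
    """
    if action == "new" and price <= 0:
        return "rejected"
    return "accepted"


def find_negative_price_acceptances():
    """Try every combination and collect those that contradict the requirement."""
    violations = []
    for price, side, action, order_type in product(PRICES, SIDES, ACTIONS, ORDER_TYPES):
        result = submit(price, side, action, order_type)
        if price < 0 and result == "accepted":
            violations.append((price, side, action, order_type))
    return violations


if __name__ == "__main__":
    total = len(PRICES) * len(SIDES) * len(ACTIONS) * len(ORDER_TYPES)
    found = find_negative_price_acceptances()
    print(f"{len(found)} of {total} combinations accept a negative price")
    for combo in found:
        print(combo)
```

Running it reports 8 of the 40 combinations as accepting a negative price, all of them amendments. A single test submitting a new negative-priced order would have passed and merely confirmed the requirement as written.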

Examining the nature of outages, we can conclude that a failure of a complex system can rarely be traced back to an isolated error [2]. It is often a combination of factors, e.g. a large number of minor individual issues, each considered non-critical and with their common context ignored, accumulating into a larger problem. That’s why it is important for the test framework to encompass all relevant conditions and parameters that could in any way affect system behaviour, as well as their combinations. It is the only way to account for the interconnectedness of a complex system. This approach implies putting system data first, operating on the transaction level rather than the requirements level, and thinking beyond the perceived linearity between business requirements and the disparate sets of tests derived from them.
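To see why the number of combinations matters, consider a purely illustrative count (not drawn from any real system). If a test covers $k$ independent parameters and parameter $i$ can take $n_i$ distinct values, exhaustively covering their combinations requires

$$
N = \prod_{i=1}^{k} n_i ,
$$

so even $k = 10$ parameters with $n_i = 5$ values each give $N = 5^{10} = 9\,765\,625$ combinations. The count grows multiplicatively with every parameter added, which is why broad parameter coverage has to be paired with deliberate value selection and optimisation.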

Due to its universality and algorithmic nature, a “data-first” approach helps mitigate common challenges of the “requirements-centred” approach. These include, but are not limited to:

- ambiguity in requirements analysis;

- difficulty keeping the test library up to date and requirements consistent with each other;

- test coverage quality depending solely on the professional qualities of the QA personnel involved;

- the QA team having an obstructed view of the system instead of seeing it as an environment of interconnected elements, components and processes; and, as a result,

- the test library providing only superficial coverage.

Admittedly, a data-driven practice is impossible to implement in the absence of a data-first mindset or a unified data repository and processing workflow. It has no place in siloed infrastructures or among undigitised processes. But with a digitisation culture coupled with powerful and now affordable CPUs, the ability to aggregate and process massive volumes of transaction data can truly transform a test approach.
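As an illustration of aggregating transaction data into one format, here is a minimal sketch that maps two differently shaped sources onto a single record type. It assumes, for illustration only, that order traffic is captured as FIX tag=value messages (using the standard tags 35 MsgType, 11 ClOrdID, 54 Side, 44 Price and 38 OrderQty) and that another component emits JSON-style log records; the `Event` schema and the log field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

SOH = "\x01"  # FIX field delimiter (ASCII Start of Heading)

# Map FIX values onto the unified vocabulary (only the values used here).
FIX_MSG_TYPES = {"D": "new_order", "G": "amend", "F": "cancel"}
FIX_SIDES = {"1": "buy", "2": "sell"}


@dataclass(frozen=True)
class Event:
    """Hypothetical unified record for one transaction-level event."""
    source: str
    msg_type: str
    order_id: str
    side: Optional[str]
    price: Optional[float]
    qty: Optional[float]


def from_fix(raw: str) -> Event:
    """Parse a FIX tag=value message using tags 35, 11, 54, 44 and 38."""
    fields = dict(pair.split("=", 1) for pair in raw.strip(SOH).split(SOH))
    return Event(
        source="fix",
        msg_type=FIX_MSG_TYPES.get(fields["35"], fields["35"]),
        order_id=fields["11"],
        side=FIX_SIDES.get(fields.get("54", "")),
        price=float(fields["44"]) if "44" in fields else None,
        qty=float(fields["38"]) if "38" in fields else None,
    )


def from_log(record: dict) -> Event:
    """Map a hypothetical JSON-style log record from another component."""
    return Event(
        source="log",
        msg_type=record["type"],
        order_id=record["id"],
        side=record.get("side"),
        price=record.get("price"),
        qty=record.get("qty"),
    )


if __name__ == "__main__":
    fix_msg = SOH.join(["35=D", "11=ORD1", "54=1", "44=101.5", "38=10"]) + SOH
    log_rec = {"type": "new_order", "id": "ORD1", "side": "buy", "price": 101.5, "qty": 10.0}
    print(from_fix(fix_msg))
    print(from_log(log_rec))
```

Once every source lands in the same shape, the same checks, comparisons and coverage analyses can run over all captured data, regardless of where an event originated.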

A traceability matrix does play an important role as a reporting tool across the industry’s use cases. However, it should be taken with a grain of salt: it should merely be a way to visualise more complex coverage, mapping the results of thousands of tests to a list of items that is easy to view and interpret. A matrix representation can certainly facilitate parametric analysis and reporting and help identify coverage gaps, but it can in no way be the primary source of proper coverage. In this often misunderstood “chicken-and-egg” causality dilemma, proper test coverage (comprehensive, and yet traceable) is not the product of a well-defined matrix; rather, it is the result of a holistic, data-driven testing practice, for which the coverage matrix is merely a convenient “user interface”.
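The idea of the matrix as a derived view can be sketched in a few lines: individual test results, each tagged with the requirements it exercises, are rolled up into one row per requirement, and requirements that no test touches surface as gaps. The requirement IDs, result records and tagging scheme below are hypothetical.

```python
# Hypothetical requirement IDs known to the project.
REQUIREMENTS = ["REQ-PRICE-01", "REQ-AMEND-02", "REQ-CANCEL-03"]

# Hypothetical executed tests, each tagged with the requirements it exercises.
RESULTS = [
    {"test": "t1", "requirements": ["REQ-PRICE-01"], "passed": True},
    {"test": "t2", "requirements": ["REQ-PRICE-01", "REQ-AMEND-02"], "passed": False},
    {"test": "t3", "requirements": ["REQ-AMEND-02"], "passed": True},
]


def coverage_matrix(results, requirements):
    """Roll individual test results up into one summary row per requirement."""
    matrix = {req: {"tests": 0, "failed": 0} for req in requirements}
    for result in results:
        for req in result["requirements"]:
            # Tests may touch requirements missing from the list; give them a row too.
            row = matrix.setdefault(req, {"tests": 0, "failed": 0})
            row["tests"] += 1
            if not result["passed"]:
                row["failed"] += 1
    return matrix


def coverage_gaps(matrix):
    """Requirements that no executed test has touched."""
    return sorted(req for req, row in matrix.items() if row["tests"] == 0)


if __name__ == "__main__":
    matrix = coverage_matrix(RESULTS, REQUIREMENTS)
    for req, row in matrix.items():
        print(f"{req}: {row['tests']} tests, {row['failed']} failed")
    print("gaps:", coverage_gaps(matrix))
```

Here the matrix is computed from the results rather than defining them, so adding thousands more tests changes the rows without any change to how the matrix is built.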

High-calibre, objective, data-driven quality assessment can be achieved by following these principles:

- All data, including the traffic and execution results of the standard test library and all exploratory tests, should be aggregated in a unified format for further processing. All automation, including artificial intelligence (AI)-enabled automation, hinges on this principle.

- The test approach should cover not just a selection of, but all of the system parameters defining system behaviour, as well as their permutations, and should reflect the system’s evolution throughout its lifecycle in a timely manner. The exact approach may vary depending on project goals; we consider system modelling to be the most effective.

- The approach should be embodied in an integrated automated test library covering the entire relevant scope of parameters and processes, both explicitly known and implicit, instead of relying on formal specifications only.

- The more parameters are factored into testing, both static (e.g. order fields) and dynamic (e.g. reference, instrument and user data; system and order book states; system actions such as order cancellation or amendment), the more unique conditions will be verified.

- The test framework should be able to pinpoint cases of non-determinism on two levels: when shaping the verification checks and when reporting. Since fields can potentially accept an infinite number of values, a finite subset of values should be chosen using relevant test design techniques, such as equivalence partitioning or boundary value analysis. Dynamic parameters, such as timestamps or system-generated identifiers, need to be processed so that test results are comparable between test runs.

- Despite the efforts described in the first five principles to make the scope and nature of the checks included in test coverage finite and measurable, non-determinism and non-finiteness will still occur due to the sheer number of interacting variables. Based on priorities, the testing team can agree with the client on the most appropriate methods for optimising the testing actions to achieve maximum feasible, traceable and consistent coverage.

- The optimised test subset may or may not be treated as a conditional 100%, depending on the client’s reporting preferences. Either way, both parties should understand this 100% as the best feasible attempt to create representative test coverage under the given project conditions.
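The non-determinism principle above can be sketched as follows. This is a minimal illustration under stated assumptions, not a reference implementation: `boundary_values` uses boundary value analysis as one possible way to pick a finite subset of values (prices are assumed to be integer ticks), and `normalise_run` shows one way to process dynamic parameters so two runs can be compared. The field names and the placeholder scheme are hypothetical.

```python
from typing import Dict, List

# Hypothetical field names; a real system would define its own.
ID_FIELDS = {"order_id", "exec_id"}  # system-generated, differ on every run
TIME_FIELDS = {"timestamp"}          # wall-clock values, differ on every run


def boundary_values(low: int, high: int, step: int = 1) -> List[int]:
    """Boundary value analysis for an integer field valid on [low, high]:
    values just outside, on, and just inside each boundary."""
    return [low - step, low, low + step, high - step, high, high + step]


def normalise_run(messages: List[Dict]) -> List[Dict]:
    """Make one run's output comparable with another's.

    Timestamps are dropped; IDs are replaced with placeholders numbered by
    first appearance, so references between messages (e.g. a fill pointing
    at its order) are preserved while the raw values are not.
    """
    id_map: Dict[str, str] = {}
    normalised = []
    for msg in messages:
        out = {}
        for key, value in msg.items():
            if key in TIME_FIELDS:
                continue
            if key in ID_FIELDS:
                out[key] = id_map.setdefault(value, f"ID{len(id_map) + 1}")
            else:
                out[key] = value
        normalised.append(out)
    return normalised


def runs_match(run_a: List[Dict], run_b: List[Dict]) -> bool:
    """True if two runs differ only in their dynamic values."""
    return normalise_run(run_a) == normalise_run(run_b)


if __name__ == "__main__":
    # Price field in integer ticks, assumed valid on [1, 100000].
    print(boundary_values(1, 100_000))
    run_a = [{"type": "new", "order_id": "A1", "price": 100, "timestamp": "09:00:00.001"},
             {"type": "fill", "order_id": "A1", "exec_id": "E9", "price": 100, "timestamp": "09:00:00.004"}]
    run_b = [{"type": "new", "order_id": "B5", "price": 100, "timestamp": "09:30:00.120"},
             {"type": "fill", "order_id": "B5", "exec_id": "F3", "price": 100, "timestamp": "09:30:00.125"}]
    print(runs_match(run_a, run_b))
```

Numbering IDs by first appearance, rather than masking them all with one token, keeps the structure of the run visible: if a fill in one run refers to a different order than in the other, the normalised outputs still differ.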

An approach developed in accordance with these principles allows for pervasive automation. It is also universal: it does not depend on testers’ skills or on the quality of pre-defined specifications, because it is rooted in the quality of the data made available for testing. Last, but not least, the results of complex testing can be successfully mapped back to the initial requirements (please see Fig. 1 below).

Fig. 1. A simplified representation of a data-driven approach to software testing

The purpose of software testing is to provide accurate, relevant, interpretable and affordable information, not merely to confirm that the system works as expected. Thus, in building complex systems, it is crucial to invest in a comprehensive test coverage and evaluation framework that fully reflects the complexity of the system being tested. Such a framework ensures holistic, data-driven quality assessment and gives appropriate attention to each item on the compliance list without being limited to that set of proposed testable items.

Reach out to us via exactpro.com if you would like to continue the conversation on how independent AI-enabled software testing can help you Deliver Better Software, Faster.

References:

- [1] The ISTQB® Glossary.

- [2] Richard I. Cook, MD, Cognitive Technologies Laboratory, University of Chicago (2000). How Complex Systems Fail.