By Alexey Zverev, CEO and co-founder, Exactpro

Distributed ledger technology (DLT) innovation is an important trend shaping the financial services industry. It’s no surprise that, under the pressure of ongoing digital transformation, many financial institutions are keen to adopt DLT in developing their platforms. However, when the innovation is introduced at the scale of a major capital market participant, its potential impact is likely to be shared by the wider financial services community. To facilitate a smooth transition to emerging technologies, regulatory bodies require systemically important financial market infrastructures to ensure that, while innovating, they also stay operationally resilient.

Operational resilience: regulatory perspective

Following last year’s disruptive events, the regulators continue to increase their focus on the financial sector’s operational resilience, i.e. the ability to prevent, adapt and respond to, recover and learn from operational disruption[1].

In the UK, the ‘Building operational resilience’ Policy Statement, produced by the FCA, PRA and Bank of England in March 2021[2], introduces new rules that go into effect early next year. In the preceding discussion paper dated July 2018[1], the regulatory bodies outline the main challenges to operational resilience and provide guidance on identifying important business services and assessing the impact of potential disruptions.

The European regulatory framework is consistent with its UK counterpart in terms of these challenges and sees emerging technologies along with growing dependency on data as generating a need for stronger operational resilience[3].

As outlined in the EC’s regulation on a pilot regime for FMIs based on DLT, the operators of market infrastructures are expected to “ensure that the overall IT and cyber arrangements related to the use of their DLT are proportionate to the nature, scale and complexity of their business”[4]. To fully comply with this requirement, regulated entities need to establish adequate software testing procedures for their core platforms. But what sort of testing would be considered regulatory-grade?

Achieving regulatory standard in software testing

Regulatory-grade testing is software testing that helps firms ensure “continued transparency, availability, reliability and security of their services and activities, including the reliability of smart contracts used on the DLT”[4]. It also provides information sufficient for making robust decisions. To achieve this, it is crucial that testing activities generate regulatory-grade data, i.e. data that satisfies the requirements of integrity, security and confidentiality, availability, and accessibility.

Speaking broadly, in order to meet regulatory requirements, you need to prove your ability to manage your application so that it provides a fair, consistent and uninterrupted transaction management service for all participants. The key to this ability is extensive knowledge of the system that you are going to manage. The only possible way of obtaining such knowledge is via experiments and observation, which is basically what software testing is. For those trying to develop and maintain complex financial systems, insufficient testing inevitably leads to failure.

Understanding the challenges

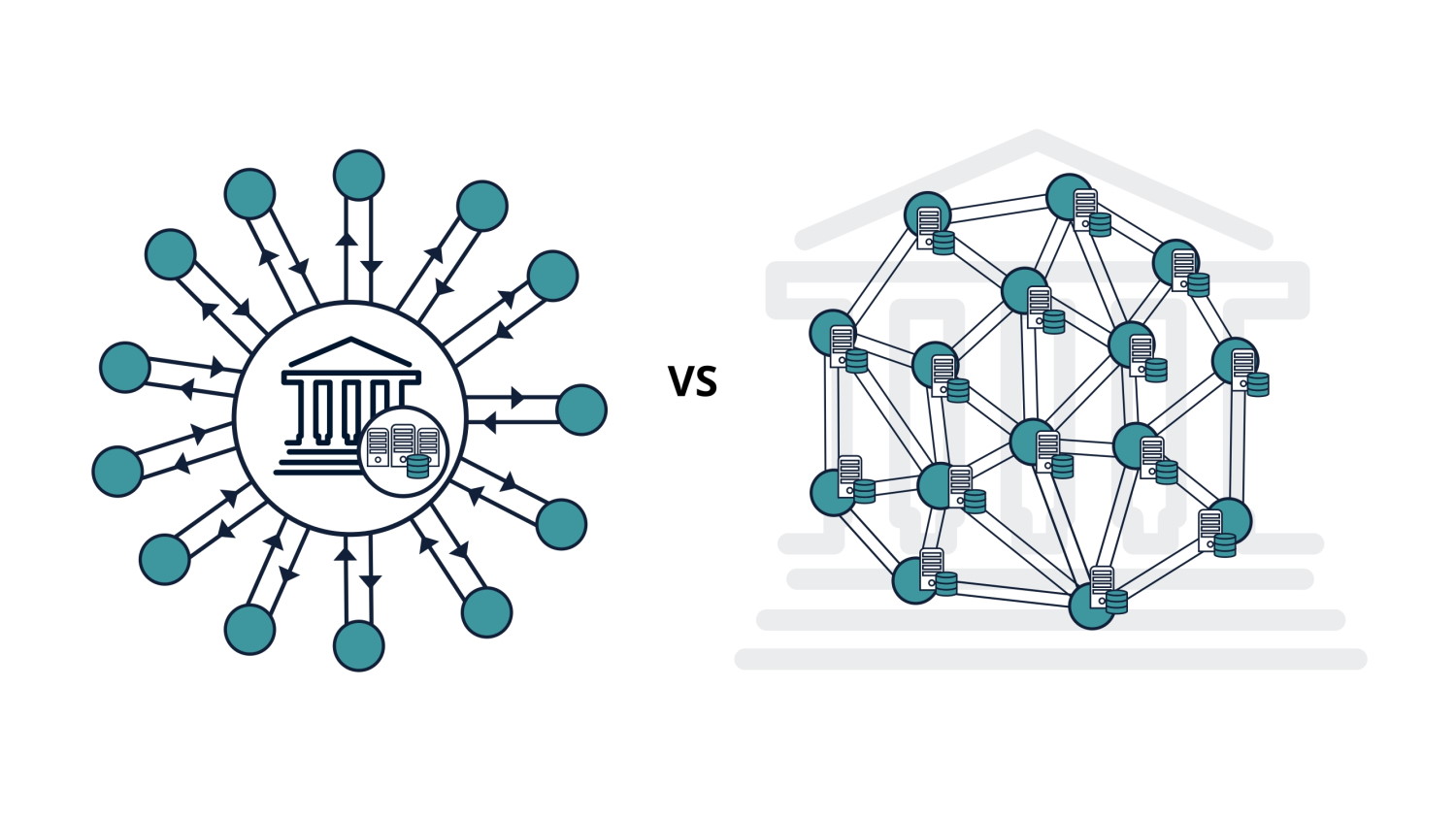

However, testing systems based on distributed blockchains is not a trivial task. For comparison, in electronic trading, all transactions on modern stock exchanges are processed by matching, clearing and settlement engines hosted in fully controlled data centers managed by the corresponding organizations. By contrast, in distributed blockchains, transactions are processed by a distributed network of nodes hosted and managed by the participants. This introduces a number of complications for software testing, such as:

- the impossibility of simulating adequate transactions without a production blockchain;

- non-deterministic response due to complexity of the state of the network caused by distributed processing;

- diversity of APIs, platforms, and application versions within the network;

- complexity of smart contract transactions involving multiple participants simultaneously;

- poor integration with legacy systems outside of the network;

- absence of a central processing point that would allow you to trace and drill down into the chain of events that led to particular outcomes.
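One way to cope with non-deterministic ordering and the lack of a central processing point is to assert invariants over what each node reports, rather than comparing against one expected sequence. The sketch below is a minimal illustration under stated assumptions: the `node_logs` structure, the node names and the transaction IDs are hypothetical, and a real check would read this data from the nodes of the network under test.

```python
from collections import Counter


def check_ledger_consistency(node_logs):
    """Compare per-node transaction logs without assuming a delivery order.

    node_logs maps a node name to the list of transaction IDs that node
    reports as committed (hypothetical structure for this sketch).
    Returns a list of findings; an empty list means the invariants held.
    """
    findings = []

    # Invariant 1: no node commits the same transaction twice.
    for node in sorted(node_logs):
        duplicates = [tx for tx, n in Counter(node_logs[node]).items() if n > 1]
        if duplicates:
            findings.append(f"{node}: duplicate commits {sorted(duplicates)}")

    # Invariant 2: every node holds the same set of transactions,
    # regardless of the order in which they arrived.
    reference = set(node_logs[sorted(node_logs)[0]])
    for node in sorted(node_logs):
        seen = set(node_logs[node])
        missing, extra = reference - seen, seen - reference
        if missing or extra:
            findings.append(
                f"{node}: missing {sorted(missing)}, unexpected {sorted(extra)}"
            )
    return findings


logs = {
    "node-a": ["tx1", "tx2", "tx3"],
    "node-b": ["tx2", "tx1", "tx3"],  # same set, different order: acceptable
    "node-c": ["tx1", "tx3", "tx3"],  # lost tx2 and committed tx3 twice
}
for finding in check_ledger_consistency(logs):
    print(finding)
```

Here `node-b` passes even though its order differs from `node-a`, while `node-c` is reported twice: once for the duplicate commit and once for the missing transaction.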

Addressing the complexity: Processes, Platforms, People

The key ingredients of regulatory-grade software testing are Processes, Platforms and People.

Setting up the software testing process

At Exactpro, we understand the software testing process as an empirical technical investigation conducted to provide stakeholders with the regulatory-grade information about the quality of the product or service under test.

The key characteristics of a good testing process are:

- Focus on observation: aiming to discover new information rather than confirming the assumptions;

- Testing is relentless learning: meaningful testing demands sufficient time investment;

- Early testing: testing activities should start as early as possible during the requirement definition stage and continue throughout the whole duration of the project;

- Independent perspective: the testing team is able to form objective judgements and has a voice to advocate for proper governance;

- Test automation: efficient testing is impossible without employing test tools - not for the sake of automation per se but rather to augment testing capabilities of highly qualified software testing engineers.

Regulatory-grade testing requires a significant time investment from highly skilled people. It is important that these people provide an independent view: they should not be the same people who built the platform in the first place, e.g. in-house developers or a software vendor.

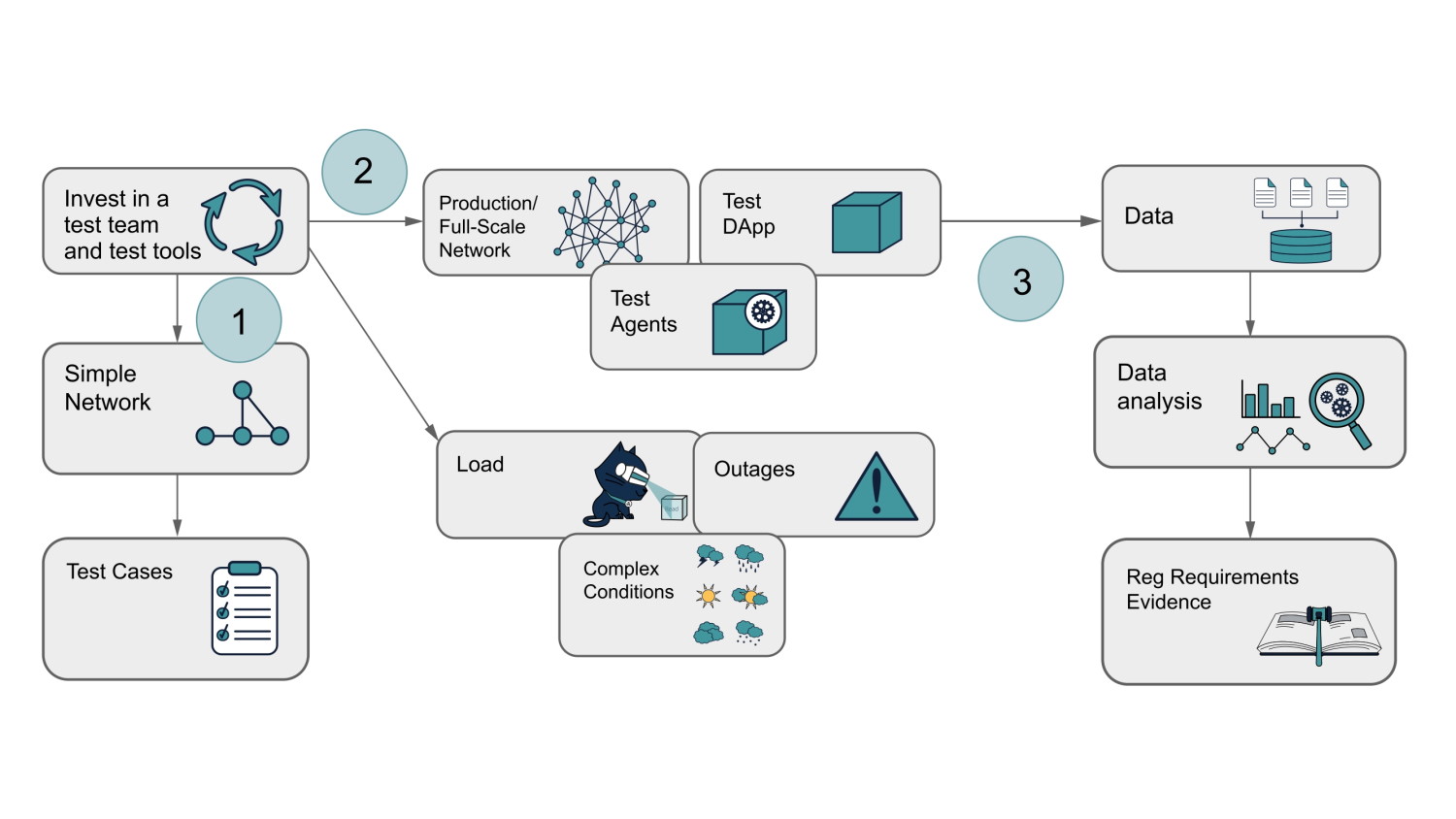

1 - As the first step, we must ensure that basic scenarios of the core functionality pass the tests in a semi-isolated controlled environment.

2 - The next step is end-to-end testing in a production or production-like network, consisting of an environment, a test version of your application distributed across this environment, and Test Agents - pieces of software that will facilitate testing and collect important information.

Using this toolkit will enable you to:

- load the system with appropriate flow of transactions covering important scenarios;

- simulate outages in the network and in the application under test;

- simulate complex conditions your application is required to sustain.

3 - Finally, from all these activities you will need to collect data containing sufficient information to understand the outcome of the tests. This data must be processed and analyzed to extract the knowledge about the system behavior and draw insights needed to provide uninterrupted service.
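The three steps above can be sketched as a toy scenario: load a network with transactions, inject an outage, then collect and analyze the recorded events. Everything in this sketch is an assumption made for illustration. `SimulatedNode` and `Agent` are hypothetical in-memory stand-ins for real nodes and Test Agents, and the simulated nodes have no resynchronization logic.

```python
from dataclasses import dataclass, field


@dataclass
class SimulatedNode:
    """In-memory stand-in for a network node (assumption: no resync logic)."""
    name: str
    online: bool = True
    committed: list = field(default_factory=list)


@dataclass
class Agent:
    """Hypothetical Test Agent: drives the scenario and records every event."""
    nodes: list
    events: list = field(default_factory=list)

    def set_online(self, node_name, online):
        for node in self.nodes:
            if node.name == node_name:
                node.online = online
        self.events.append((None, node_name, "up" if online else "down"))

    def submit(self, tx_id):
        for node in self.nodes:
            if node.online:
                node.committed.append(tx_id)
                self.events.append((tx_id, node.name, "committed"))
            else:
                self.events.append((tx_id, node.name, "unreachable"))


def run_scenario(tx_count=10, victim="node-1", outage_start=3, outage_length=4):
    """Load the network with transactions and inject one outage window."""
    agent = Agent([SimulatedNode(f"node-{i}") for i in range(3)])
    for i in range(tx_count):
        if i == outage_start:
            agent.set_online(victim, False)
        if i == outage_start + outage_length:
            agent.set_online(victim, True)
        agent.submit(f"tx{i}")
    return agent.events


def summarise(events):
    """Count per-node outcomes, skipping the up/down control events."""
    summary = {}
    for tx_id, node_name, outcome in events:
        if tx_id is None:
            continue
        counts = summary.setdefault(node_name, {"committed": 0, "unreachable": 0})
        counts[outcome] += 1
    return summary


def lagging_nodes(summary):
    """Nodes that missed at least one transaction and need investigation."""
    return sorted(name for name, counts in summary.items() if counts["unreachable"])


events = run_scenario()
summary = summarise(events)
print(summary)
print("lagging:", lagging_nodes(summary))
```

With the default parameters, `node-1` is unreachable for four of the ten transactions, so the analysis step flags it as lagging. Against a real network, the same event log would be the starting point for checking whether the node caught up after recovery.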

Building regulatory-grade platforms

The processes should be supported by the platforms. To provide the regulatory-grade testing capabilities, a test automation framework should rely on the latest technology stack and support testing at the confluence of functional and non-functional approaches.

The extreme complexity of the task implies a set of requirements for the testing framework that are crucial to ensuring an appropriate level of quality assessment.

First of all, a testing tool fit for testing distributed apps to a regulatory grade must be able to invoke tests through a variety of platforms and APIs and to run many different tests many times. Another important requirement is the ability to simulate important conditions by deploying test code into the network. To enable its users to draw insights from system behavior, the testing harness should be able to process high volumes of data. On top of that, invoking chaotic scenarios, analyzing their outcome and dealing with non-deterministic responses requires the tool to include a strong analytics module.
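To illustrate why running the same test many times matters when responses are non-deterministic, the sketch below repeats a simulated scenario and judges the distribution of outcomes rather than a single run. The latency model, the 5% slow-path rate and the 300 ms tolerance are invented for this example and do not describe any real system or framework.

```python
import random
import statistics


def simulated_settlement_latency_ms(rng):
    """Hypothetical system under test: latency varies from run to run."""
    latency = rng.gauss(120, 15)
    # Occasionally a run hits a slow path, e.g. a retry after a node timeout.
    if rng.random() < 0.05:
        latency += 400
    return max(latency, 1.0)


def run_many(runs, seed, tolerance_ms):
    """Repeat the scenario and summarise the distribution of outcomes."""
    rng = random.Random(seed)  # seeded so a failing campaign can be replayed
    samples = [simulated_settlement_latency_ms(rng) for _ in range(runs)]
    breaches = sum(1 for s in samples if s > tolerance_ms)
    percentiles = statistics.quantiles(samples, n=100)  # 99 cut points
    return {
        "runs": runs,
        "median_ms": statistics.median(samples),
        "p95_ms": percentiles[94],
        "breach_rate": breaches / runs,
    }


report = run_many(runs=500, seed=42, tolerance_ms=300)
print(report)
```

A single run of this scenario would most likely look healthy. Only the aggregate view shows how often the tolerance is breached, which is the kind of evidence an analytics module is meant to surface.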

To meet the complexity level of the present-day distributed non-deterministic platforms, we developed a software testing framework satisfying these requirements.

th2[5] is an open source toolkit providing end-to-end functional and non-functional test automation for complex distributed transaction processing systems. Built as a cloud-native, Kubernetes-driven solution, it aims to help regulated entities stay compliant and resilient to disruption, while focusing on innovation and having the freedom to embrace emerging technologies:

- it is a multi-platform framework with a powerful API, enabling intelligent interaction with many widely adopted network protocols as well as API, UI, DLT and cloud endpoints;

- its microservices architecture allows building complex test instruments and executing sophisticated test algorithms;

- it supports the GitOps paradigm, enabling CI/CD pipeline integration;

- it is designed to perform autonomous test execution;

- it is ready for the implementation of AI-driven test libraries for machine learning and advanced data analytics.

Though this set of test automation capabilities may seem comprehensive all by itself, it is still not enough to establish a robust software testing approach of a regulatory scale without highly-skilled professionals to make processes and platforms work together.

Supporting processes and platforms with people

The core qualities of people suited to testing complex distributed systems can be outlined as follows:

- a software tester’s mindset - to actively explore the system under test;

- a software developer’s skills - to be able to create code for tests that adequately cover complex behavior of the systems under test;

- a deep understanding of the business logic and technology behind the platform being tested.

The hard and soft competencies needed to operate technologically advanced platforms and implement the required processes are outlined by the Zero Outage Industry Standard (ZOIS) Association[6], an industry association that develops best practices to ensure the highest quality of IT platforms.

Conclusion

Regulators expect financial institutions operating complex distributed platforms to maintain high availability and resilience to disruption, even in a chaotic environment. This can only be achieved by intelligent end-to-end testing of a distributed application in both business-as-usual and disruption scenarios, under production-like conditions and in a full-scale network. That requires advanced test tools and a time investment from highly technical people.

With DLT projects being implemented on a greater scale, the financial services industry is rapidly transforming, adopting new technology first and regulatory standards after it. In such a context, it is very important to establish a practice of DLT innovation that is supported by extensive regulatory-grade quality assessment.

REFERENCES

[1] Building the UK financial sector’s operational resilience: Discussion paper | DP1/18 by the BoE, PRA, and FCA. July 2018.

[2] Operational resilience: Impact tolerances for important business services: Policy Statement | PS6/21 by the BoE, PRA, and FCA. March 2021.

[3] Proposal for a Regulation of the European Parliament and of the Council on digital operational resilience for the financial sector - COM(2020)595.

[4] Proposal for a regulation of the European Parliament and of the Council on a pilot regime for market infrastructures based on distributed ledger technology - COM(2020)594.

[5] th2 - an open source test automation framework: https://github.com/th2-net.

[6] Zero Outage Industry Standard Association: https://zero-outage.com/.