
1. Introduction

The financial industry has shown a strong interest in AI in recent years. Financial institutions are implementing AI/ML on such a large scale that this has stimulated cross-industry collaborative efforts aimed at exploring the area in more depth.

Interest in AI, and in the risks around it, is shared by international financial organisations, governmental structures and regulatory bodies.

One example of an industry-wide look at AI in financial services is the series of reports and press releases published by the Financial Stability Board, which is “conducting ongoing monitoring of AI and machine learning in financial services” [1, 2, 3, 4]. Interest in AI and ML also extends beyond the financial services industry. Among the more general international initiatives is the High-Level Expert Group on Artificial Intelligence established by the European Commission, whose 52 experts have already issued recommendations on how AI should be implemented across different industries [5, 6].

From the regulatory perspective, in October 2019 the FCA and the Bank of England published a joint report on the adoption of machine learning techniques across the UK financial services industry [7]. The report gives a good overview of the ML use cases most common among the firms that took part in the survey, and it offers some predictions about future ML implementations.

The survey has shown that over ⅔ of financial institutions use ML techniques in their business practices and, overall, ML has already passed the initial development stage and is entering a more mature phase. On average, the firms use ML in two business areas and this number is expected to more than double over the next three years.

Current areas of AI implementation include, among others, market surveillance systems, algo-trading platforms, conversational assistants, pricing calculators, machine-readable news analysis and insurance claims management.

2. Testing AI Systems: the Fundamentals

With AI reaching the stage of real-life implementation, the number of educational programs in this knowledge domain is impressive. Nowadays it is easy to enroll in AI-related courses at universities as well as on online educational platforms. Quite recently, the Alliance for Qualification (A4Q) launched a new certification exam covering AI from the testing perspective. The certification is accompanied by the A4Q AI and Software Testing Foundation Syllabus [8]:

“AI System Quality Characteristics: ISO/IEC 25010 establishes characteristics of product quality, including the correctness of functionality and the testability and usability of the system, among many others. While it does not yet cater for artificial intelligence, it can be considered that AI systems have several new and unique quality characteristics, which are:

- Ability to learn: The capacity of the system to learn from use for the system itself, or data and events it is exposed to.

- Ability to generalize: The ability of the system to apply to different and previously unseen scenarios.

- Trustworthiness: The degree to which the system is trusted by stakeholders, for example a health diagnostic.”

According to the syllabus, AI-based systems must meet the traditional quality characteristics of IT systems and must also be able to learn, be able to generalize and be trustworthy.

The ability to learn is quite well studied. Many sources cover the performance of learning algorithms, and the first to mention is Andrew Ng’s book Machine Learning Yearning, published on the deeplearning.ai portal [9]. In this book, the author explores how the performance of a learning algorithm depends on the size of the underlying neural network and on the data used. As for the data, an algorithm’s ability to learn depends largely on its amount. Even more importantly, it depends on the design of the training, development and test datasets: their proportions, their data distribution, their proximity to the expected production data, and so on.
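The dataset-design points above can be illustrated with a minimal sketch. The code splits labelled examples into training, development and test sets with a reproducible shuffle. It also measures how far the label distribution of one sample is from another, for example the test set against a sample of expected production data. The 80/10/10 proportions, the total variation distance check and the function names are illustrative assumptions, not a procedure taken from [9].

```python
import random
from collections import Counter
from typing import Hashable, List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_dataset(items: Sequence[T], train: float = 0.8, dev: float = 0.1,
                  seed: int = 0) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle items and split them into train/dev/test sets.

    `train` and `dev` are fractions of the whole; the remainder goes to test.
    """
    if not (0 < train and 0 <= dev and train + dev < 1):
        raise ValueError("need 0 < train, 0 <= dev and train + dev < 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_train = int(len(shuffled) * train)
    n_dev = int(len(shuffled) * dev)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_dev],
            shuffled[n_train + n_dev:])


def label_distance(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Total variation distance between the label distributions of two samples.

    0.0 means identical label proportions, 1.0 means no label in common.
    """
    if not a or not b:
        raise ValueError("both samples must be non-empty")
    ca, cb = Counter(a), Counter(b)
    return 0.5 * sum(abs(ca[k] / len(a) - cb[k] / len(b)) for k in set(ca) | set(cb))
```

A large distance between the test labels and a production sample suggests that the test set may not reflect the data the system will actually see. The choice of metric is a judgment call; any distribution comparison suited to the data would serve.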

Trustworthiness of AI-based systems is covered not only in academic books and papers but also by bodies dealing with real-life applications of AI, such as expert groups established within governmental and international cross-industry organisations.

For example, trustworthiness is discussed, among other topics, in the AI Principles proposed by the US Department of Defense’s Defense Innovation Board (DIB) as part of its AI Principles Project. These principles were approved at the DIB’s quarterly public meeting on October 31, 2019.

Another example of such joint efforts is the Veritas project, driven by the Monetary Authority of Singapore (MAS) and supported by leading global financial institutions. Announced on November 13, 2019, the project aims to promote the responsible adoption of artificial intelligence and data analytics across the financial industry. The principles published by MAS in 2018 cover fairness, ethics, accountability and transparency (FEAT) [10].

The Ethics Guidelines for Trustworthy Artificial Intelligence, presented by the EC’s High-Level Expert Group on AI in April 2019, set out the three components of trustworthiness: “(1) it should be lawful, complying with all applicable laws and regulations (2) it should be ethical, ensuring adherence to ethical principles and values and (3) it should be robust, both from a technical and social perspective since, even with good intentions, AI systems can cause unintentional harm” [5]. These components are accompanied by seven key requirements that AI must meet to be considered trustworthy:

- human agency and oversight,

- technical robustness and safety,

- privacy and data governance,

- transparency,

- diversity, non-discrimination and fairness,

- societal and environmental well-being,

- accountability [5, p.2].

In the December 4, 2019 issue of The Batch newsletter, Andrew Ng shares a practical set of “important techniques” that can be used “to persuade people to trust an algorithm”: explainability, testing, boundary conditions, gradual rollout, auditing, and monitoring and alarm [11].
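Two of these techniques, boundary conditions and monitoring with an alarm, can be sketched as a thin wrapper around any prediction function. This is a minimal sketch under the following assumptions: the class name, the fixed allowed output range, the sliding window and the rejection-ratio threshold are all hypothetical and are not taken from [11].

```python
from collections import deque
from typing import Callable, Deque, Optional


class GuardedModel:
    """Wraps a prediction function with a boundary check and a simple alarm.

    Outputs outside [low, high] are withheld (None is returned so that a human
    or a fallback path can take over). The alarm is raised when the share of
    withheld outputs among the last `window` calls exceeds `alarm_ratio`.
    """

    def __init__(self, predict: Callable[[float], float], low: float, high: float,
                 window: int = 100, alarm_ratio: float = 0.05) -> None:
        self.predict = predict
        self.low, self.high = low, high
        self.alarm_ratio = alarm_ratio
        self.recent: Deque[bool] = deque(maxlen=window)  # True = output was out of bounds

    def __call__(self, x: float) -> Optional[float]:
        y = self.predict(x)
        out_of_bounds = not (self.low <= y <= self.high)
        self.recent.append(out_of_bounds)
        return None if out_of_bounds else y

    @property
    def alarm(self) -> bool:
        if not self.recent:
            return False
        return sum(self.recent) / len(self.recent) > self.alarm_ratio
```

Keeping the guard outside the model means the same boundary and alarm logic can be reused unchanged when the underlying model is retrained.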

The ability to generalize is understood as the ability of the system to apply to different and previously unseen scenarios. It is indeed an important characteristic of an AI-based system. It is equally important for any other non-deterministic system, such as the complex platforms underpinning financial market infrastructures.

When this characteristic is discussed in relation to AI systems, it is usually associated with variance, which refers to a model’s sensitivity to the specific set of training data [8, p. 28]. However, traditional (non-AI-based) complex financial platforms that have been tested on a relatively small set of expected data also experience failures when exposed to new data. Thus, a test approach focusing on the ability to generalize is equally applicable to traditional and AI systems.
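A practical proxy for this is the gap between the failure rate on unseen inputs and the failure rate on familiar ones. For a trained model, this resembles the informal notion of variance used in [9]: how much worse the algorithm does on the dev set than on the training set. The sketch below applies the same measurement to any callable, AI-based or not. The function names and the toy system are hypothetical.

```python
from typing import Any, Callable, Sequence, Tuple

Case = Tuple[Any, Any]  # (input, expected output)


def failure_rate(system: Callable[[Any], Any], cases: Sequence[Case]) -> float:
    """Fraction of cases in which the system's output differs from the expected one."""
    if not cases:
        raise ValueError("no test cases")
    return sum(system(x) != expected for x, expected in cases) / len(cases)


def generalization_gap(system: Callable[[Any], Any],
                       familiar: Sequence[Case], unseen: Sequence[Case]) -> float:
    """Failure rate on unseen inputs minus failure rate on familiar inputs.

    For a trained model, `familiar` is the training data and `unseen` the dev set;
    for a traditional platform, `familiar` is the data it was originally tested on.
    """
    return failure_rate(system, unseen) - failure_rate(system, familiar)


# Hypothetical system that behaves correctly only on the small inputs it was tested with.
def double_small(x: int) -> int:
    return x * 2 if x < 10 else x


gap = generalization_gap(double_small, [(1, 2), (2, 4), (3, 6)], [(5, 10), (20, 40)])
print(gap)  # 0.5
```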

What are the cognitive patterns that can affect the testing of non-deterministic systems?

3. Cognitive Biases Affecting the Testing of Non-Deterministic Systems

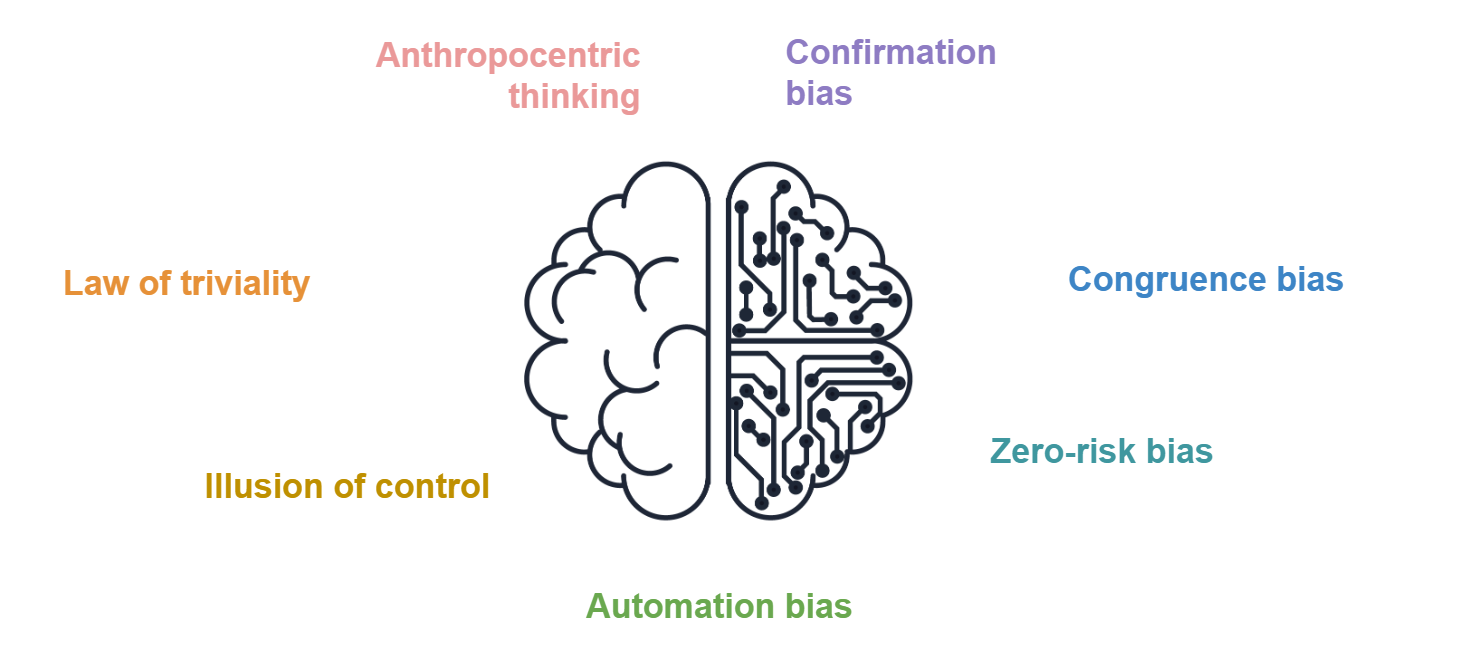

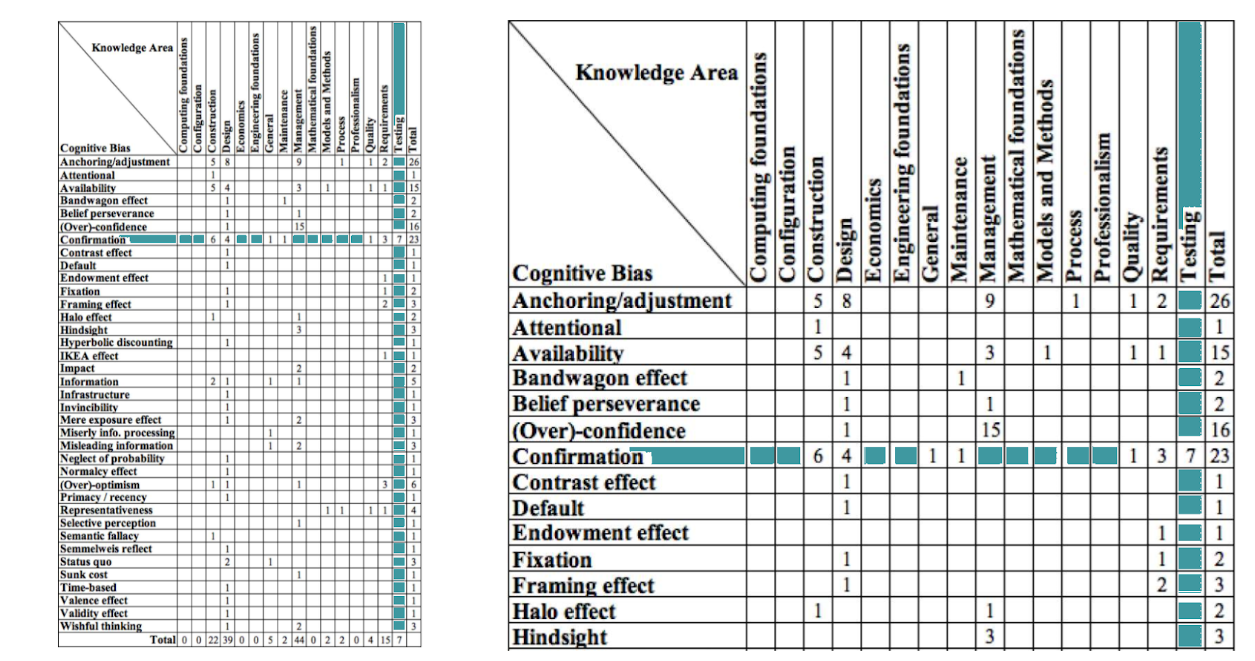

According to psychologists, a number of biases affect our judgements both at work and in everyday life, and software testing is no exception. Several cognitive biases can be associated with testing different types of AI-based or, more broadly, complex non-deterministic systems.

These biases are common in software engineering in general, but some types of systems under test tend to be more susceptible to particular ones.

The examples below attempt to map the most common areas of AI application in the financial services industry to the cognitive biases that commonly manifest during quality assurance activities in these areas.

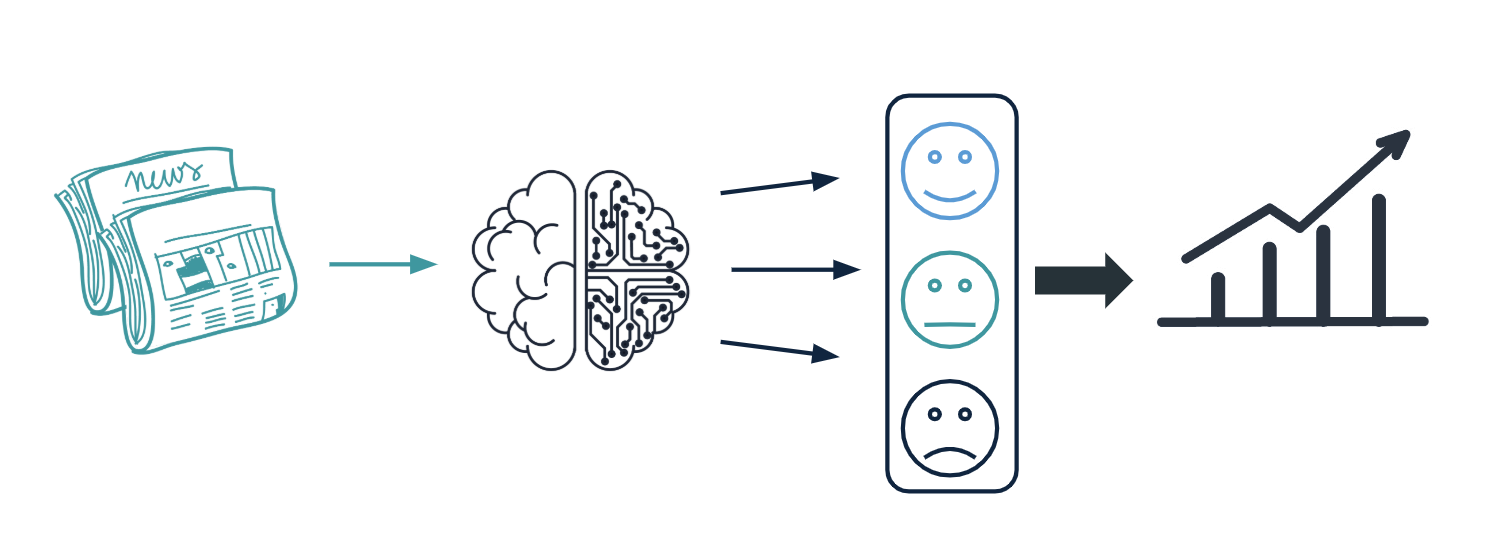

4. Machine-readable News

Machine-readable news (MRN) is news data delivered in an easy-to-consume format. This format enables AI analyzers to determine the sentiment behind news items and to extract information on new trading opportunities, market inefficiencies and event risks.

Testing this type of AI system means assessing the performance of an MRN analysis algorithm, which depends to a large extent on the underlying textual data. News pieces tend to be full of fixed views and opinions, and the sentiment generally attributed to a particular topic can differ from the actual one. This is why confirmation bias is a major problem for news media today. Confirmation bias is the tendency to interpret information in a way that affirms one’s prior beliefs or hypotheses.

When testing AI-based systems, it is important to avoid confirmation bias, which research shows to be very common in testing and in software engineering in general [12, 13].
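One way to keep an MRN test set from merely confirming prior beliefs is to include news items whose actual sentiment contradicts the sentiment usually attributed to their topic, and to report accuracy on those items separately. The sketch below does this. The labelling scheme, the subset names and the keyword classifier are hypothetical illustrations, not a real MRN product.

```python
from typing import Callable, Dict, Iterable, Tuple

# (text, expected sentiment, contrarian flag); the flag marks items whose actual
# sentiment contradicts the sentiment commonly attributed to their topic.
Example = Tuple[str, str, bool]


def accuracy_by_subset(classify: Callable[[str], str],
                       examples: Iterable[Example]) -> Dict[str, float]:
    """Accuracy reported separately for consensus and contrarian items."""
    hits = {"consensus": 0, "contrarian": 0}
    totals = {"consensus": 0, "contrarian": 0}
    for text, expected, contrarian in examples:
        key = "contrarian" if contrarian else "consensus"
        totals[key] += 1
        hits[key] += int(classify(text) == expected)
    # Subsets with no examples are omitted rather than reported as 0% or 100%.
    return {k: hits[k] / totals[k] for k in totals if totals[k]}


# Hypothetical keyword classifier that simply "agrees" with the usual view of a topic.
def naive(text: str) -> str:
    return "negative" if "recession" in text.lower() else "positive"


examples = [
    ("Recession fears deepen", "negative", False),
    ("Shares rally after earnings beat", "positive", False),
    ("Recession risk fades as data beats forecasts", "positive", True),
]
print(accuracy_by_subset(naive, examples))  # {'consensus': 1.0, 'contrarian': 0.0}
```

A single overall accuracy figure (two out of three here) would hide the fact that the classifier fails on every contrarian item.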

One of the most illustrative examples of confirmation bias in software testing is the BDD approach. This approach focuses on checking the lines in feature files rather than on exploring all possible paths of interaction with the system under test.
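In contrast to a single scripted scenario, the sketch below enumerates every path through a small state model of the system under test. This makes the paths that no feature file covers explicit. The order lifecycle is a simplified, hypothetical model, not one taken from a specific platform. The `max_len` bound is an assumption needed to keep self-loops finite.

```python
from typing import Dict, List, Optional

# Hypothetical, simplified order lifecycle; states without successors are terminal.
TRANSITIONS: Dict[str, List[str]] = {
    "New": ["PartiallyFilled", "Filled", "Cancelled", "Rejected"],
    "PartiallyFilled": ["PartiallyFilled", "Filled", "Cancelled"],
    "Filled": [],
    "Cancelled": [],
    "Rejected": [],
}


def all_paths(state: str, max_len: int,
              prefix: Optional[List[str]] = None) -> List[List[str]]:
    """Depth-first enumeration of every path from `state` to a terminal state.

    Paths that would need more than `max_len` states are dropped, so the
    self-loop on PartiallyFilled cannot make the enumeration infinite.
    """
    path = (prefix or []) + [state]
    if not TRANSITIONS[state]:
        return [path]
    if len(path) >= max_len:
        return []
    paths: List[List[str]] = []
    for nxt in TRANSITIONS[state]:
        paths.extend(all_paths(nxt, max_len, path))
    return paths


scripted = [["New", "Filled"]]  # e.g. the single happy path a feature file describes
uncovered = [p for p in all_paths("New", max_len=4) if p not in scripted]
for p in uncovered:
    print(" -> ".join(p))
```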

5. Conversational Assistants (Chatbots)

Conversational AI systems are a very common part of banking, insurance and portfolio management services. A mere list of virtual assistants’ names suggests that the most common bias in testing chatbots is likely to be anthropocentric bias. Examples include Erica (Bank of America), Debbie (Deutsche Bank), Amy (HSBC) and Amelia (Allstate).

We tend to perceive objects and processes around us by analogy with humans. A vivid example is a story published by The Washington Post about a colonel who called off an experiment with a mine-clearing robot. He was unable to watch “the burned, scarred and crippled machine drag itself forward on its last leg” and called the test “inhumane”.

Another example of this tendency is given in the research paper [14] and a related TED talk by Kate Darling about people’s compassion towards robotic toy dinosaurs.

From the software testing perspective, this bias can obscure the fact that what is obvious to a human being can be a challenge for an AI system.

With conversational agents, the most typical example is anaphora resolution. A human easily tells what a pronoun refers to, whereas a machine may simply pick the closest word in the context. Similarly, a conversational assistant can react differently to the same phrase typed with one question mark versus two, or spelled correctly versus with a typo.

Thus, when testing chatbots, one should rethink the equivalence classes and significantly broaden the datasets containing user inputs.
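One way to broaden the equivalence classes is to generate surface variants for every phrase in the test set: changed case, missing or doubled question marks, and simple typos. Any variant that the assistant classifies differently from the original phrase is then flagged. In this minimal sketch, `classify_intent` stands for an assumed interface to the chatbot’s intent recognizer, and the variant rules and the toy recognizer are hypothetical.

```python
from typing import Callable, List


def input_variants(phrase: str) -> List[str]:
    """Surface variants of a user phrase that a human would treat as the same request."""
    base = phrase.rstrip("?!. ")
    variants = {phrase, phrase.lower(), phrase.upper(), base, base + "?", base + "??"}
    # Simple typos: swap each pair of adjacent, distinct letters.
    for i in range(len(base) - 1):
        a, b = base[i], base[i + 1]
        if a.isalpha() and b.isalpha() and a != b:
            variants.add(base[:i] + b + a + base[i + 2:])
    return sorted(variants)


def inconsistent_variants(classify_intent: Callable[[str], str], phrase: str) -> List[str]:
    """Variants whose recognised intent differs from that of the original phrase."""
    expected = classify_intent(phrase)
    return [v for v in input_variants(phrase) if classify_intent(v) != expected]


# Hypothetical recognizer that stumbles on doubled question marks and on typos.
def toy_intent(text: str) -> str:
    if text.endswith("??"):
        return "unknown"
    return "balance" if "balance" in text.lower() else "unknown"


print(inconsistent_variants(toy_intent, "What is my balance?"))
```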

6. Algo Trading

Another good example of an AI-based system is an adaptive smart order router or an algo-trading platform. In a high-volume, low-latency environment, it is often impossible to predict the exact outcome of a test scenario.

The cognitive pattern likely to affect QA activities around this type of system is the so-called congruence bias.

Congruence bias occurs due to overreliance on directly testing a given hypothesis while neglecting indirect testing, that is, testing alternative hypotheses that could also explain the observed behaviour.

Congruence bias hinders the adoption of advanced software testing techniques such as passive testing and post-transactional verification.

These methods are better suited to testing AI-based systems than the more common active testing approaches. Examples of research on these methods and their practical application can be found in [15], [16], [17].
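The sketch below shows the general idea behind post-transactional verification in a deliberately simplified form. It does not predict the exact outcome of each scenario. Instead, it replays a recorded log of order events and checks invariants that must hold whatever the outcome. It is not a reproduction of the methods in the cited works. The event format and the three invariants (no overfills, no fills after a cancel, no fills through the limit price) are hypothetical simplifications.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Set


@dataclass
class Event:
    order_id: str
    kind: str            # "new", "fill" or "cancel"
    qty: int = 0         # order quantity for "new", executed quantity for "fill"
    price: float = 0.0   # limit price for "new", execution price for "fill"
    side: str = "buy"    # only meaningful on "new" events


def verify(log: Iterable[Event]) -> List[str]:
    """Check invariants over a recorded event log after the fact; return violations."""
    orders: Dict[str, Event] = {}
    filled: Dict[str, int] = {}
    cancelled: Set[str] = set()
    violations: List[str] = []
    for e in log:
        if e.kind == "new":
            orders[e.order_id] = e
            filled[e.order_id] = 0
            continue
        order = orders.get(e.order_id)
        if order is None:
            violations.append(f"{e.order_id}: {e.kind} for unknown order")
        elif e.kind == "cancel":
            cancelled.add(e.order_id)
        elif e.kind == "fill":
            if e.order_id in cancelled:
                violations.append(f"{e.order_id}: fill after cancel")
            filled[e.order_id] += e.qty
            if filled[e.order_id] > order.qty:
                violations.append(f"{e.order_id}: overfilled ({filled[e.order_id]} > {order.qty})")
            # A buy must not execute above its limit, a sell not below it.
            breach = e.price > order.price if order.side == "buy" else e.price < order.price
            if breach:
                violations.append(f"{e.order_id}: fill at {e.price} breaches limit {order.price}")
    return violations


log = [
    Event("A", "new", qty=100, price=10.0),
    Event("A", "fill", qty=60, price=9.9),
    Event("A", "fill", qty=50, price=10.0),
    Event("B", "new", qty=10, price=5.0, side="sell"),
    Event("B", "cancel"),
    Event("B", "fill", qty=10, price=5.1),
]
print(verify(log))  # ['A: overfilled (110 > 100)', 'B: fill after cancel']
```

Because the checks only look at what actually happened, they remain valid even when routing decisions and fill sequences differ from run to run.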

7. Pricing Calculator

Pricing calculators are an example of systems that used machine learning, big data and non-deterministic methods long before these methods were adopted by the industry on a large scale.

However, in some cases, such as mortgage-backed structured products, it may seem that the validation priorities were misplaced.

There is a cognitive bias called Parkinson’s law of triviality, also known as the ‘bike-shed effect’: the tendency to give disproportionate weight to trivial issues.

“Parkinson provides the example of a fictional committee whose job was to approve the plans for a nuclear power plant spending the majority of their time on discussions about relatively minor but easy-to-grasp issues, such as what materials to use for the staff’s bike shed, while neglecting the proposed design of the plant itself, which is far more important and a far more difficult and complex task” [18].

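This behavior is very common when testing sophisticated systems. Easy-to-check details attract a disproportionate share of the test effort, while the complex core logic receives less scrutiny.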

Another bias worth mentioning here, well studied in safety-critical systems, is automation bias: the tendency to over-rely on automated aids. It affects not just aircraft cockpits but also test automation, and increasingly so as software testing tools mature.

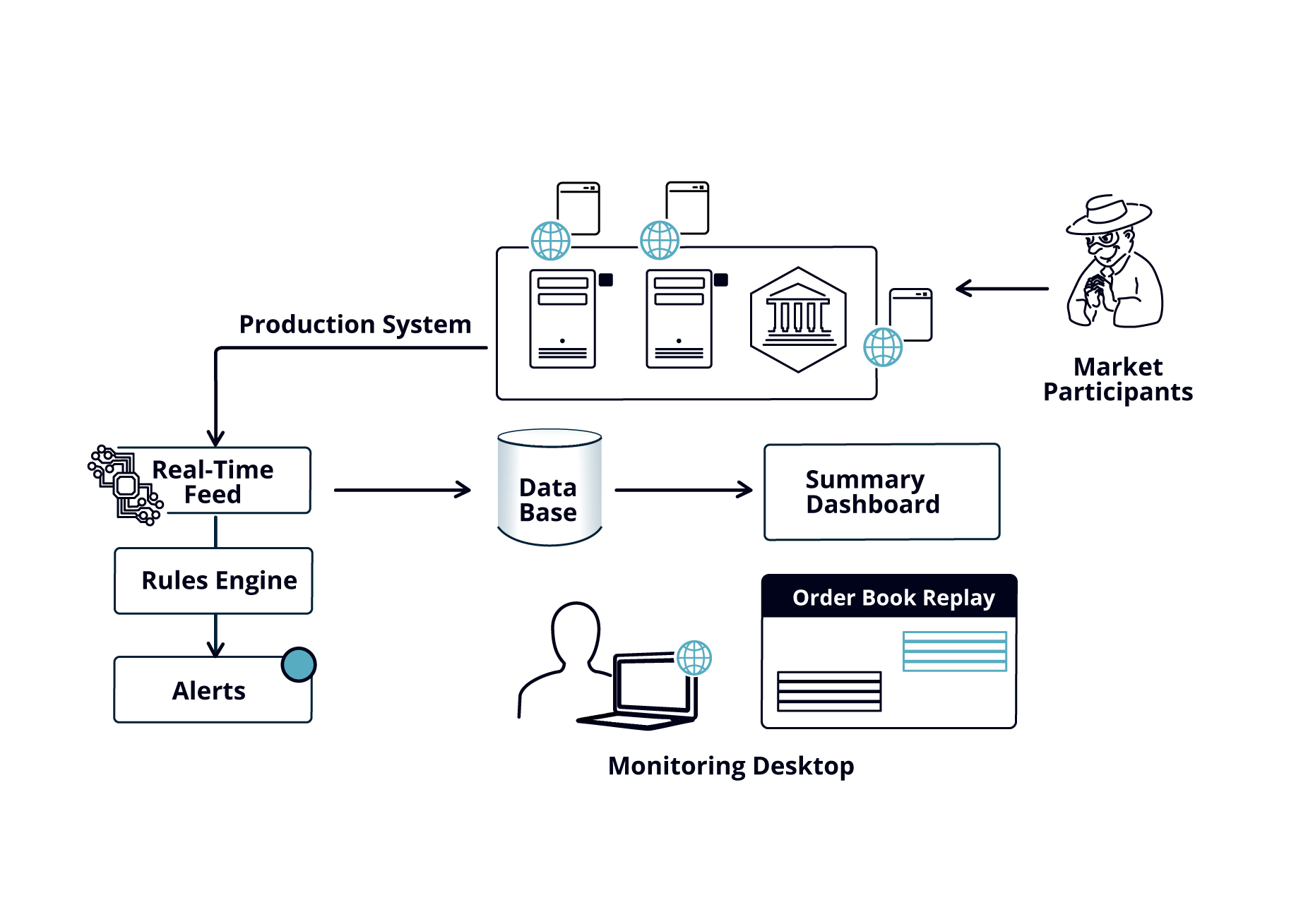

8. Fraud Detection and Market Surveillance

Proper testing is important for monitoring systems. One approach to overcoming automation bias is to use a diverse set of software testing tools. When testing market surveillance systems, it is extremely important not to rely on a single tool. Instead, one should consider using instruments based on different technologies and approaches, such as script-based tools, participant simulators and built-in custom alerts.
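Tool diversity only helps if the results of the different tools are actually compared. The minimal sketch below makes that comparison explicit. It takes the alerts raised by the surveillance system under test and the alerts that each independent test tool expects. It then reports every alert on which the sources disagree, which points either to a defect or to a blind spot of one of the tools. The source names and alert identifiers are hypothetical.

```python
from typing import Dict, Set


def disagreements(alerts_by_source: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """Map each alert raised by some, but not all, sources to the sources that raised it."""
    every_alert: Set[str] = set().union(*alerts_by_source.values())
    return {
        alert: {s for s, alerts in alerts_by_source.items() if alert in alerts}
        for alert in every_alert
        if not all(alert in alerts for alerts in alerts_by_source.values())
    }


result = disagreements({
    "surveillance_system": {"T1", "T2"},           # alerts actually raised by the system under test
    "script_checks": {"T2", "T3"},                 # alerts expected by a script-based tool
    "participant_simulator": {"T1", "T2", "T3"},   # alerts expected by a simulator
})
print(result)  # T1 and T3 are reported; T2 is confirmed by all sources
```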

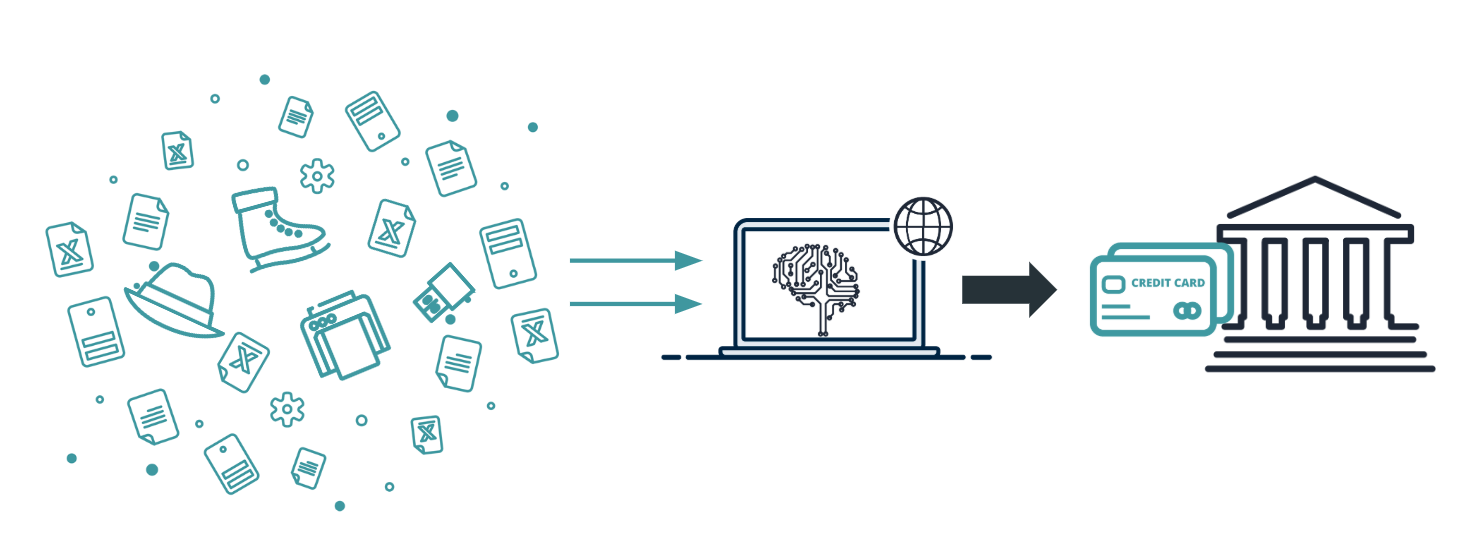

9. Insurance Claims

Use cases in insurance claims management cover processing images, text and other unstructured data in order to estimate the cost of a loss. An AI-based system uses historical data and compares it with the predicted total loss cost in order to decide how the claim should be managed.

While our experience does not yet cover testing systems of this kind, some ideas from the insurance industry serve us well.

Humans dislike risk and ambiguity. Zero-risk bias is the tendency to prefer completely eliminating one risk even when alternative options produce a greater overall reduction in risk.

In software testing, we frequently observe efforts to eliminate the risk in a single module or around new functionality without proper end-to-end testing of the whole system.
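A small worked example shows why this preference can be costly. The figures below are purely hypothetical. Each risk is expressed as expected incidents per release, so that risks in different areas can simply be added. Option A eliminates the risk in a new module, while option B halves the larger risk that only end-to-end testing would address.

```python
from typing import Dict


def residual_risk(risks: Dict[str, float], reductions: Dict[str, float]) -> float:
    """Expected incidents remaining after applying fractional reductions to each risk.

    `risks` maps an area to its expected incidents per release; `reductions` maps an
    area to the fraction of that risk removed (1.0 = fully eliminated).
    """
    return sum(r * (1.0 - reductions.get(area, 0.0)) for area, r in risks.items())


risks = {"new_module": 0.2, "end_to_end": 1.0}          # hypothetical figures
option_a = residual_risk(risks, {"new_module": 1.0})    # module risk eliminated: 1.0 left
option_b = residual_risk(risks, {"end_to_end": 0.5})    # system-wide risk halved: about 0.7 left
```

Option A feels safer because one risk disappears completely, yet option B leaves less total risk. This is exactly the trade-off that zero-risk bias obscures.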

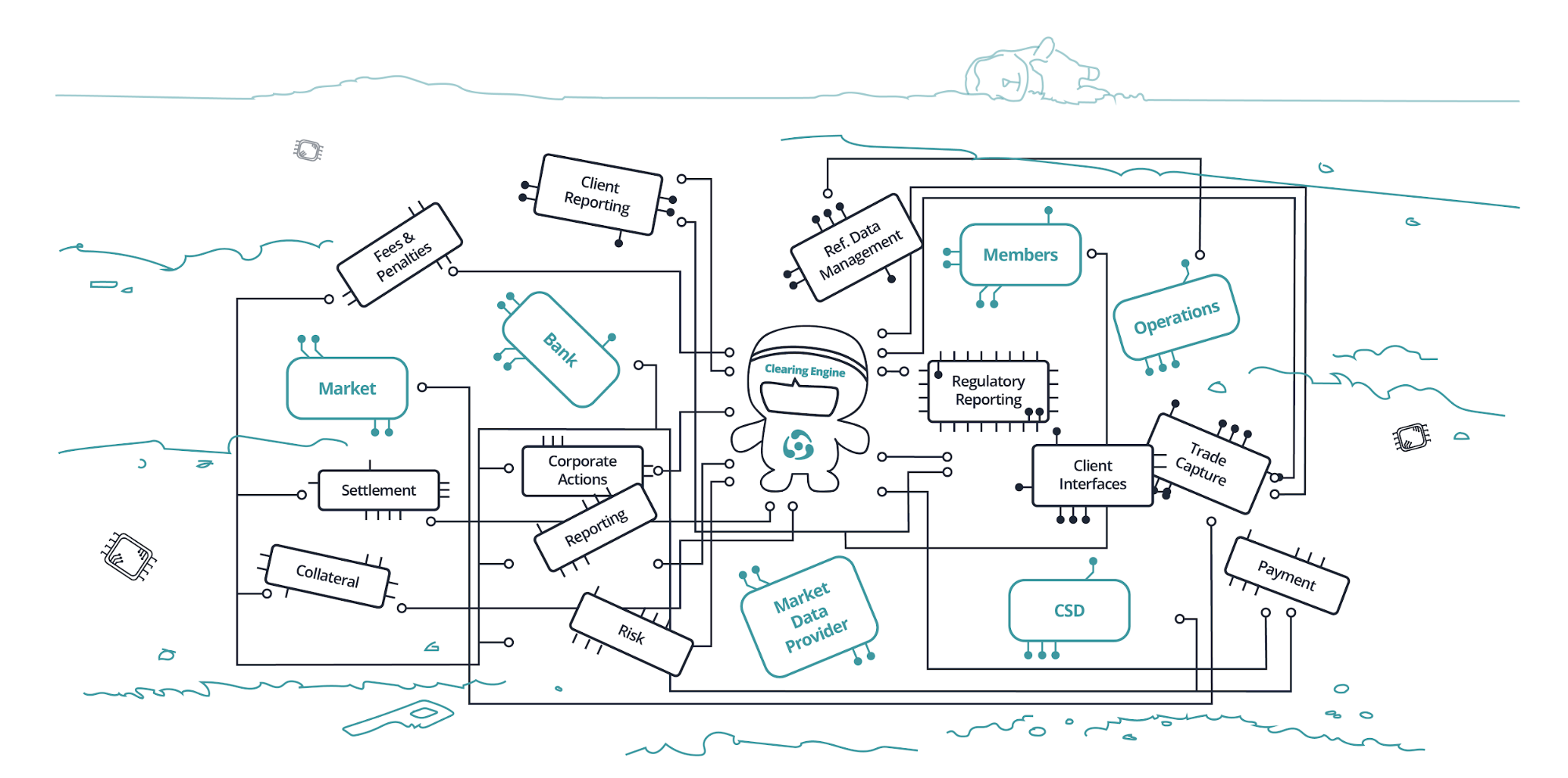

10. Non-deterministic Systems: Financial Market Infrastructures

Many are concerned about the unpredictability of AI-based systems. Yet people tend to underestimate the complexity of existing distributed non-deterministic platforms.

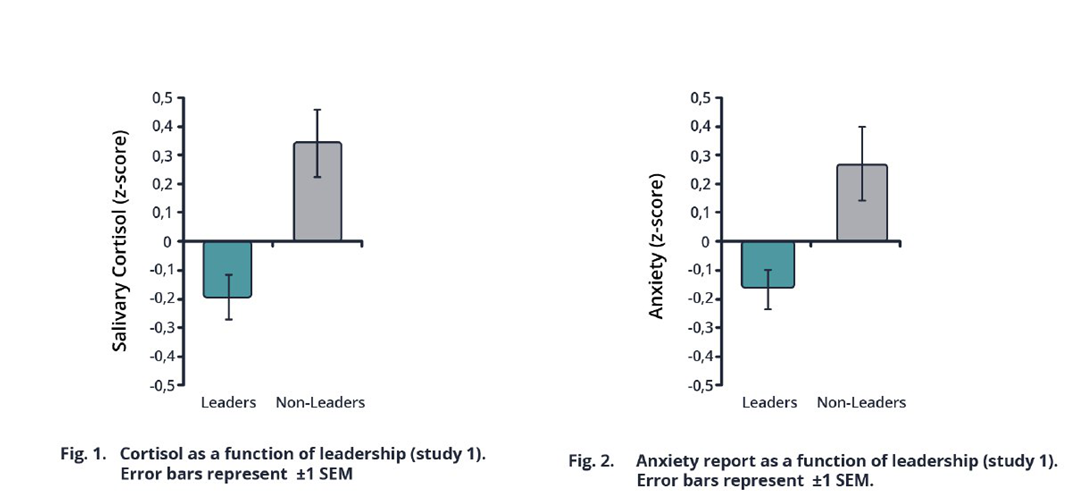

Regarding complex distributed platforms built with traditional technologies as less challenging than AI systems is an example of the illusion of control bias at work. This illusion makes people happier and less stressed, as multiple studies suggest, though less stress does not necessarily mean better performance [19], [20].

References

[1] Financial stability implications from fintech: Supervisory and regulatory issues that merit authorities’ attention. A report by the Financial Stability Board. 27 June 2017. URL: https://www.fsb.org/wp-content/uploads/R270617.pdf.

[2] Artificial intelligence and machine learning in financial services: Market developments and financial stability implications. A report by the Financial Stability Board. 1 November 2017. URL: https://www.fsb.org/wp-content/uploads/P011117.pdf.

[3] FinTech and market structure in financial services: Market developments and potential financial stability implications. A report by the Financial Stability Board. 14 February 2019. URL: https://www.fsb.org/wp-content/uploads/P140219.pdf.

[4] Europe RCG discusses artificial intelligence, financial vulnerabilities and FSB work programme. A press release by the Financial Stability Board. 9 May 2019. URL: https://www.fsb.org/wp-content/uploads/R090519.pdf.

[5] Ethics guidelines for trustworthy AI. By the High-Level Expert Group on Artificial Intelligence, the European Commission. 8 April 2019. URL: https://ec.europa.eu/newsroom/dae/document.cfm?doc_id=60419.

[6] Policy and investment recommendations for trustworthy AI. By the High-Level Expert Group on Artificial Intelligence, the European Commission. 26 June 2019. URL: https://ec.europa.eu/newsroom/dae/document.cfm?doc_id=60343.

[7] Carsten Jung (BoE), Henrike Mueller (FCA), Simone Pedemonte (FCA), Simone Plances (FCA) and Oliver Thew (BoE). Machine learning in UK financial services. Joint report by the FCA and the Bank of England. October 2019. URL: https://www.bankofengland.co.uk/-/media/boe/files/report/2019/machine-learning-in-uk-financial-services.pdf.

[8] A4Q (Alliance for Qualification) AI and Software Testing Foundation Syllabus. Version 1.0. 17 September 2019. URL: https://www.gasq.org/files/content/gasq/downloads/certification/A4Q%20AI%20&%20Software%20Testing/AI_Software_Testing_Syllabus%20(1.0).pdf.

[9] Ng, Andrew. Machine Learning Yearning: Technical strategy for AI engineers, in the era of deep learning. Draft version. 2018. URL: https://www.deeplearning.ai/machine-learning-yearning/.

[10] Monetary Authority of Singapore. Annex A: Summary of the FEAT Principles. 2018. URL: https://www.mas.gov.sg/-/media/MAS/resource/news_room/press_releases/2018/Annex-A-Summary-of-the-FEAT-Principles.pdf.

[11] Ng, Andrew. A Foreword. The Batch. Essential news for deep learners. December 4, 2019. URL: https://blog.deeplearning.ai/blog/google-ai-explains-itself-neural-net-fights-bias-ai-demoralizes-champions-solar-power-heats-up.

[12] Salman, I. (2016). Cognitive biases in software quality and testing. Proceedings of the 38th International Conference on Software Engineering Companion - ICSE ’16. Pp. 823-826. URL: https://dl.acm.org/citation.cfm?doid=2889160.2889265.

[13] Mohanani, R., Salman, I., Turhan, B., Rodríguez, P., & Ralph, P. (2018). Cognitive Biases in Software Engineering: A Systematic Mapping Study. IEEE Transactions on Software Engineering. URL: https://ieeexplore.ieee.org/document/8506423.

[14] Darling, K., Nandy, P., & Breazeal, C. (2015). Empathic Concern and the Effect of Stories in Human-Robot Interaction. Proceedings of the IEEE International Workshop on Robot and Human Communication (RO-MAN), 2015. 6 p. URL: https://dspace.mit.edu/handle/1721.1/109059.

[15] Moskaleva, O., Gromova, A. Creating Test Data for Market Surveillance Systems with Embedded Machine Learning Algorithms. Proceedings of the Institute for System Programming, vol. 29, issue 4, 2017, pp. 269-282. URL: https://www.ispras.ru/en/proceedings/isp_29_2017_4/isp_29_2017_4_269/.

[16] Itkin, I., et al. (2019). User-Assisted Log Analysis for Quality Control of Distributed Fintech Applications. 2019 IEEE International Conference On Artificial Intelligence Testing (AITest), Newark, CA, USA, pp. 45-51. URL: https://ieeexplore.ieee.org/abstract/document/8718213.

[17] Itkin, I., Yavorskiy, R. Overview of Applications of Passive Testing Techniques. In: Proceedings of the MACSPro Workshop 2019. Vienna, Austria, March 21-23, 2019, pp. 104-115. URL: http://ceur-ws.org/Vol-2478/paper9.pdf.

[18] Law of Triviality: Wikipedia, the free encyclopedia. 8 November 2019. URL: https://en.wikipedia.org/wiki/Law_of_triviality.

[19] Sherman, G. D., Lee, J. J., Cuddy, A. J. C., Renshon, J., Oveis, C., Gross, J. J., & Lerner, J. S. (2012). Leadership is associated with lower levels of stress. Proceedings of the National Academy of Sciences, 109(44), 17903–17907. URL: https://www.pnas.org/content/109/44/17903.

[20] Fenton-O’Creevy, M., Nicholson, N., Soane, E., & Willman, P. (2003). “Trading on illusions: Unrealistic perceptions of control and trading performance”. Journal of Occupational and Organizational Psychology, 76(1), 53–68. URL: http://web.mit.edu/curhan/www/docs/Articles/biases/76_J_Occupational_Organizational_Psychology_53_%28OCreevy%29.pdf.