
By Iosif Itkin, CEO and co-founder, Exactpro

Introduction

Interest in artificial intelligence (AI) methods and technologies has surged in recent years across many areas of human activity directly or indirectly related to IT. Predictably, these areas include software testing and QA.

Software testing requires complex approaches to complex systems that work under high load and leverage the latest technologies available. Testing such systems requires the involvement of highly qualified IT specialists. For IT companies to find and employ such specialists and for the specialists themselves to prove their unique skills, the importance of internationally recognised certificates is unquestionable.

The International Software Testing Qualifications Board (ISTQB) has for years offered professional testers an opportunity to take a test and confirm their qualifications with a certificate. But for professionals who aim to work in software testing while applying AI to their daily tasks, a new exam has now emerged. It is called AI and Software Testing. The certification is provided by the Alliance for Qualification (A4Q).

Similarities and Differences between A4Q’s AI and Software Testing and ISTQB Foundation Level

Exactpro works with multiple partners. Last year, the company joined the Zero Outage organisation. Exactpro also participates in the FIX protocol working group that dedicates its efforts to developing best practices of monitoring, onboarding and testing of FIX-related financial applications. We collaborate with the GASQ centre of planning and certification and are a platinum partner of ISTQB.

Generally, there are two main schools of thought in regard to ISTQB and its value for the profession of a software tester. One of these schools declares that ISTQB is a nonprofit organisation that promotes software testing all over the world, sets minimum necessary standards of what a tester needs to know and helps people improve their skills and increase their pay. One of the best known representatives of this school of thought is, of course, Rex Black.

The second school of thought claims that ISTQB is a “global conspiracy” meant to “destroy” the profession of a tester, and that it is more about earning money than anything else. The key industry specialists representing this school of thought are James Marcus Bach and Michael Bolton.

Nevertheless, ISTQB Foundation Level gives a general snapshot of knowledge and a glossary of terms that a tester or a developer may encounter in their work. It’s a much more extensive list of topics than a junior specialist comes across while starting to work on a specific project in a specific organisation. So, at the start of a career, one may find reading this syllabus very helpful for getting a comprehensive look at the subject area.

A4Q’s AI and Software Testing curriculum suggests that preparation typically takes 17 hours and 10 minutes. This is exactly 25 minutes more than the time required for ISTQB preparation. If we compare the content (the sheer volume of the text) of the ISTQB Foundation Level and the A4Q AI and Software Testing exams, the ratio is 64/40 in favour of ISTQB, which might suggest that, in reality, ISTQB Foundation Level takes longer to prepare for.


The main similarities between the two exams are listed below:

- Both exams contain 40 multiple-choice questions

- In order to pass, the applicant needs to answer at least 26 questions correctly

- Applicants are given 60 minutes for the exam; it’s possible to request an additional 15 minutes if the language of the exam is not the applicant’s native language, but this request has to be made in advance

- Both exams cost the same.

There are also significant differences:

- ISTQB’s exam is a result of 20 years of work. People have invested 20 years into polishing everything that is included in ISTQB Foundation Level. A4Q AI and Software Testing was written in two or three weeks, and this is obvious from reading the document. This exam is, essentially, a work in progress, a subject for future development. Exactpro has signed an NDA with ISTQB and GASQ aiming to work on the improvement of the learning materials and of the exam itself.

- A4Q and ISTQB differ as organisations: the latter has wider social support, whereas the former is a more exclusive organisation and more of a commercial one. Still, ISTQB’s plan is – and this is confirmed by GASQ – to take the existing A4Q exam and create an improved exam based on it, making it an official ISTQB exam.

The Essentials of the A4Q AI and Software Testing Certification

The A4Q AI and Software Testing syllabus can be downloaded from the test providers’ websites (GASQ, iSQI). It consists of three chapters:

- Basic aspects of AI

- Testing AI

- AI testing (i.e. using AI and Machine Learning to test software)

We will now review these chapters and most of their sub-chapters one by one.

1. Basic Aspects of AI

The first part of the exam gives a high-level representation of the knowledge related to the area of Machine Learning and testing. It covers the following topics:

- 1.1 What are Human Intelligence and Artificial Intelligence?

- 1.2 AI History

- 1.3 Symbolic AI

- 1.4 Sub-Symbolic AI

- 1.5 Some ML algorithms (Machine Learning) in More Detail

- 1.6 Applications and Limits of AI

1.1 What are Human Intelligence and Artificial Intelligence?

According to the Theory of multiple intelligences, at least eight different types of intelligence exist:

- Musical-rhythmic

- Visual-spatial

- Verbal-linguistic

- Logical-mathematical

- Bodily-kinesthetic

- Interpersonal

- Intrapersonal

- Naturalistic

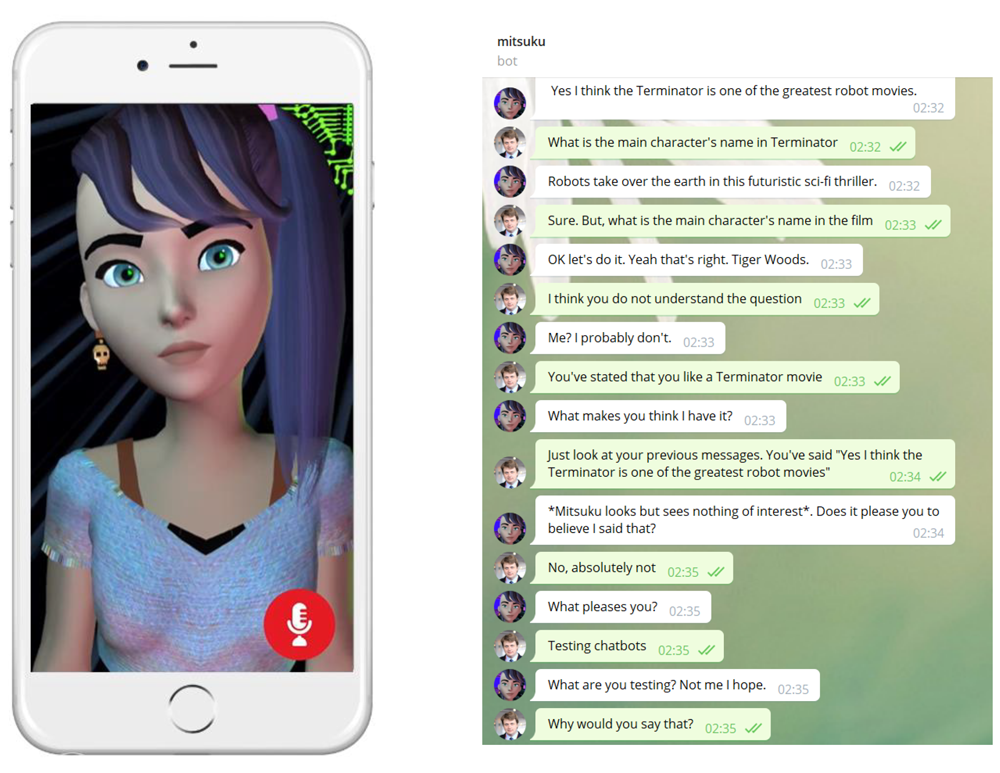

An article about it is included in the syllabus, and it is highly recommended to read it and understand how much human intelligence actually encompasses. For the artificial intelligence part, understanding the Turing Test concept is essential. Here is how my attempt at testing Mitsuku – a five-time winner of the Loebner Prize Turing Test – turned out:

1.2 AI History

This part of the syllabus contains some basic statements on when the term appeared, why at some moments AI “hibernated” and when it regained explosive growth. Comparing the syllabus with the respective Wikipedia page shows that Wikipedia gives all the necessary information for preparation. In fact, it has even more information than is included in the syllabus.

1.3 Symbolic AI

Symbolic AI means using the definitions and concepts of the subject matter and the operations of formal logic in order to arrive at conclusions. One of the simplest examples of Symbolic AI is a software library that analyses a mathematical formula entered by a user to figure out the correct order of operations. Formal verification of algorithms is another area of Symbolic AI.

For the purpose of the exam, the chapter on the topic included in the syllabus should be enough.

1.4 Sub-symbolic AI

When most people think of AI or ML, they are actually thinking of Sub-Symbolic AI. Sub-Symbolic AI is the type of AI that doesn’t deal with formal notions and only deals with data.

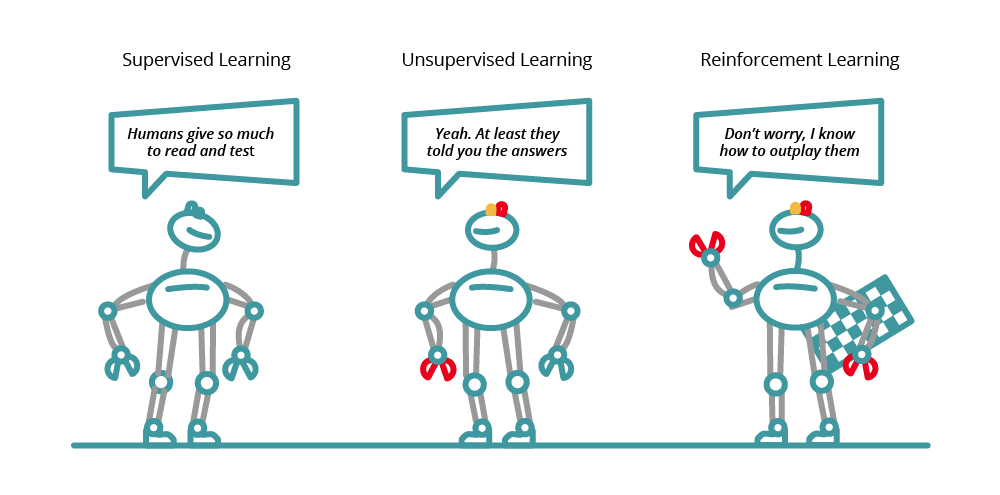

It can be broken up into three main types:

- Supervised learning is, for example, when we take pictures and mark them with, let’s say, “cats” and “dogs” labels. After that, the program can tell them apart and determine, with a certain degree of probability, what is in a picture.

- Unsupervised learning is, for instance, loading a large stream of data into the system and having it check whether the data has any anomalies by trying to break the stream of data into several clusters. This way, we receive some information about the data, but we don’t train the system: it trains itself.

- Reinforcement learning is when we continuously compare the model that we get against itself and against other models, and gradually improve it.

To pass the exam, it is important to understand how these three types of Sub-Symbolic AI differ and what techniques they comprise.

| Learning Types | Algorithms |
|---|---|
| Supervised | Bayes belief networks and naïve Bayes classifiers |
| Unsupervised | K-means clustering |

To fully comprehend it or just be more confident in these differences, you may have to go beyond the syllabus. A standard beginner Machine Learning course would reinforce your knowledge of the basic concepts. The exam is also bound to contain a question about the Naive Bayes Classifier. The following lecture is a great source of information on the topic.
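To make the Naive Bayes idea more concrete, here is a minimal sketch of a word-count classifier with add-one (Laplace) smoothing. It is not taken from the syllabus: the function names, the training sentences and the "defect"/"feature" labels are all made up for illustration.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(samples):
    """Count labels and per-label word frequencies from (words, label) pairs."""
    label_counts = Counter()
    word_counts = defaultdict(Counter)
    vocabulary = set()
    for words, label in samples:
        label_counts[label] += 1
        word_counts[label].update(words)
        vocabulary.update(words)
    return {"labels": label_counts, "words": word_counts, "vocab": vocabulary}


def predict(model, words):
    """Return the label with the highest posterior log-probability."""
    total = sum(model["labels"].values())
    vocab_size = len(model["vocab"])
    best_label, best_score = None, -math.inf
    for label, count in model["labels"].items():
        score = math.log(count / total)  # log prior P(label)
        n_words = sum(model["words"][label].values())
        for word in words:
            # "Naive" step: words are treated as independent given the label.
            # Add-one smoothing keeps unseen words from zeroing the product.
            seen = model["words"][label][word]
            score += math.log((seen + 1) / (n_words + vocab_size))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical labelled data: this is the "supervised" part.
TRAINING = [
    ("login button crashes app".split(), "defect"),
    ("crash on order submit".split(), "defect"),
    ("please add dark theme".split(), "feature"),
    ("add export to csv".split(), "feature"),
]
MODEL = train_naive_bayes(TRAINING)
print(predict(MODEL, "app crashes on login".split()))
```

The labels supplied with the training data are what makes this supervised learning; the classifier only estimates probabilities from the counts.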

1.5 Some ML algorithms (Machine Learning) in More Detail

This chapter dives a little deeper into how Bayesian belief networks work, including the Naïve Bayes classifier, as well as Support Vector Machines and K-means. This is accompanied by a throwback to the perceptron learning algorithm.
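As an illustration of the unsupervised side, below is a rough sketch of K-means on 2-D points. The data and the starting centroids are invented, and the initial centroids are passed in explicitly (an assumption made here to keep the result reproducible; real implementations usually pick them randomly).

```python
def k_means(points, centroids, iterations=10):
    """Plain K-means on 2-D points; `centroids` are the initial guesses."""
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for x, y in points:
            distances = [(x - cx) ** 2 + (y - cy) ** 2 for cx, cy in centroids]
            clusters[distances.index(min(distances))].append((x, y))
        # Update step: move each centroid to the mean of its cluster.
        updated = []
        for cluster, old in zip(clusters, centroids):
            if cluster:
                mean_x = sum(x for x, _ in cluster) / len(cluster)
                mean_y = sum(y for _, y in cluster) / len(cluster)
                updated.append((mean_x, mean_y))
            else:
                updated.append(old)  # keep an empty cluster's centroid in place
        centroids = updated
    return centroids, clusters


POINTS = [(1, 1), (1, 2), (2, 1), (8, 8), (9, 8), (8, 9)]
CENTROIDS, CLUSTERS = k_means(POINTS, [(0, 0), (10, 10)])
print(CLUSTERS)
```

No labels are supplied anywhere: the two groups emerge from the distances alone, which is the key difference from the supervised algorithms in the table above.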

1.6 Applications and Limits of AI

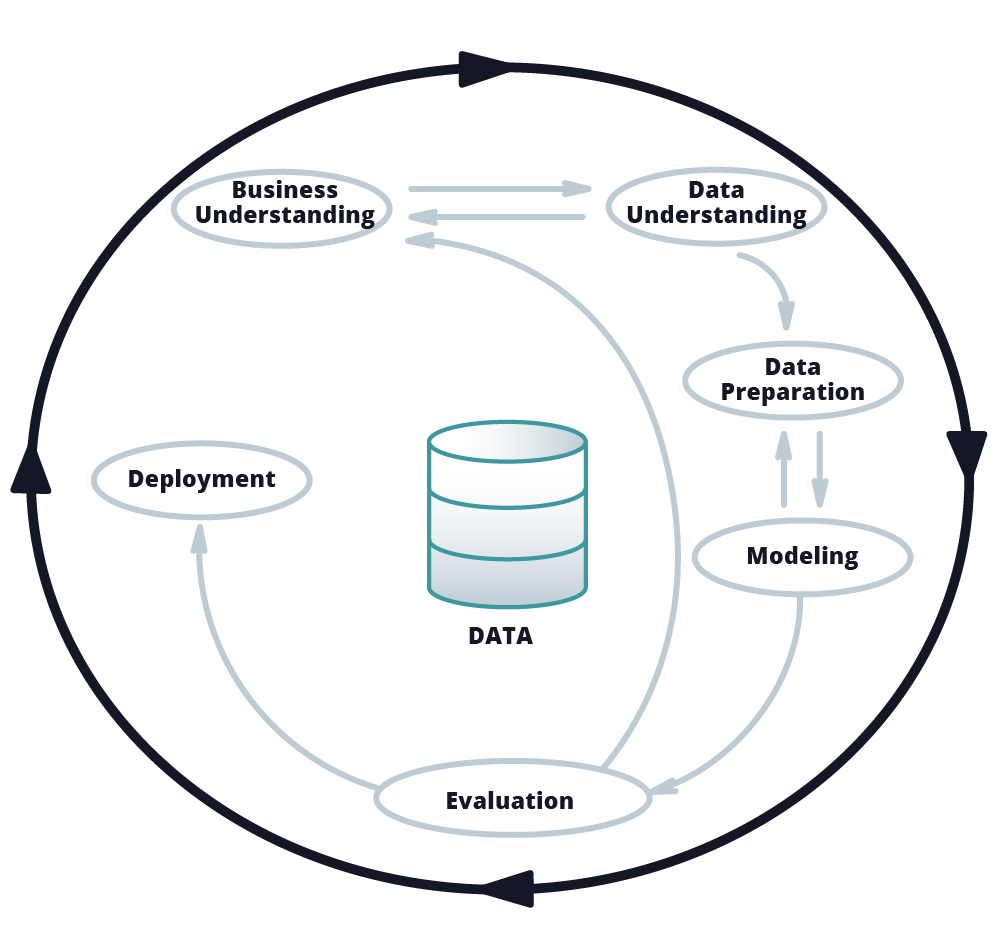

Unfortunately, the syllabus limits this chapter to the content of one article describing CRISP-DM. Although the concept behind the method is quite intuitive, the questions for this chapter are more focused on the terms than the concept itself.

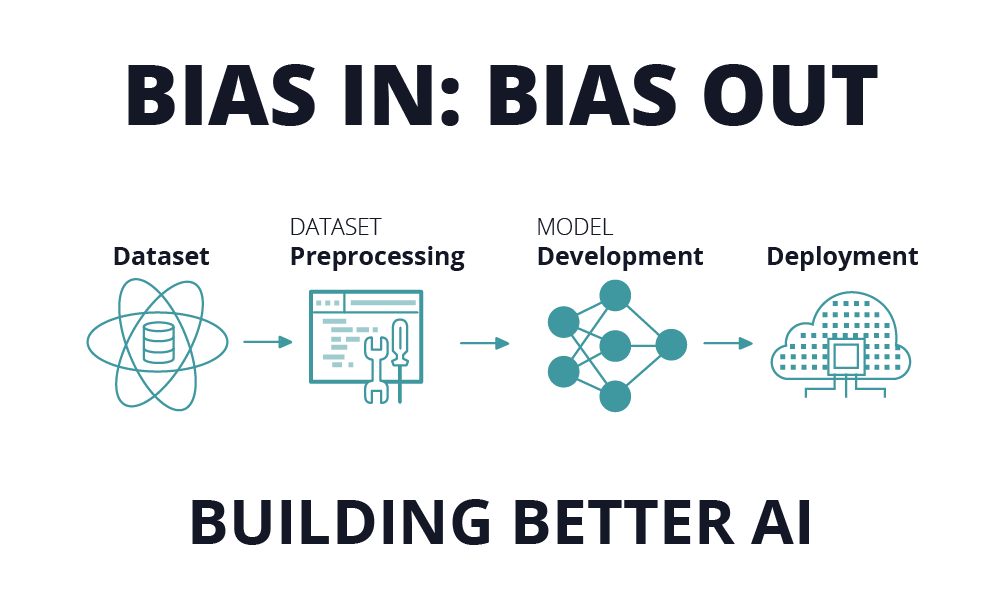

An important part of the exam and the syllabus is dedicated to the notion of bias – and cognitive bias – in AI. The information about it is widely available.

There are few videos dedicated to A4Q’s AI and Software Testing. One of them was recorded by Rex Black, the author of one of the most important books on the Foundation Level exam preparation. In this video, the author walks us through the syllabus and mostly concentrates on what a bias is, since at the exam, the probability of getting a question related to biases is extremely high.

Chapter 1.6 of the syllabus focuses on the ethics issues around AI. It’s been mentioned that Exactpro has been part of the initiative to transfer the A4Q exam from its commercial form into the realm of the global ISTQB board. A lot of preparatory work is being carried out to improve the training materials and the exam itself. But the work has been hindered for the following reason: currently, the textbook covers the ethics issues surrounding AI, while certain governments officially oppose the idea of it being a legitimate issue. On the other hand, the majority of countries believe that ethics issues are essential for AI, and they have to be included in the textbooks. So far, these countries cannot find a consensus.

2. Testing AI

2.1 General Problems with Testing AI Systems

This chapter describes the main problems related to testing AI. Four terms are introduced:

- Non-Deterministic

- Probabilistic

- Non-Testable

- The Oracle Problem

Normally, a Test Oracle allows us to answer the question of whether the system behaved correctly in a given test case. However, the behaviour of AI systems, like that of other non-deterministic systems, is often impossible to predict, which makes such systems very hard to test. Let’s say that the system is supposed to remove flash glare from photos. Assessing “how well” it has been removed is not a trivial task.

In addition, AI systems are generally prone to drift. At some point, the system may be functioning as expected, and then it may suddenly start behaving in a different way. Drift happens when the connection between the input and the output changes; this term is also included in the syllabus.
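One simple way to watch for drift is to compare the model's recent accuracy against a baseline window. The sketch below assumes that labelled outcomes (prediction correct or not) keep arriving after deployment; the function name, window size and tolerance are hypothetical choices, not something prescribed by the syllabus.

```python
def detect_drift(outcomes, window=50, tolerance=0.10):
    """Return the index at which drift is first suspected, or None.

    `outcomes` is a list of booleans (was the prediction correct?).
    The first `window` outcomes form the baseline accuracy; drift is
    flagged when a later window falls more than `tolerance` below it.
    """
    if len(outcomes) < 2 * window:
        return None  # not enough data to compare two windows
    baseline = sum(outcomes[:window]) / window
    for end in range(2 * window, len(outcomes) + 1):
        recent = sum(outcomes[end - window:end]) / window
        if baseline - recent > tolerance:
            return end - 1
    return None


# Synthetic data: 90% accuracy at first, then the input-output
# relationship changes and accuracy drops to 50%.
STABLE = ([True] * 9 + [False]) * 10
DRIFTED = [True, False] * 50
print(detect_drift(STABLE + DRIFTED))
```

Accuracy is only a proxy here: it shows that the connection between input and output has changed, not why it changed.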

The main difficulty with testing AI-based systems is that, in many cases, they aren’t deterministic, and their behaviour can only be predicted with a certain degree of probability. Sometimes, they are not testable at all, i.e. it’s impossible to verify whether the system works correctly or incorrectly. They are subject to the Oracle problem, where it’s impossible to find the way to answer the question of “whether what we get is good or bad”.

There have been applications of AI to the mortgage industry, where there is no criterion allowing for easy distribution of people into “who gets a loan and who doesn’t”. If there were one, that would be an Oracle. But in most cases, such a system outputs a probability, and it’s impossible to say “yes” or “no” definitely.

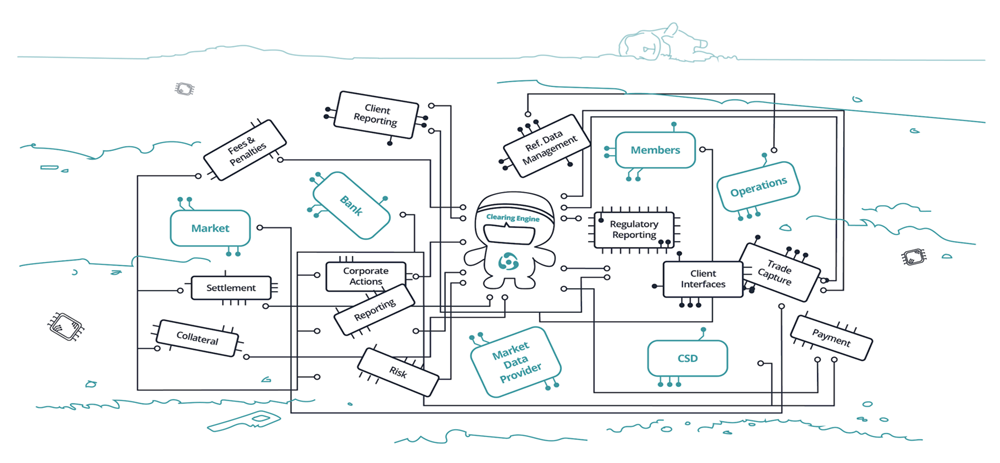

While these four problems may scare website testers whose verifications come down to writing Gherkin scenarios, those working with trading and clearing systems are used to them. These systems work under a high load: their testing isn’t limited to sending a message and verifying the response. Their testing involves fuzzing and carrying out tests at the confluence of functional and nonfunctional testing. The number of messages that need to be sent can run into the millions, and, as a result, it has to be clear whether the system works correctly or not. It’s impossible to predict what the particular answer will be in every particular case – it is, in many ways, not determined. Still, we are able to verify that it works correctly in some respects.

The methods applicable to testing complex systems are also applicable to testing AI systems. One such method is Model-based Testing. Another one is using indirect testing techniques. Direct testing methods imply that we know what answer we want to get to our direct message. An example of indirect methods is passive testing.

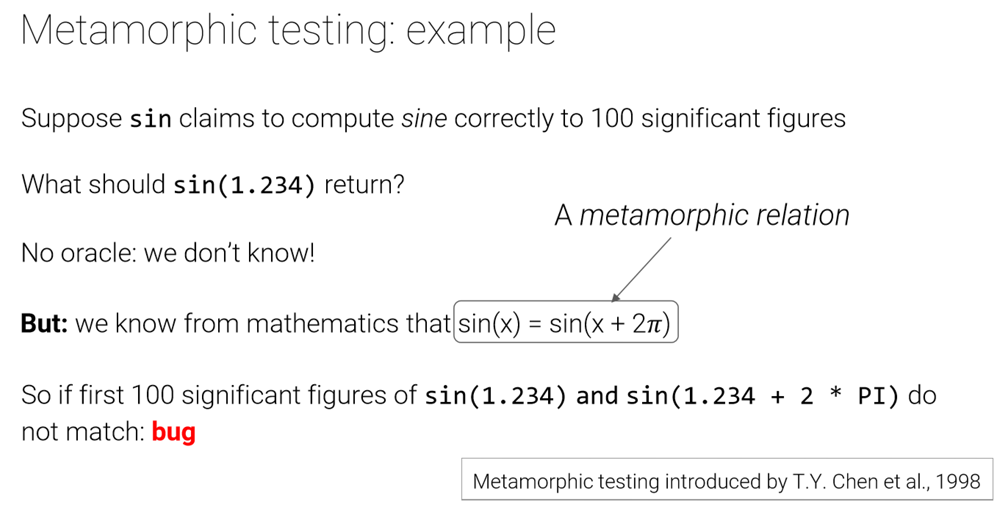

The syllabus provides an example of another testing method that is useful regardless of whether you are taking this exam or not: Metamorphic testing. It means verifying relations between inputs and outputs that must hold even when the exact expected output is unknown. Consider this example:

Now for a more practical example: when we upload a large number of transactions for complex securities trading among themselves into the system, it is impossible to predict with certainty which transactions will come from the system. But it is possible to state that the buying volume will equal the selling volume. If we see discrepancies between the two numbers (even though we don’t know the exact value that we should get), we can instantly see that something is wrong. If we expect the system to make some calculations, and we know that the ML-based algorithm should calculate an incremental daily increase, then the verification can consist in making sure that the value for the next day is higher than the value for the previous day, even if the exact value is unknown.
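The two checks described in this paragraph can be sketched as follows. The `match_orders` function is a toy stand-in for a system under test, invented purely for illustration; it bears no relation to any real matching engine. The checks themselves never look at the exact fills, only at relations that must hold.

```python
import random


def match_orders(orders):
    """Toy system under test: crosses (side, quantity) orders in arrival order.

    Returns execution reports as (side, traded_quantity) tuples,
    one report for each side of every trade.
    """
    book = {"buy": [], "sell": []}
    reports = []
    for side, qty in orders:
        opposite = "sell" if side == "buy" else "buy"
        while qty > 0 and book[opposite]:
            resting = book[opposite][0]
            traded = min(qty, resting)
            reports.append((side, traded))
            reports.append((opposite, traded))
            qty -= traded
            if resting == traded:
                book[opposite].pop(0)
            else:
                book[opposite][0] = resting - traded
        if qty > 0:
            book[side].append(qty)  # the remainder rests in the book
    return reports


def volumes_balance(reports):
    """Metamorphic relation: total bought volume equals total sold volume."""
    bought = sum(qty for side, qty in reports if side == "buy")
    sold = sum(qty for side, qty in reports if side == "sell")
    return bought == sold


def is_strictly_increasing(values):
    """Relation for a daily value that is expected to grow every day."""
    return all(later > earlier for earlier, later in zip(values, values[1:]))


rng = random.Random(7)
ORDERS = [(rng.choice(["buy", "sell"]), rng.randint(1, 100)) for _ in range(1000)]
print(volumes_balance(match_orders(ORDERS)))
```

Neither check needs to know which trades should have happened, which is exactly what makes this kind of verification usable when there is no oracle.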

At Lehman Brothers, they relied on Monte Carlo analysis in calculating derivatives prices performed by hundreds of thousands of servers. There are two ways to test such calculations: one is to reproduce them, which would entail having the same amount of servers, the other is to rely on the known limitations of these calculations. And that would be another example of application of Metamorphic testing.
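As a hedged illustration of "relying on known limitations", the sketch below prices a European call option with a small Monte Carlo simulation and checks the result against properties that must hold whatever the exact price is. The choice of instrument, the lognormal price model, the parameters and the function names are all assumptions made for this example; they say nothing about how any bank actually ran its calculations.

```python
import math
import random


def monte_carlo_call_price(spot, strike, rate, volatility, maturity,
                           paths=20000, seed=42):
    """Estimate a European call price by simulating lognormal terminal prices."""
    rng = random.Random(seed)
    drift = (rate - 0.5 * volatility ** 2) * maturity
    diffusion = volatility * math.sqrt(maturity)
    total_payoff = 0.0
    for _ in range(paths):
        terminal = spot * math.exp(drift + diffusion * rng.gauss(0.0, 1.0))
        total_payoff += max(terminal - strike, 0.0)
    # Discount the average payoff back to today.
    return math.exp(-rate * maturity) * total_payoff / paths


def within_no_arbitrage_bounds(price, spot, strike, rate, maturity):
    """A call is worth at least max(S - K*exp(-rT), 0) and at most S."""
    lower = max(spot - strike * math.exp(-rate * maturity), 0.0)
    return lower <= price <= spot


PRICE = monte_carlo_call_price(100.0, 100.0, 0.05, 0.2, 1.0)
print(within_no_arbitrage_bounds(PRICE, 100.0, 100.0, 0.05, 1.0))

# Second relation: with the same random paths, a higher strike
# can never make the call more expensive.
CHEAP_STRIKE = monte_carlo_call_price(100.0, 90.0, 0.05, 0.2, 1.0)
DEAR_STRIKE = monte_carlo_call_price(100.0, 110.0, 0.05, 0.2, 1.0)
print(CHEAP_STRIKE >= DEAR_STRIKE)
```

Both checks run on a laptop and require no second server farm: they test the calculation against what is known about it rather than against a reproduced value.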

2.2 Machine Learning Model Training and Testing

As extra reading for Chapter 2.2, I would recommend the Machine Learning Yearning book by Andrew Ng. In the book, the famous data scientist describes in detail the difference between a training set, a development set and a test set. It is not crucial in preparing for the exam, but it is very useful for understanding how systems work in Machine Learning.
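A minimal sketch of such a three-way split is shown below. The 70/15/15 proportions and the function name are assumptions chosen for illustration, not figures from the book or the syllabus.

```python
import random


def split_dataset(samples, train=0.7, dev=0.15, seed=0):
    """Shuffle the samples and cut them into training, development and test sets."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    train_end = round(len(shuffled) * train)
    dev_end = train_end + round(len(shuffled) * dev)
    # Training set: fit the model. Development set: tune it and compare variants.
    # Test set: touched only for the final, unbiased estimate of quality.
    return shuffled[:train_end], shuffled[train_end:dev_end], shuffled[dev_end:]


TRAIN_SET, DEV_SET, TEST_SET = split_dataset(range(100))
print(len(TRAIN_SET), len(DEV_SET), len(TEST_SET))
```

The important property is that the three sets do not overlap, so results on the test set are not contaminated by decisions made during training or tuning.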

2.3 AI Test Environments

This section is also limited to one article on the D-SOAKED model for Test Environment description, what it includes, how deterministic and how static it is, etc. The chapter mainly focuses on the terms connected with the model, and so will the questions for this chapter.

One very useful piece of information in the Testing AI part of the syllabus is the classification describing unique quality characteristics of AI systems:

- Ability to learn – discussed in detail in every machine learning textbook: how models are trained, what is bias, what is variance, etc. In the majority of cases, this is done by the team that develops the model itself.

- Ability to generalize – the ability of the system to work and react to scenarios it hasn’t seen before. (Do you remember that chick robot from Robocop that walked well in the room, but had trouble as soon as it approached the stairs? That’s a great illustration of what happens when an AI system fails to generalize).

- Trustworthiness. This metric is described in numerous works, originating anywhere from the Pentagon to various regulators. How can we understand that we trust a machine learning system? What is the level of explainability? Do we consider it fair? Safe? Ethical? This issue is still a work in progress.

In essence, the verification of the ability to generalize is the scope of end-to-end and negative testing. The techniques applicable to conducting end-to-end and negative testing of complex large-scale systems are applicable to verifying AI systems’ ability to generalize.

3. AI Testing

3.1 AI in Testing

The last syllabus chapter – AI Testing – is about applying techniques based on machine learning to testing all sorts of systems. This section starts with a detailed explanation of the Test Oracle concept that we touched upon earlier, with an emphasis on test design.

The chapter contains a really well-argued lineup of cases of implementing AI for software testing. These range from bug triaging and optimising test runs to anomaly detection in GUI testing. There’s also a list of tasks that AI cannot be applied to. These are, among others, setting the Test Oracle or defining the user needs.

3.2 Applying AI to Testing Tasks and Quality Management (Exactpro experience)

Defect prioritization is one of the areas of AI application to testing. Anyone with a large project containing thousands of defects has a potential training dataset and can implement this use case. Exactpro’s Nostradamus analyses the description of a defect and outputs the probability of it being fixed within a certain period of time, rejected or not considered a defect. It is possible to change the description of the defect and thus affect the predicted probability of it being fixed within that time.

Supervised Learning is used in Exactpro’s functional testing tool Sailfish to train the tool to determine which of the failed messages is most likely to contain the actual cause of failure. Out of the list of failed messages, we need to find the one closest to the actual cause – it will be the key to what went wrong. This technique is convenient when test volumes are high.

As for Unsupervised Learning, we perform clustering based on log messages. After that, the system lets us see whether something went wrong in each set of clusters or draws our attention to a new cluster. Dividing a system into clusters simplifies the testing of a large system. Please see User-Assisted Log Analysis for Quality Control of Distributed Fintech Applications for a detailed description of the solution.

The syllabus also reviews the idea of using AI to create test data. A running example used is the This Person Does not Exist website, which is powered by a pair of neural networks. One of them takes a large set of photographs and creates a completely new photograph. The other one evaluates how realistic the photograph looks and challenges the first network to improve on its output. As a result, every time someone visits the website, they see a new face that looks like a real photograph, but, in fact, is not that of an existing person. This brings us back to assessing the performance of AI-based solutions. How does one know that this system performs badly? What would be the test oracle?

3.3 AI in Component Level Test Automation

This, as follows from the name, is about applying AI on the component level. It is a much less trivial task than applying it on the system level. The reasons for that are listed in the syllabus. For example, on the system level it is much easier to find the Oracle; it is also easier to set input and output data, since they are often part of the system; there are monitoring mechanisms in place, etc.

When you apply AI on the level of separate modules (classes), it is much more difficult. The syllabus makes a reference to an open-source solution that creates unit tests for Java classes, and then runs them and looks at the resulting test coverage.

Another application of AI is using it in GUI testing. Sometimes, the only way to retrieve information from a website’s grid is text recognition. Traditional test automation methods are more fragile than AI-based ones. For example, a change in the internal structure, like the location of a button or an inscription on it, would make little difference to an AI algorithm. Traditional test automation, by contrast, would be derailed by these UI changes.

The AIST (Artificial Intelligence for Software Testing) association is worth looking into for those interested in this topic. There are also a lot of publications about how to “outsmart” sites and applications. In fact, the majority of works about applying AI to testing are about finding the difference between the ‘before’ and the ‘after’. A typical example would be test.ai.

3.5 AI-based Tool Support for Testing

The last part of the syllabus covers the problems with the existing tools for this kind of software. One has to be very sceptical about vendors’ claims of what their AI systems are capable of doing for testing. There are multiple problems ranging from supportability to an explosive growth of test case numbers. The industry is working hard on creating ML-based tools for testing. At this stage, few of them work as described.

About the Author

Iosif Itkin is co-founder and co-CEO of Exactpro. Iosif manages business development and research in the field of high-load trading systems reliability. He is actively involved in delivering strategic software testing initiatives. Iosif has organized several industry conferences, including EXTENT - Software Testing and Trading Technology Trends Conference. He frequently speaks at worldwide FinTech events.

Iosif started his professional career in 2000 as a software engineer in a California-based software development company. For seven years, he worked as a Team Lead and a Technology Architect on projects for a number of US companies. In 2006, Iosif joined a company providing QA services in the financial sector. His extensive software development experience helped him to establish a Performance Testing Department specialising in testing high load trading systems. As a Technical Lead, Iosif was responsible for developing testing tools and implementing technical solutions for a number of leading exchanges and global investment banks. Iosif also has experience managing a consulting practice with a focus on advanced execution systems. As VP of Technology, he was responsible for implementing testing solutions on a number of complex projects for customers worldwide.

Iosif holds a Master of Science degree from the Theoretical Physics Department of the Moscow Engineering Physics Institute, from which he graduated summa cum laude.

About Exactpro

Exactpro specializes in quality assurance services and related software development with a focus on test automation for financial market infrastructures worldwide. Exactpro tools apply a variety of data analysis and machine learning techniques to improve the efficiency of automated functional testing executed under load. From May 2015 to January 2018, Exactpro was part of the Technology Services division of the London Stock Exchange Group (LSEG). In January 2018, the founders of Exactpro completed a management buyout from LSEG. Learn more at https://exactpro.com/.

Exactpro’s YouTube channel contains a lot of materials that could greatly complement the syllabus for this exam and enrich your knowledge of formal verification, software testing and software testing tools.